End-to-End Google Earth Engine (Supplementary Course Materials)

Ujaval Gandhi

- Introduction

- Advanced Supervised Classification Techniques

- k-Fold Cross Validation

- Hyperparameter Tuning

- Post-Processing Classification Results

- Principal Component Analysis (PCA)

- Multi-temporal Composites for Crop Classification

- Computing Correlation

- Calculating Band Correlation Matrix

- Calculating Area by Class

- Spectral Signature Plots

- Using Polygons for Training Data

- Stratified Sampling of GCPs

- Identify Misclassified GCPs

- Image Normalization and Standardization

- Calculate Feature Importance

- Visualizing Random Forest Decision Trees

- Classification with Migrated Training Samples

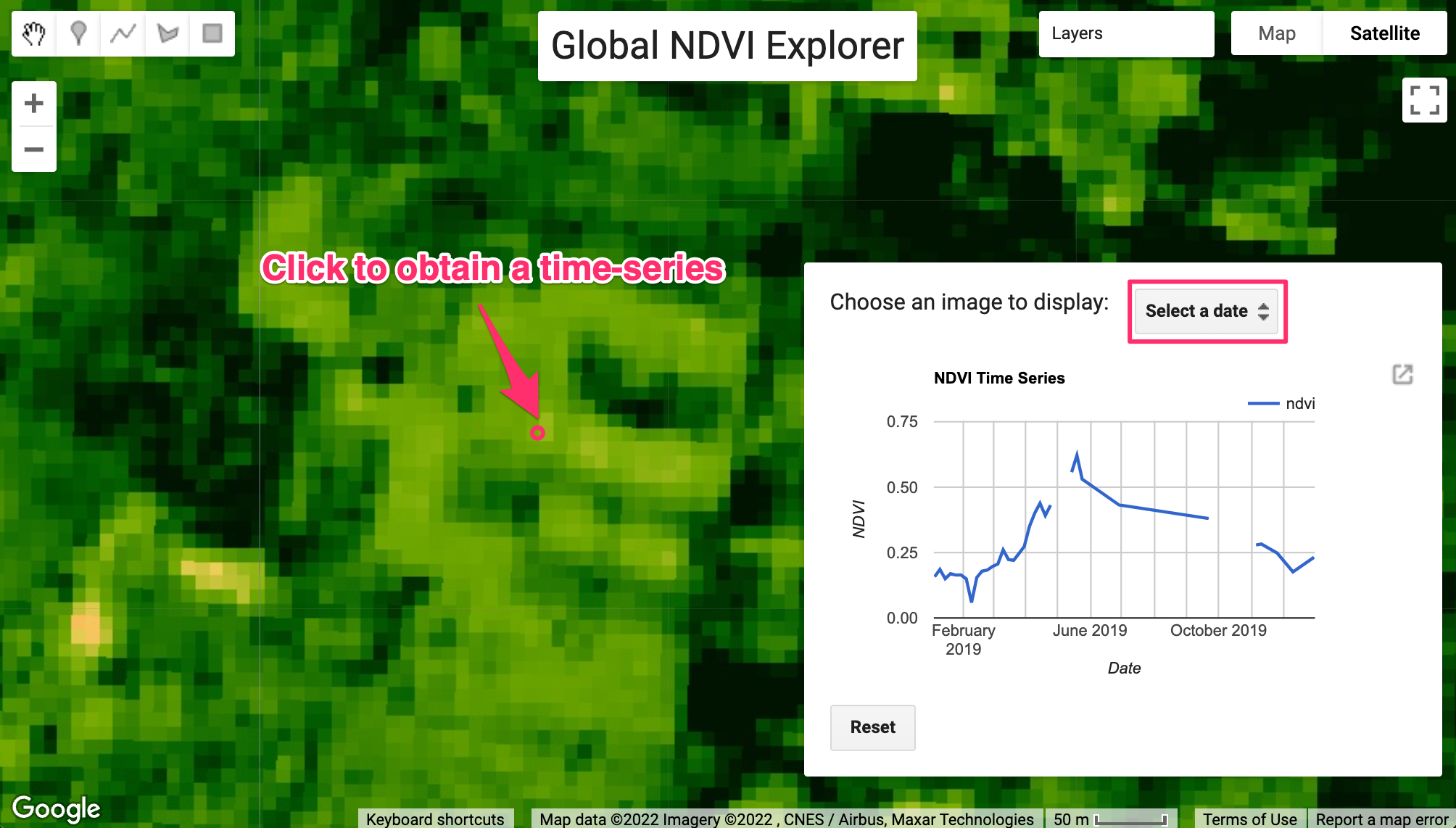

- Time Series Modeling

- Using SAR data

- Adding Spatial Context

- Advanced Change Detection Techniques

- Image Collection Processing

- Aggregating and Visualizing ImageCollections

- Exporting ImageCollections

- Create Composites at Regular Intervals

- Export ImageCollection Metadata

- Get Pixelwise Dates for Composites

- Filter Images by Cloud Cover in a Region

- Harmonized Landsat Time Series

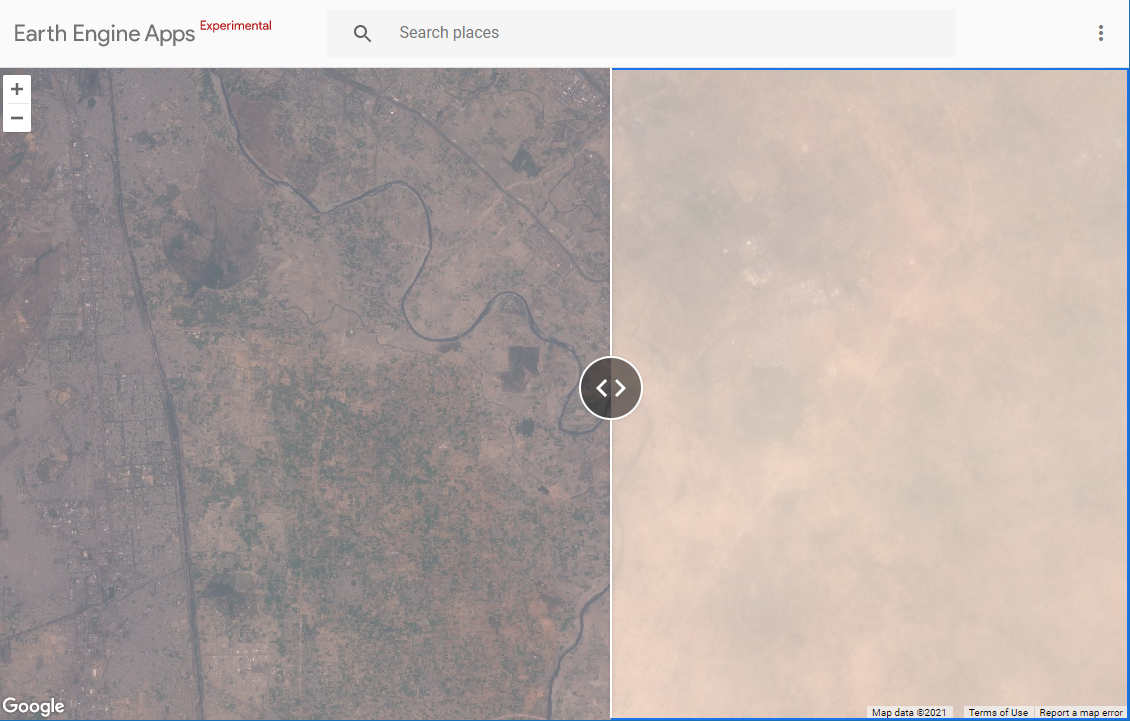

- Landsat Timelapse Animation with Text

- Percentile Composites

- Medoid Composites

- Visualize Number of Images in Composites

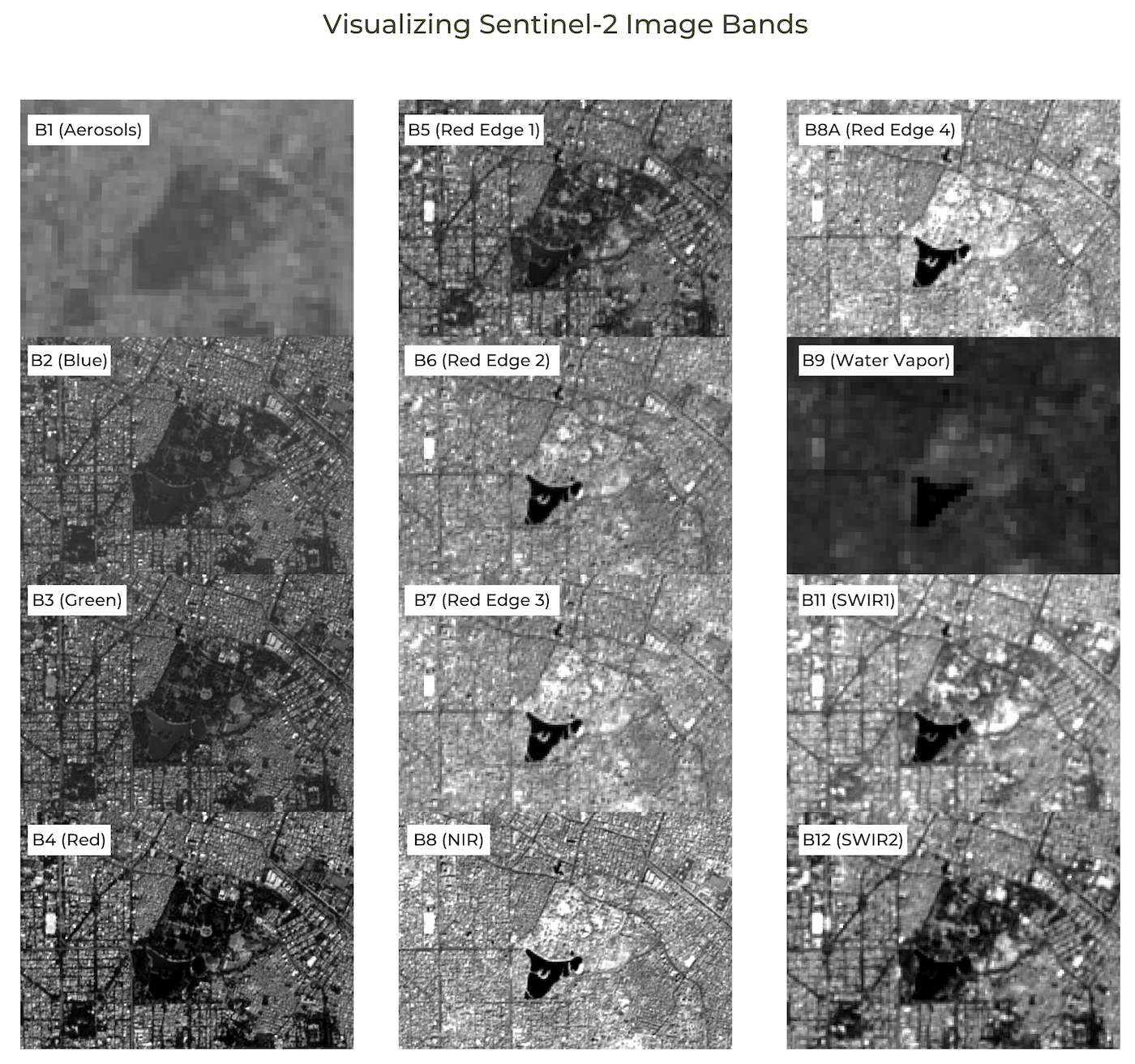

- Visualizing Bands of an Image

- Advanced Image Processing

- User Interface Templates

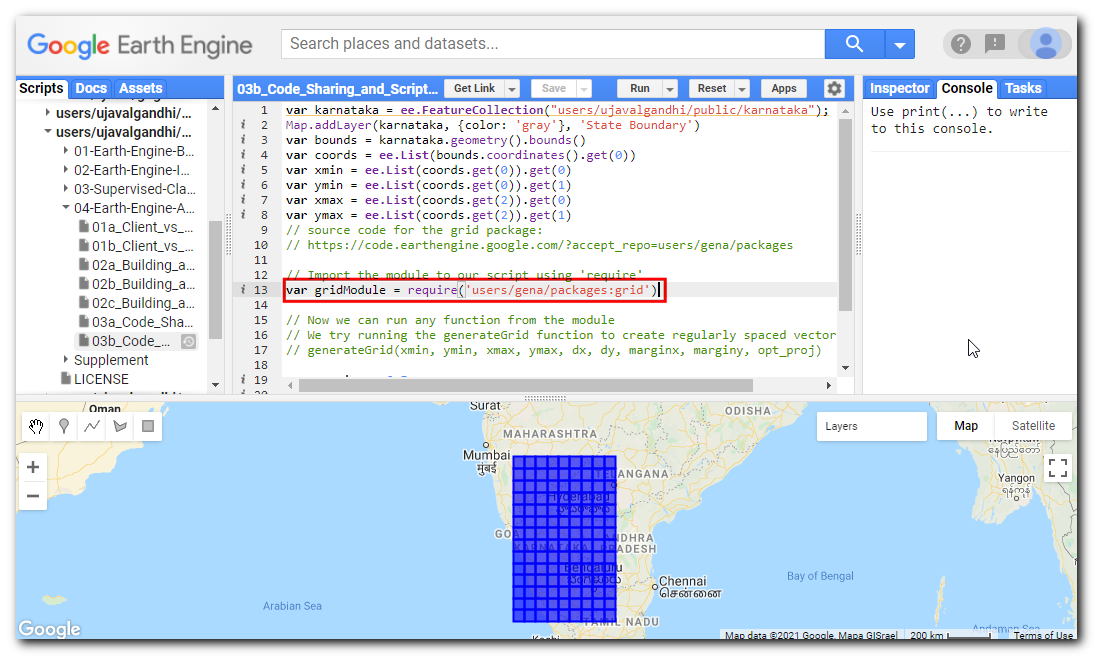

- Code Sharing and Script Modules

- License

- Citing and Referencing

Introduction

This page contains Supplementary Materials for the End-to-End Google Earth Engine course. It is a collection of useful scripts and code snippets that can be adapted for your projects.

Please visit the End-to-End Google Earth Engine course page for the full course material.

Advanced Supervised Classification Techniques

k-Fold Cross Validation

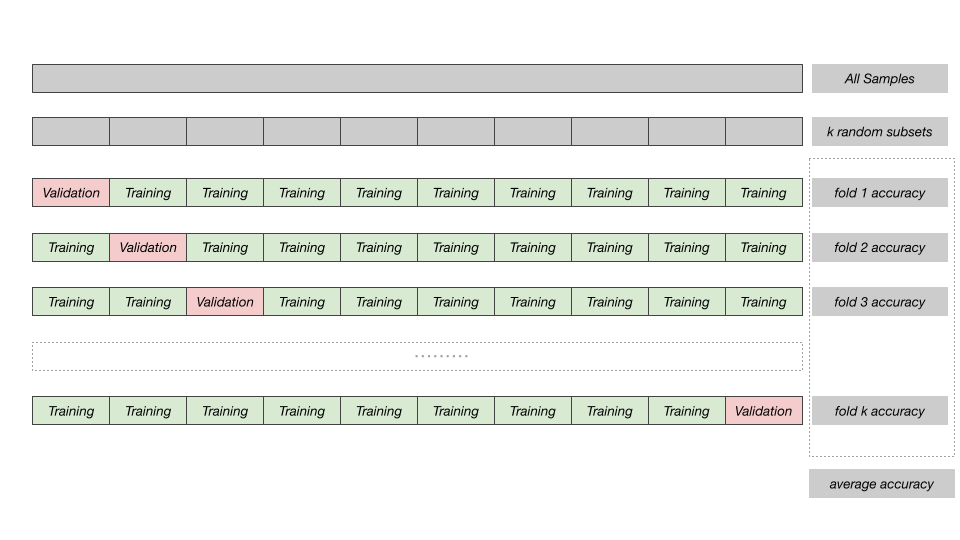

In a regular accuracy assessment, we split the samples into two fractions: training and validation. In k-fold cross validation, the data is split into k subsets (i.e., folds). Each fold is used once as the validation set while the model is trained on the remaining folds. The accuracy metric from each validation step is then averaged to produce a more robust estimate of the model’s performance.
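The splitting logic can be illustrated outside Earth Engine with a minimal plain-JavaScript sketch. It assumes each sample already carries a random value in [0, 1), similar to what randomColumn() adds. The kFoldSplit helper and the sample values here are hypothetical and are not part of the course script.

```javascript
// Minimal sketch of k-fold splitting in plain JavaScript (not Earth Engine).
// Each sample has a 'random' value in [0, 1). Fold i validates on samples
// whose value falls in [i/k, (i+1)/k) and trains on everything else.
function kFoldSplit(samples, k) {
  var folds = [];
  for (var i = 0; i < k; i++) {
    var training = [];
    var validation = [];
    samples.forEach(function(sample) {
      // Index of the fold that holds this sample out for validation.
      var foldIndex = Math.min(Math.floor(sample.random * k), k - 1);
      if (foldIndex === i) {
        validation.push(sample);
      } else {
        training.push(sample);
      }
    });
    folds.push({training: training, validation: validation});
  }
  return folds;
}

// Hypothetical samples with pre-assigned random values.
var samples = [
  {id: 'a', random: 0.05}, {id: 'b', random: 0.25},
  {id: 'c', random: 0.45}, {id: 'd', random: 0.65},
  {id: 'e', random: 0.85}, {id: 'f', random: 0.95}
];
var folds = kFoldSplit(samples, 5);

// Every sample should be held out exactly once across the k folds.
var heldOut = folds.reduce(function(total, fold) {
  return total + fold.validation.length;
}, 0);
console.log(heldOut === samples.length);
```

Because the validation sets do not overlap, averaging the per-fold accuracies uses every sample for validation exactly once.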

k-Fold Cross-Validation

// Example script for k-Fold Cross Validation

// of Supervised Classification

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

// Function to split a featurecollection into k-folds

var kFoldSplit = function(features, folds) {

var step = ee.Number(1).divide(folds);

var thresholds = ee.List.sequence(

0, ee.Number(1).subtract(step), step);

// Use seed so the distribution is stable across

// all calls of the function

features = features.randomColumn({seed: 0});

var splits = thresholds.map(function (threshold) {

var trainingSplit = features.filter(

ee.Filter.or(

ee.Filter.lt('random', threshold),

ee.Filter.gte('random', ee.Number(threshold).add(step))

)

);

var validationSplit = features.filter(

ee.Filter.and(

ee.Filter.gte('random', threshold),

ee.Filter.lt('random', ee.Number(threshold).add(step))

)

);

return ee.Feature(null, {

'training': trainingSplit,

'validation': validationSplit

});

});

return ee.FeatureCollection(splits);

};

// Use k=10

var folds = kFoldSplit(gcps, 10);

// Check the split

print('Fold 0 Training Samples', folds.first().get('training'));

print('Fold 0 Validation Samples', folds.first().get('validation'));

// Assess the accuracy for each pair of training and validation

var accuracies = ee.FeatureCollection(folds.map(function(fold) {

var trainingGcp = fold.get('training');

var validationGcp = fold.get('validation');

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

var test = composite.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

var accuracy = test

.classify(classifier)

.errorMatrix('landcover', 'classification')

.accuracy();

return ee.Feature(null, {'accuracy': accuracy});

}));

print('K-fold Validation Results',

accuracies.aggregate_array('accuracy'));

var meanAccuracy = accuracies.reduceColumns({

reducer: ee.Reducer.mean(),

selectors: ['accuracy']});

print('Mean Accuracy', meanAccuracy.get('mean'));

Hyperparameter Tuning

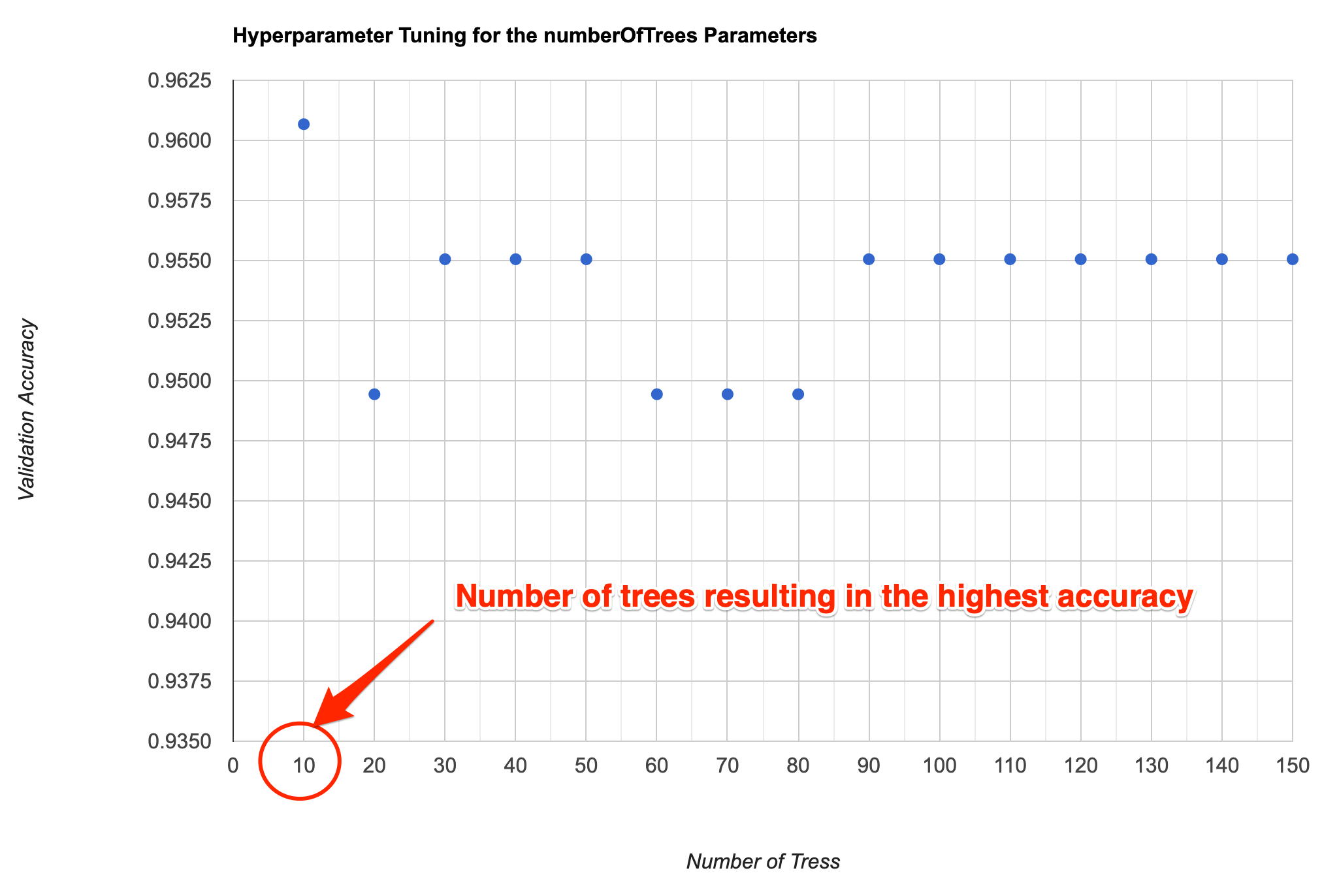

A recommended best practice for improving the accuracy of your machine learning model is to tune its parameters. For example, when using the ee.Classifier.smileRandomForest() classifier, we must specify the number of trees. A higher number of trees requires more computation but does not necessarily produce better results. Instead of guessing, we programmatically try a range of values and choose the smallest value that results in the highest accuracy.
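The selection rule described above (smallest value that reaches the highest accuracy) can be sketched in plain JavaScript. The pickSmallestBest helper, the tolerance argument, and the accuracy numbers are assumptions made for illustration and do not come from the course script.

```javascript
// Sketch: pick the smallest parameter value that reaches the best accuracy.
// The accuracy values below are made up for illustration.
function pickSmallestBest(results, tolerance) {
  var best = Math.max.apply(null, results.map(function(r) {
    return r.accuracy;
  }));
  // Keep candidates within 'tolerance' of the best accuracy,
  // then choose the one with the fewest trees.
  var candidates = results.filter(function(r) {
    return best - r.accuracy <= tolerance;
  });
  candidates.sort(function(a, b) {
    return a.numberOfTrees - b.numberOfTrees;
  });
  return candidates[0];
}

var results = [
  {numberOfTrees: 10, accuracy: 0.88},
  {numberOfTrees: 20, accuracy: 0.91},
  {numberOfTrees: 30, accuracy: 0.93},
  {numberOfTrees: 40, accuracy: 0.93},
  {numberOfTrees: 50, accuracy: 0.92}
];

// With zero tolerance, ties at the top accuracy resolve to fewer trees.
var chosen = pickSmallestBest(results, 0);
console.log(chosen.numberOfTrees);
```

A small non-zero tolerance trades a negligible loss in accuracy for a cheaper model, which may be preferable when the classification covers a large region.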

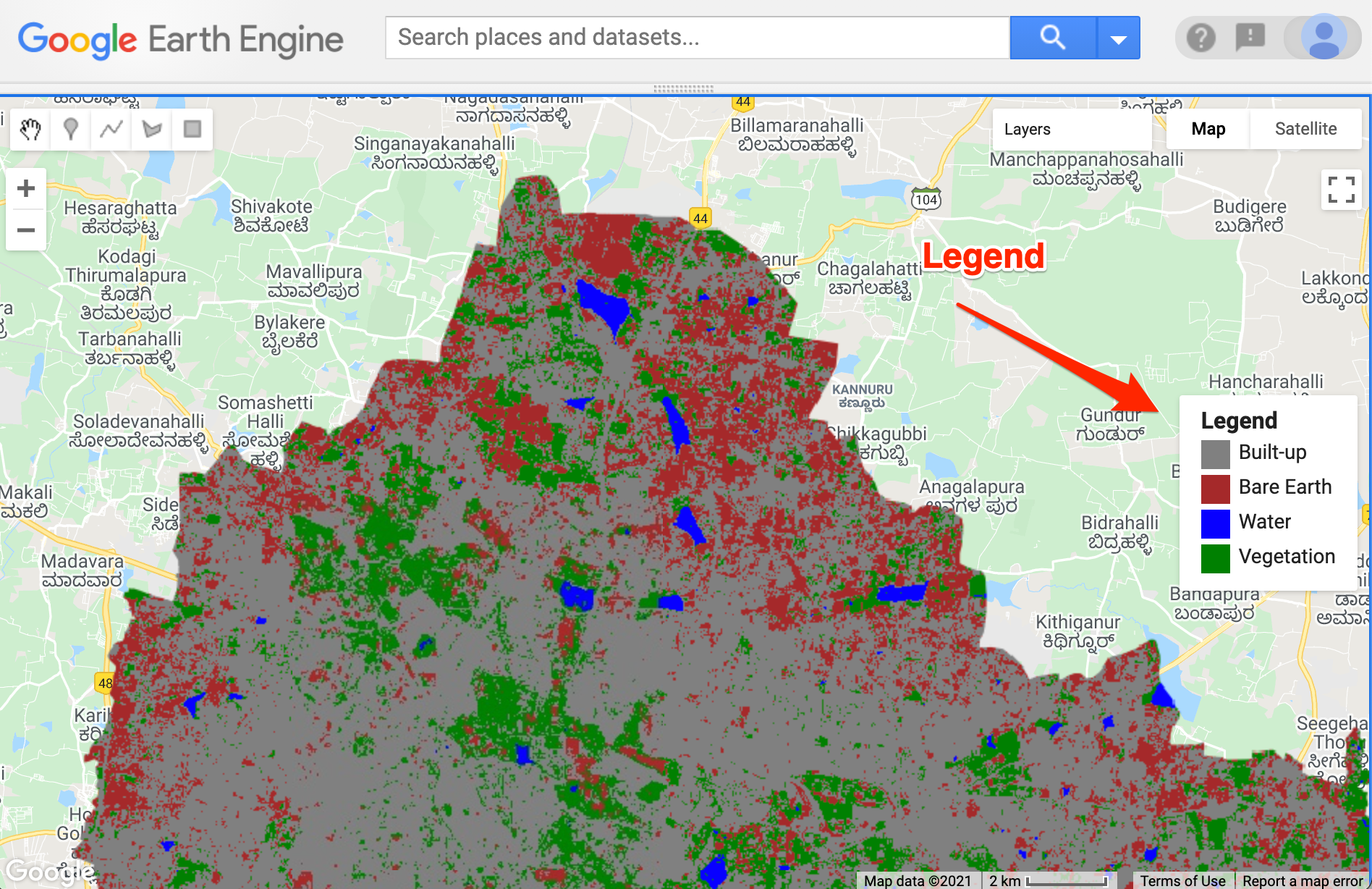

Supervised Classification Output

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

// Add a random column and split the GCPs into training and validation set

gcps = gcps.randomColumn();

// This being a simpler classification, we take 60% points

// for training. Normal recommended ratio is

// 70% training, 30% validation

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

print(training);

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

//**************************************************************************

// Feature Importance

//**************************************************************************

// Run .explain() to see what the classifier looks like

print(classifier.explain());

// Calculate variable importance

var importance = ee.Dictionary(classifier.explain().get('importance'));

// Calculate relative importance

var sum = importance.values().reduce(ee.Reducer.sum());

var relativeImportance = importance.map(function(key, val) {

return (ee.Number(val).multiply(100)).divide(sum);

});

print(relativeImportance);

// Create a FeatureCollection so we can chart it

var importanceFc = ee.FeatureCollection([

ee.Feature(null, relativeImportance)

]);

var chart = ui.Chart.feature.byProperty({

features: importanceFc

}).setOptions({

title: 'Feature Importance',

vAxis: {title: 'Importance'},

hAxis: {title: 'Feature'}

});

print(chart);

//**************************************************************************

// Hyperparameter Tuning

//**************************************************************************

var test = composite.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

// Tune the numberOfTrees parameter.

var numTreesList = ee.List.sequence(10, 150, 10);

var accuracies = numTreesList.map(function(numTrees) {

var classifier = ee.Classifier.smileRandomForest(numTrees)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Here we are classifying a table instead of an image

// Classifiers work on both images and tables

return test

.classify(classifier)

.errorMatrix('landcover', 'classification')

.accuracy();

});

var chart = ui.Chart.array.values({

array: ee.Array(accuracies),

axis: 0,

xLabels: numTreesList

}).setOptions({

title: 'Hyperparameter Tuning for the numberOfTrees Parameter',

vAxis: {title: 'Validation Accuracy'},

hAxis: {title: 'Number of Trees', gridlines: {count: 15}}

});

print(chart);

// Tuning Multiple Parameters

// We can tune many parameters together using

// nested map() functions

// Let's tune 2 parameters

// numTrees and bagFraction

var numTreesList = ee.List.sequence(10, 150, 10);

var bagFractionList = ee.List.sequence(0.1, 0.9, 0.1);

var accuracies = numTreesList.map(function(numTrees) {

return bagFractionList.map(function(bagFraction) {

var classifier = ee.Classifier.smileRandomForest({

numberOfTrees: numTrees,

bagFraction: bagFraction

})

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Here we are classifying a table instead of an image

// Classifiers work on both images and tables

var accuracy = test

.classify(classifier)

.errorMatrix('landcover', 'classification')

.accuracy();

return ee.Feature(null, {'accuracy': accuracy,

'numberOfTrees': numTrees,

'bagFraction': bagFraction});

});

}).flatten();

var resultFc = ee.FeatureCollection(accuracies);

// Export the result as CSV

Export.table.toDrive({

collection: resultFc,

description: 'Multiple_Parameter_Tuning_Results',

folder: 'earthengine',

fileNamePrefix: 'numtrees_bagfraction',

fileFormat: 'CSV'});

// Alternatively we can automatically pick the parameters

// that result in the highest accuracy

var resultFcSorted = resultFc.sort('accuracy', false);

var highestAccuracyFeature = resultFcSorted.first();

var highestAccuracy = highestAccuracyFeature.getNumber('accuracy');

var optimalNumTrees = highestAccuracyFeature.getNumber('numberOfTrees');

var optimalBagFraction = highestAccuracyFeature.getNumber('bagFraction');

// Use the optimal parameters in a model and perform final classification

var optimalModel = ee.Classifier.smileRandomForest({

numberOfTrees: optimalNumTrees,

bagFraction: optimalBagFraction

}).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

var finalClassification = composite.classify(optimalModel);

// Printing or Displaying the image may time out as it requires

// extensive computation to find the optimal parameters

// Export the 'finalClassification' to Asset and import the

// result to view it.

Post-Processing Classification Results

Supervised classification results often contain salt-and-pepper noise caused by mis-classified pixels. It is usually preferable to apply some post-processing techniques to remove such noise. The following script contains the code for two popular techniques for post-processing classification results.

- Using unsupervised clustering to replace each classified value with the majority value in its cluster.

- Replacing isolated pixels with the surrounding value using a majority filter.

Remember that the neighborhood methods are scale-dependent so the results will change as you zoom in/out. Export the results at the desired scale to see the effect of post-processing.
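To make the majority filter concrete, here is a minimal plain-JavaScript sketch that applies a 3x3 mode filter to a small grid of class values. It is a simplification: it filters every pixel rather than only small patches, and the majorityFilter helper and the grid values are hypothetical.

```javascript
// Sketch of a 3x3 majority (mode) filter on a small class grid.
// Edge pixels use whatever neighbours exist inside the grid.
function majorityFilter(grid) {
  var rows = grid.length;
  var cols = grid[0].length;
  return grid.map(function(row, r) {
    return row.map(function(value, c) {
      var counts = {};
      for (var dr = -1; dr <= 1; dr++) {
        for (var dc = -1; dc <= 1; dc++) {
          var rr = r + dr;
          var cc = c + dc;
          if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) continue;
          var v = grid[rr][cc];
          counts[v] = (counts[v] || 0) + 1;
        }
      }
      // Pick the most frequent class; on a tie keep the original value.
      var bestClass = value;
      var bestCount = counts[value];
      Object.keys(counts).forEach(function(key) {
        if (counts[key] > bestCount) {
          bestClass = Number(key);
          bestCount = counts[key];
        }
      });
      return bestClass;
    });
  });
}

// Hypothetical 4x4 classification with one isolated pixel (class 2).
var classifiedGrid = [
  [1, 1, 1, 1],
  [1, 2, 1, 1],
  [1, 1, 1, 3],
  [1, 1, 3, 3]
];
var smoothedGrid = majorityFilter(classifiedGrid);
console.log(smoothedGrid);
```

The isolated class 2 pixel is replaced by its surrounding class, while the larger class 3 patch in the corner survives.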

// Sentinel-2 Median Composite

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(composite, rgbVis, 'Composite');

// Raw Supervised Classification Results

var classified = ee.Image('projects/spatialthoughts/assets/e2e/s2_classification');

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified, {min: 0, max: 3, palette: palette}, 'Classification');

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry, 14);

//**************************************************************************

// Post process by clustering

//**************************************************************************

// Cluster using Unsupervised Clustering methods

var seeds = ee.Algorithms.Image.Segmentation.seedGrid(5);

var snic = ee.Algorithms.Image.Segmentation.SNIC({

image: composite.select('B.*'),

compactness: 0,

connectivity: 4,

neighborhoodSize: 10,

size: 2,

seeds: seeds

});

var clusters = snic.select('clusters');

// Assign class to each cluster based on 'majority' voting (using ee.Reducer.mode())

var smoothed = classified.addBands(clusters);

var clusterMajority = smoothed.reduceConnectedComponents({

reducer: ee.Reducer.mode(),

labelBand: 'clusters'

});

Map.addLayer(clusterMajority, {min: 0, max: 3, palette: palette},

'Processed using Clusters');

//**************************************************************************

// Post process by replacing isolated pixels with surrounding value

//**************************************************************************

// count patch sizes

var patchsize = classified.connectedPixelCount(40, false);

// run a majority filter

var filtered = classified.focal_mode({

radius: 10,

kernelType: 'square',

units: 'meters',

});

// updated image with majority filter where patch size is small

var connectedClassified = classified.where(patchsize.lt(25),filtered);

Map.addLayer(connectedClassified, {min: 0, max: 3, palette: palette},

'Processed using Connected Pixels');

//**************************************************************************

// Export the Results

//**************************************************************************

// Pixel-based methods are scale dependent

// Zooming in or out will change the results

// Export the results at the required scale to see the final result.

Export.image.toDrive({

image: clusterMajority,

description: 'Post_Processed_with_Clusters',

folder: 'earthengine',

fileNamePrefix: 'classification_clusters',

region: geometry,

scale: 10,

maxPixels: 1e10

});

Export.image.toDrive({

image: connectedClassified,

description: 'Post_Processed_with_ConnectedPixels',

folder: 'earthengine',

fileNamePrefix: 'classification_connected',

region: geometry,

scale: 10,

maxPixels: 1e10

});

Principal Component Analysis (PCA)

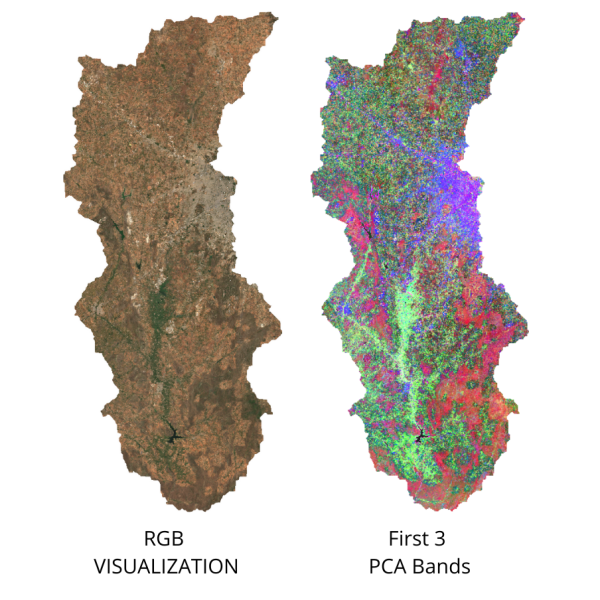

PCA is a useful technique for improving supervised classification results. It is a statistical technique that compresses data from a large number of bands into fewer uncorrelated bands. You can run PCA on your image and add the first few (typically 3) principal component bands to the original composite before sampling training points. In the example below, you will notice that 97% of the variance from the 13-band original image is captured in the 3-band PCA image. This gives the classifier a more compact, less redundant signal, which can improve accuracy by helping it distinguish different classes.
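The "percentage of variance captured" figure comes from the eigenvalues of the band covariance matrix: each eigenvalue divided by their sum. The plain-JavaScript sketch below shows that arithmetic. The eigenvalues are made up, and percentVariance and componentsNeeded are hypothetical helper names.

```javascript
// Sketch: percentage of variance explained by each principal component,
// computed from eigenvalues of the covariance matrix.
// The eigenvalues below are made up for illustration.
function percentVariance(eigenValues) {
  var total = eigenValues.reduce(function(a, b) { return a + b; }, 0);
  return eigenValues.map(function(value) {
    return (value / total) * 100;
  });
}

// Number of leading components needed to reach a cumulative threshold (%).
function componentsNeeded(eigenValues, threshold) {
  var total = eigenValues.reduce(function(a, b) { return a + b; }, 0);
  var cumulative = 0;
  for (var i = 0; i < eigenValues.length; i++) {
    cumulative += eigenValues[i];
    if (cumulative * 100 >= threshold * total) return i + 1;
  }
  return eigenValues.length;
}

var eigenValues = [60, 25, 10, 3, 2]; // sorted, largest first
console.log(percentVariance(eigenValues));
console.log(componentsNeeded(eigenValues, 90));
```

In practice you would read the percentages from the properties set on the PCA image and keep only as many components as needed to cross your chosen threshold.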

// Script showing how to do Principal Component Analysis on images

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

// Display the original image

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(composite, rgbVis, 'Composite');

// Define the geometry and scale parameters

var geometry = boundary.geometry();

var scale = 20;

// Run the PCA function

var pca = PCA(composite);

// Extract the properties of the pca image

var variance = pca.toDictionary();

print('Variance of Principal Components', variance);

// As you see from the printed results, ~97% of the variance

// from the original image is captured in the first 3 principal components

// We select those and discard others

var pca = PCA(composite).select(['pc1', 'pc2', 'pc3']);

print('First 3 PCA Bands', pca);

// PCA computation is expensive and can time out when displaying on the map

// Export the results and import them back

// Replace this with your asset folder

// The folder must exist before exporting

var exportFolder = 'projects/spatialthoughts/assets/e2e/';

// Export Composite

var pcaExportImage = 'composite_pca';

var pcaExportImagePath = exportFolder + pcaExportImage;

Export.image.toAsset({

image: pca,

description: 'Principal_Components_Export',

assetId: pcaExportImagePath,

region: geometry,

scale: scale,

maxPixels: 1e10

});

// Wait for the export to finish

// Once the export finishes, import the asset and display

var pca = ee.Image(pcaExportImagePath);

var pcaVisParams = {bands: ['pc1', 'pc2', 'pc3'], min: -2, max: 2};

Map.addLayer(pca, pcaVisParams, 'Principal Components');

//**************************************************************************

// Function to calculate Principal Components

// Code adapted from https://developers.google.com/earth-engine/guides/arrays_eigen_analysis

//**************************************************************************

function PCA(maskedImage){

var image = maskedImage.unmask();

// 'scale' refers to the variable defined at the top of the script

var region = geometry;

var bandNames = image.bandNames();

// Mean center the data to enable a faster covariance reducer

// and an SD stretch of the principal components.

var meanDict = image.reduceRegion({

reducer: ee.Reducer.mean(),

geometry: region,

scale: scale,

maxPixels: 1e13,

tileScale: 16

});

var means = ee.Image.constant(meanDict.values(bandNames));

var centered = image.subtract(means);

// This helper function returns a list of new band names.

var getNewBandNames = function(prefix) {

var seq = ee.List.sequence(1, bandNames.length());

return seq.map(function(b) {

return ee.String(prefix).cat(ee.Number(b).int());

});

};

// This function accepts mean centered imagery, a scale and

// a region in which to perform the analysis. It returns the

// Principal Components (PC) in the region as a new image.

var getPrincipalComponents = function(centered, scale, region) {

// Collapse the bands of the image into a 1D array per pixel.

var arrays = centered.toArray();

// Compute the covariance of the bands within the region.

var covar = arrays.reduceRegion({

reducer: ee.Reducer.centeredCovariance(),

geometry: region,

scale: scale,

maxPixels: 1e13,

tileScale: 16

});

// Get the 'array' covariance result and cast to an array.

// This represents the band-to-band covariance within the region.

var covarArray = ee.Array(covar.get('array'));

// Perform an eigen analysis and slice apart the values and vectors.

var eigens = covarArray.eigen();

// This is a P-length vector of Eigenvalues.

var eigenValues = eigens.slice(1, 0, 1);

// Compute Percentage Variance of each component

// This will allow us to decide how many components capture

// most of the variance in the input

var eigenValuesList = eigenValues.toList().flatten()

var total = eigenValuesList.reduce(ee.Reducer.sum())

var percentageVariance = eigenValuesList.map(function(item) {

var component = eigenValuesList.indexOf(item).add(1).format('%02d')

var variance = ee.Number(item).divide(total).multiply(100).format('%.2f')

return ee.List([component, variance])

})

// Create a dictionary that will be used to set properties on final image

var varianceDict = ee.Dictionary(percentageVariance.flatten())

// This is a PxP matrix with eigenvectors in rows.

var eigenVectors = eigens.slice(1, 1);

// Convert the array image to 2D arrays for matrix computations.

var arrayImage = arrays.toArray(1);

// Left multiply the image array by the matrix of eigenvectors.

var principalComponents = ee.Image(eigenVectors).matrixMultiply(arrayImage);

// Turn the square roots of the Eigenvalues into a P-band image.

// Call abs() to turn negative eigenvalues to positive before

// taking the square root

var sdImage = ee.Image(eigenValues.abs().sqrt())

.arrayProject([0]).arrayFlatten([getNewBandNames('sd')]);

// Turn the PCs into a P-band image, normalized by SD.

return principalComponents

// Throw out an unneeded dimension, [[]] -> [].

.arrayProject([0])

// Make the one band array image a multi-band image, [] -> image.

.arrayFlatten([getNewBandNames('pc')])

// Normalize the PCs by their SDs.

.divide(sdImage)

.set(varianceDict);

};

var pcImage = getPrincipalComponents(centered, scale, region);

return pcImage.mask(maskedImage.mask());

}

Multi-temporal Composites for Crop Classification

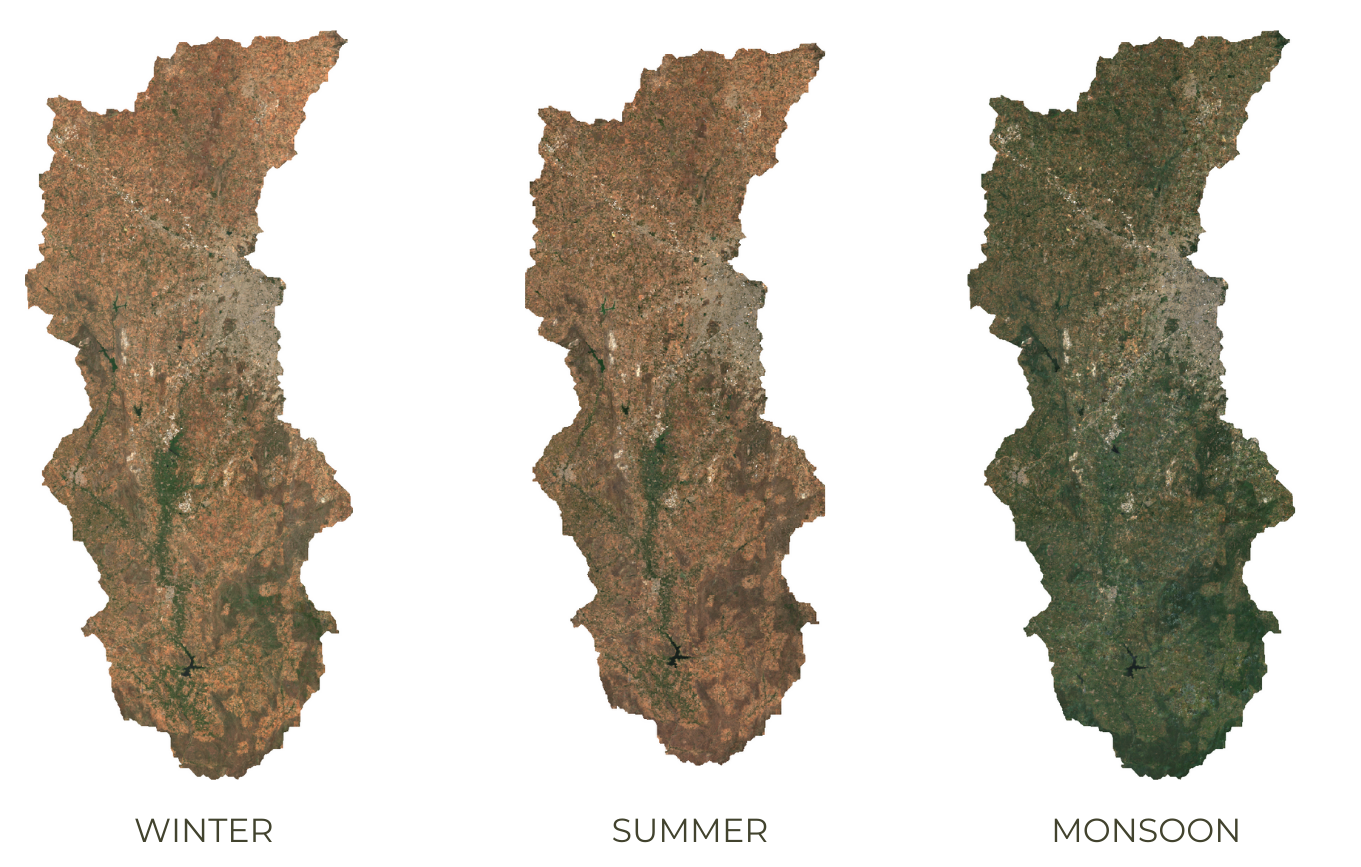

Crop classification is a difficult problem. A useful technique that aids in clear distinction of crops is to account for crop phenology. This technique can be applied to detect a specific type of crop or distinguish crops from other forms of vegetation. You can create composite images for different periods of the cropping cycle and create a stacked image to be used for classification. This allows the classifier to learn the temporal pattern and detect pixels that exhibit similar patterns.
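As a rough illustration of why stacking seasonal composites helps, the plain-JavaScript sketch below builds a per-pixel feature vector from seasonal medians. To keep it short it uses a single hypothetical NDVI value per observation rather than all spectral bands. The seasonalFeatures helper and all values are assumptions for illustration.

```javascript
// Sketch: build a per-pixel multi-temporal feature vector from
// seasonal medians. Observations and season ranges are hypothetical.
function median(values) {
  var sorted = values.slice().sort(function(a, b) { return a - b; });
  var mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

// seasons: list of {name, start, end} with inclusive month ranges.
function seasonalFeatures(observations, seasons) {
  var features = {};
  seasons.forEach(function(season) {
    var values = observations
      .filter(function(obs) {
        return obs.month >= season.start && obs.month <= season.end;
      })
      .map(function(obs) { return obs.ndvi; });
    // One feature per season; null when no clear observation exists.
    features['ndvi_' + season.name] = values.length ? median(values) : null;
  });
  return features;
}

var seasons = [
  {name: 'rabi', start: 1, end: 3},
  {name: 'summer', start: 4, end: 6},
  {name: 'kharif', start: 7, end: 12}
];

// NDVI observations for a single hypothetical crop pixel.
var observations = [
  {month: 1, ndvi: 0.6}, {month: 2, ndvi: 0.7}, {month: 3, ndvi: 0.5},
  {month: 5, ndvi: 0.2}, {month: 8, ndvi: 0.4}, {month: 10, ndvi: 0.8}
];
var pixelFeatures = seasonalFeatures(observations, seasons);
console.log(pixelFeatures);
```

A cropped pixel shows a high-low-high pattern across the three seasons, whereas an evergreen forest pixel would stay high throughout, so the stacked features separate the two even when a single-date image would not.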

Capturing Crop Phenology through Seasonal Composites

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

// There are 3 distinct crop seasons in the area of interest

// Jan-March = Winter (Rabi) Crops

// April-June = Summer Crops / Harvest

// July-December = Monsoon (Kharif) Crops

var cropCalendar = ee.List([[1,3], [4,6], [7,12]]);

// We create different composites for each season

var createSeasonComposites = function(months) {

var startMonth = ee.List(months).get(0);

var endMonth = ee.List(months).get(1);

var monthFilter = ee.Filter.calendarRange(startMonth, endMonth, 'month');

var seasonFiltered = filteredMasked.filter(monthFilter);

var composite = seasonFiltered.median();

return composite.select('B.*');

};

var compositeList = cropCalendar.map(createSeasonComposites);

var rabi = ee.Image(compositeList.get(0));

var harvest = ee.Image(compositeList.get(1));

var kharif = ee.Image(compositeList.get(2));

var visParams = {bands: ['B4', 'B3', 'B2'], min: 0, max: 3000, gamma: 1.2};

Map.addLayer(rabi.clip(geometry), visParams, 'Rabi');

Map.addLayer(harvest.clip(geometry), visParams, 'Harvest');

Map.addLayer(kharif.clip(geometry), visParams, 'Kharif');

// Create a stacked image with composites from all seasons

// This multi-temporal image is able capture the crop phenology

// Classifier will be able to detect crop-pixels from non-crop pixels

var composite = rabi.addBands(harvest).addBands(kharif);

// This is a 36-band image

// Use this image for sampling training points for

// to train a crop classifier

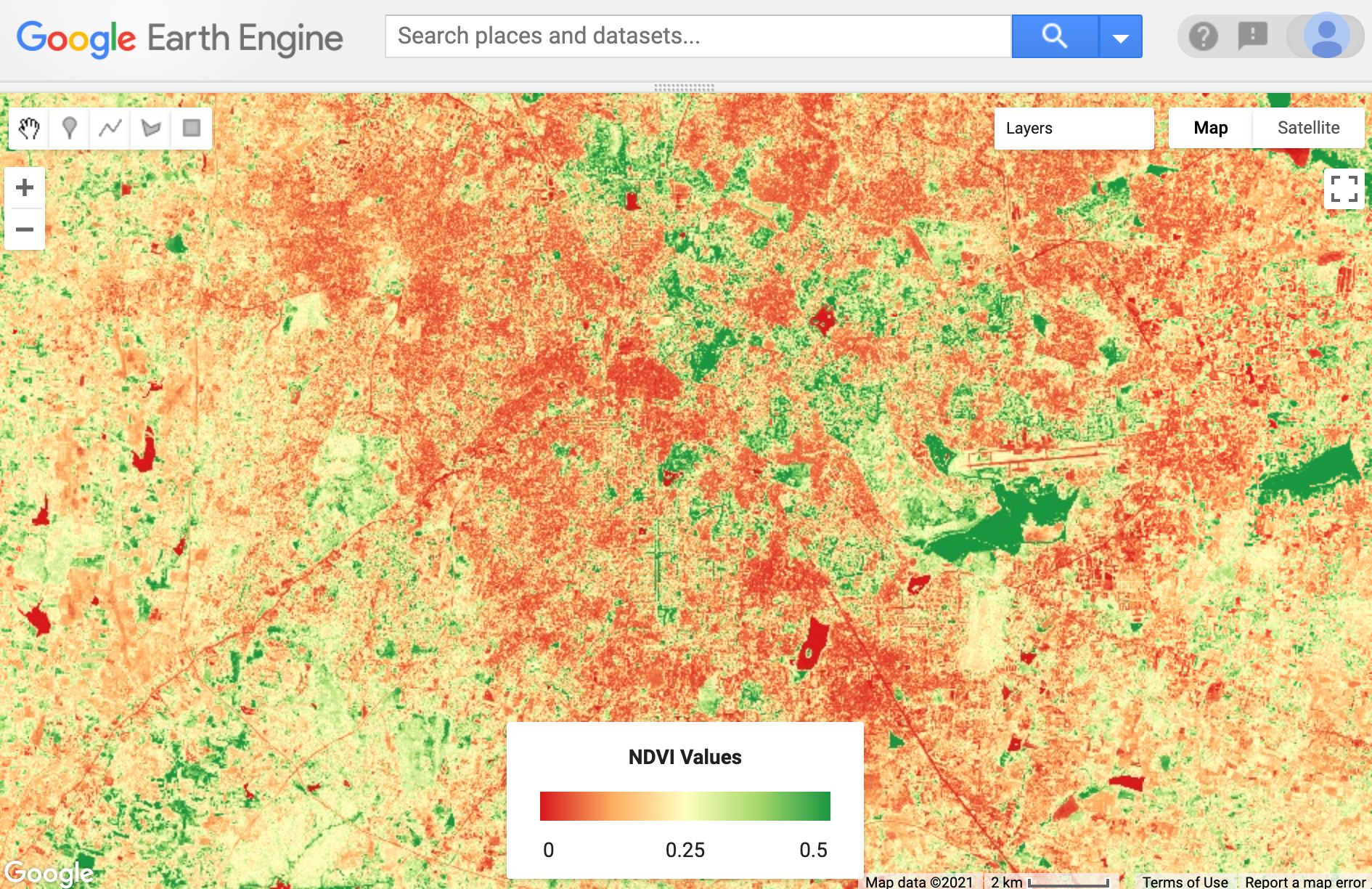

print(composite);Computing Correlation

A useful technique to aid crop classification is to model the correlation between precipitation and changes in vegetation. This allows the model to capture differentiated responses to rainfall (e.g., rain-fed crops vs. permanent forests). We first prepare an image collection where each image consists of 2 bands: cumulative rainfall for a month and average NDVI for the next month. This creates 11 images per year which show precipitation and 1-month lagged NDVI at each pixel. The collection is then reduced using ee.Reducer.pearsonsCorrelation(), which outputs a correlation band. Positive values show regions where NDVI increased following precipitation. Adding this band to your input image for classification can help the classifier separate different types of vegetation.
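For a single pixel, the lagged pairing and the Pearson coefficient can be sketched in plain JavaScript as follows. The monthly rainfall and NDVI values are invented, and laggedPairs and pearson are hypothetical helpers that only mirror the idea, not Earth Engine's reducer implementation.

```javascript
// Sketch: Pearson correlation between monthly rainfall and the
// following month's NDVI for a single pixel. Values are hypothetical.
function pearson(x, y) {
  var n = x.length;
  var meanX = x.reduce(function(a, b) { return a + b; }, 0) / n;
  var meanY = y.reduce(function(a, b) { return a + b; }, 0) / n;
  var covXY = 0;
  var varX = 0;
  var varY = 0;
  for (var i = 0; i < n; i++) {
    var dx = x[i] - meanX;
    var dy = y[i] - meanY;
    covXY += dx * dy;
    varX += dx * dx;
    varY += dy * dy;
  }
  return covXY / Math.sqrt(varX * varY);
}

// Pair month m rainfall with month m+1 NDVI: 12 months give 11 pairs.
function laggedPairs(rainfall, ndvi) {
  return {
    rain: rainfall.slice(0, rainfall.length - 1),
    ndvi: ndvi.slice(1)
  };
}

var monthlyRain = [5, 2, 8, 20, 60, 150, 300, 250, 180, 90, 30, 10];
var monthlyNdvi = [0.30, 0.28, 0.25, 0.27, 0.32, 0.40,
                   0.55, 0.75, 0.80, 0.72, 0.55, 0.40];

var pairs = laggedPairs(monthlyRain, monthlyNdvi);
var r = pearson(pairs.rain, pairs.ndvi);
console.log(pairs.rain.length, r.toFixed(2));
```

A pixel like this one, where greenness tracks the previous month's rain, yields a coefficient close to 1. A pixel with stable NDVI all year would yield a value near 0.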

// Calculate Rainfall-NDVI Correlation

// We want to know whether there exists a correlation between

// rainfall and NDVI

// We build a collection containing monthly total rainfall for a year

// and the next month's average NDVI.

// We then use ee.Reducer.pearsonsCorrelation() to compute pixel-wise

// correlation between rainfall and NDVI response.

// Positive values will indicate vegetation growth in response to

// precipitation and generally rainfed agriculture.

var geometry = ee.Geometry.Point([75.71168046831512, 13.30751919691132]);

Map.centerObject(geometry, 10);

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.date('2020-01-01', '2021-01-01'))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

// Write a function that computes NDVI for an image and adds it as a band

function addNDVI(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename('ndvi');

return image.addBands(ndvi);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var filteredWithNdvi = filteredMasked

.map(addNDVI);

var composite = filteredWithNdvi.median();

var rgbVis = {bands: ['B4', 'B3', 'B2'], min: 0, max: 3000, gamma: 1.2};

Map.addLayer(composite, rgbVis, 'Composite') ;

// Rainfall

var chirps = ee.ImageCollection("UCSB-CHG/CHIRPS/PENTAD");

var chirpsFiltered = chirps

.filter(ee.Filter.date('2020-01-01', '2021-01-01'));

// Create a collection of monthly images

var months = ee.List.sequence(1, 11);

var byMonth = months.map(function(month) {

// Total monthly rainfall

var monthlyRain = chirpsFiltered

.filter(ee.Filter.calendarRange(month, month, 'month'));

var totalRain = monthlyRain.sum();

// Next month's average NDVI

var nextMonth = ee.Number(month).add(1);

var monthly = filteredWithNdvi

.filter(ee.Filter.calendarRange(nextMonth, nextMonth, 'month'));

var medianComposite = monthly.median().select('ndvi');

return totalRain.addBands(medianComposite).set({'month': month});

});

var monthlyCol = ee.ImageCollection.fromImages(byMonth);

// Compute Correlation

var correlationCol = monthlyCol.select(['precipitation', 'ndvi']);

var correlation = correlationCol.reduce(ee.Reducer.pearsonsCorrelation());

// Select all pixels with a strong positive correlation (> 0.5)

var positive = correlation.select('correlation').gt(0.5);

Map.addLayer(correlation.select('correlation'),

{min:-1, max:1, palette: ['red', 'white', 'green']}, 'Correlation');

Map.addLayer(positive.selfMask(),

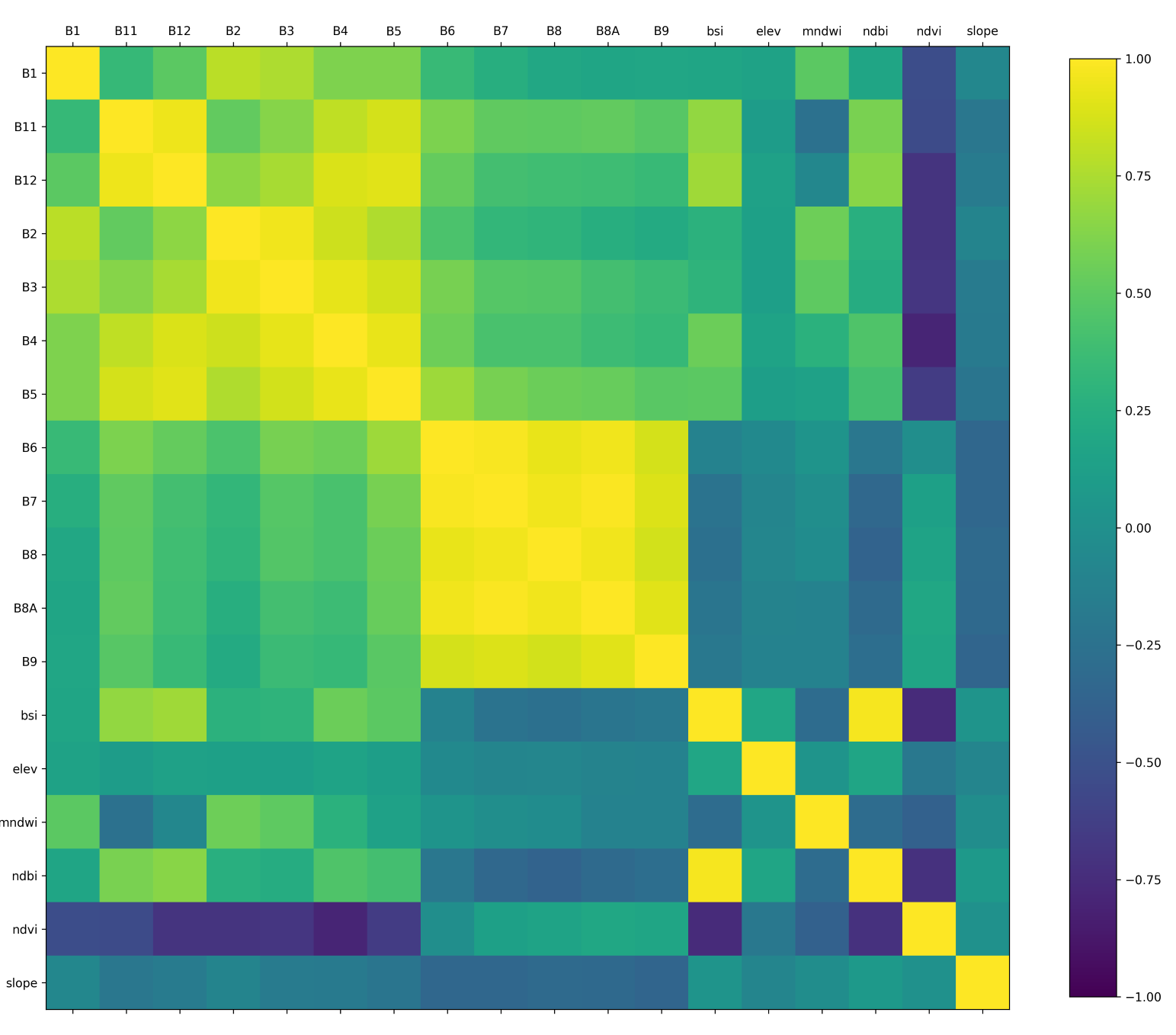

{palette: ['yellow']}, 'Positive Correlation', false); Calculating Band Correlation Matrix

When selecting features for your machine learning model, it is important to have features which are not correlated with each other. Correlated features make it difficult for machine learning models to discover the interactions between different features. A commonly used technique to aid in removing redundant variables is to create a Correlation Matrix. In Earth Engine, you can take a multi-band image and calculate pair-wise correlation between the bands using either ee.Reducer.pearsonsCorrelation() or ee.Reducer.spearmansCorrelation(). The correlation matrix helps you identify variables that are redundant and can be removed. The code below also shows how to export the table of features that can be used in other software to compute correlation.
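If you export the sampled table and want to screen for redundant bands yourself, the following plain-JavaScript sketch shows one way to do it using Spearman's rank correlation (Pearson's correlation computed on ranks). The band values, the 0.9 threshold, and the redundantPairs helper are assumptions for illustration only.

```javascript
// Sketch: Spearman rank correlation between bands and a simple
// redundancy check. Band samples are hypothetical.
function ranks(values) {
  // Ranks start at 1; tied values share their average rank.
  var indexed = values.map(function(v, i) { return {v: v, i: i}; });
  indexed.sort(function(a, b) { return a.v - b.v; });
  var result = new Array(values.length);
  var start = 0;
  while (start < indexed.length) {
    var end = start;
    while (end + 1 < indexed.length &&
           indexed[end + 1].v === indexed[start].v) {
      end++;
    }
    var avgRank = (start + end) / 2 + 1;
    for (var k = start; k <= end; k++) result[indexed[k].i] = avgRank;
    start = end + 1;
  }
  return result;
}

function pearson(x, y) {
  var n = x.length;
  var meanX = x.reduce(function(a, b) { return a + b; }, 0) / n;
  var meanY = y.reduce(function(a, b) { return a + b; }, 0) / n;
  var covXY = 0, varX = 0, varY = 0;
  for (var i = 0; i < n; i++) {
    covXY += (x[i] - meanX) * (y[i] - meanY);
    varX += (x[i] - meanX) * (x[i] - meanX);
    varY += (y[i] - meanY) * (y[i] - meanY);
  }
  return covXY / Math.sqrt(varX * varY);
}

function spearman(x, y) {
  return pearson(ranks(x), ranks(y));
}

// List band pairs whose absolute correlation meets the threshold.
function redundantPairs(samples, threshold) {
  var names = Object.keys(samples);
  var pairs = [];
  for (var i = 0; i < names.length; i++) {
    for (var j = i + 1; j < names.length; j++) {
      var rho = spearman(samples[names[i]], samples[names[j]]);
      if (Math.abs(rho) >= threshold) {
        pairs.push({band: names[i] + '_' + names[j], correlation: rho});
      }
    }
  }
  return pairs;
}

var bandSamples = {
  B2: [400, 520, 610, 700, 850],
  B3: [450, 600, 640, 820, 900],
  B8: [3000, 1200, 2600, 900, 2100]
};
var flagged = redundantPairs(bandSamples, 0.9);
console.log(flagged);
```

Here B2 and B3 rise together and are flagged, so one of them could be dropped, while B8 carries independent information and is kept.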

Correlation Matrix created in Python using data exported from GEE

// Calculate Pair-wise Correlation Between Bands of an Image

// We take a multi-band composite image

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var visParams = {bands: ['B4', 'B3', 'B2'], min: 0, max: 3000, gamma: 1.2};

Map.addLayer(composite, visParams, 'RGB');

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

// This image has 18 bands and we want to compute correlation between them.

// Get the band names

// These bands will be the input variables to the model

var bands = composite.bandNames();

print(bands);

// Generate random points to sample from the image

var numPoints = 5000;

var samples = composite.sample({

region: geometry,

scale: 10,

numPixels: numPoints,

tileScale: 16

});

print(samples.first());

// Calculate pairwise-correlation between each pair of bands

// Use ee.Reducer.pearsonsCorrelation() for Pearson's Correlation

// Use ee.Reducer.spearmansCorrelation() for Spearman's Correlation

var pairwiseCorr = ee.FeatureCollection(bands.map(function(i){

return bands.map(function(j){

var stats = samples.reduceColumns({

reducer: ee.Reducer.pearsonsCorrelation(),

selectors: [i,j]

});

var bandNames = ee.String(i).cat('_').cat(j);

return ee.Feature(null, {'correlation': stats.get('correlation'), 'band': bandNames});

});

}).flatten());

// Export the table as a CSV file

Export.table.toDrive({

collection: pairwiseCorr,

description: 'Pairwise_Correlation',

folder: 'earthengine',

fileNamePrefix: 'pairwise_correlation',

fileFormat: 'CSV',

});

// You can also export the sampled points and calculate correlation

// in Python or R. Reference Python implementation is at

// https://courses.spatialthoughts.com/python-dataviz.html#feature-correlation-matrix

Export.table.toDrive({

collection: samples,

description: 'Feature_Sample_Data',

folder: 'earthengine',

fileNamePrefix: 'feature_sample_data',

fileFormat: 'CSV',

selectors: bands.getInfo()

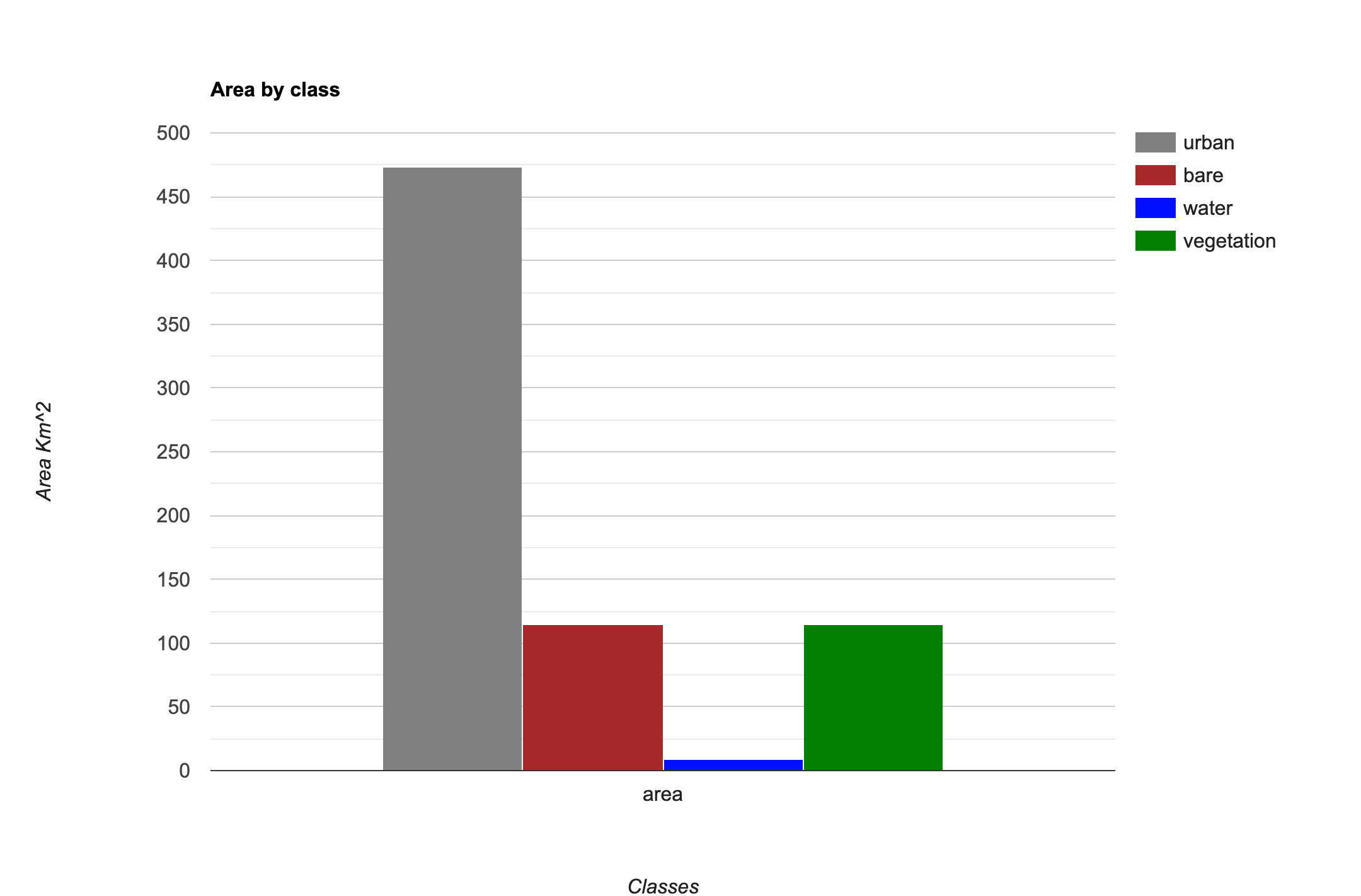

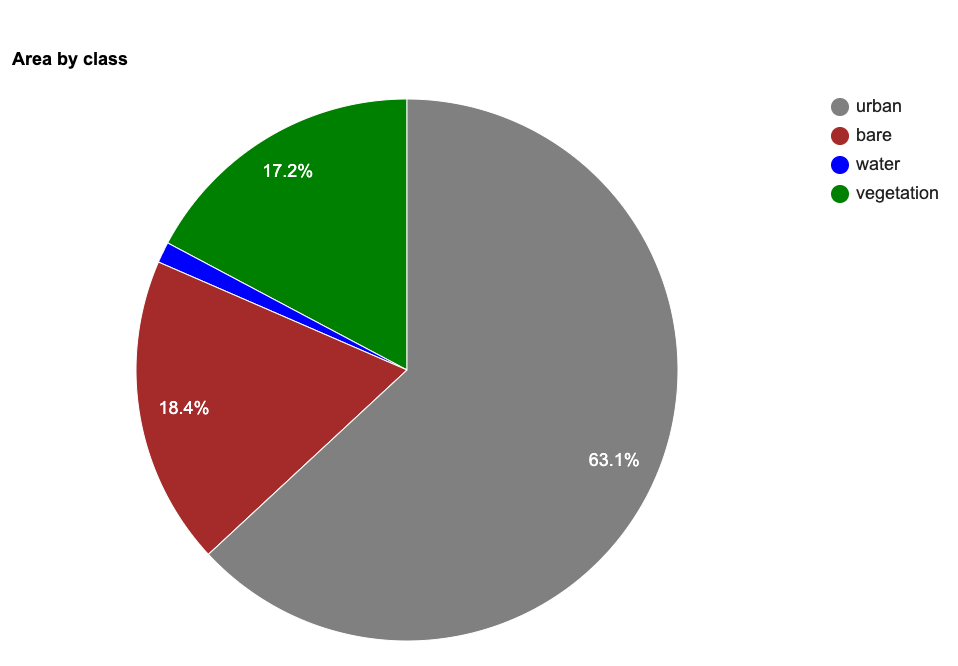

});Calculating Area by Class

This code snippet shows how to use a Grouped Reducer to calculate the area covered by each class in a classified image. It also shows how to use the ui.Chart.feature.byProperty() function to create a column chart and the ui.Chart.feature.byFeature() function to create a pie chart with areas of each class.
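Conceptually, a grouped reducer sums one value (pixel area) separately for each value of another (class). The plain-JavaScript sketch below shows that grouping on a handful of hypothetical pixels. The areaByClass helper and the 100 square metre pixel areas are assumptions for illustration.

```javascript
// Sketch: grouped sum, the operation behind a grouped reducer.
// Each hypothetical pixel has a class value and an area in square metres.
function areaByClass(pixels) {
  var sums = {};
  pixels.forEach(function(pixel) {
    sums[pixel.classification] =
      (sums[pixel.classification] || 0) + pixel.area;
  });
  // Return one entry per class, with area converted to square kilometres.
  return Object.keys(sums).map(function(key) {
    return {classification: Number(key), areaSqKm: sums[key] / 1e6};
  });
}

var pixels = [
  {classification: 0, area: 100}, {classification: 3, area: 100},
  {classification: 3, area: 100}, {classification: 2, area: 100},
  {classification: 3, area: 100}
];
var groupedAreas = areaByClass(pixels);
console.log(groupedAreas);
```

The result has the same shape as the list of groups processed in the script: one entry per class holding the class number and its summed area.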

var classified = ee.Image('projects/spatialthoughts/assets/e2e/embeddings_classification');

var geometry = classified.geometry();

Map.centerObject(geometry);

// Display the classified image.

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified, {min: 0, max: 3, palette: palette}, 'Classification');

// We can calculate the areas of all classes in a single pass

// using a Grouped Reducer. Learn more at

// https://spatialthoughts.com/2020/06/19/calculating-area-gee/

// First create a 2 band image with the area image and the classified image

// Divide the area image by 1e6 so area results are in Sq Km

var areaImage = ee.Image.pixelArea().divide(1e6).addBands(classified);

// Calculate areas

var areas = areaImage.reduceRegion({

reducer: ee.Reducer.sum().group({

groupField: 1,

groupName: 'classification',

}),

geometry: geometry,

scale: 10,

maxPixels: 1e10

});

var classAreas = ee.List(areas.get('groups'))

// Process results to extract the areas and

// create a FeatureCollection

// We can define a dictionary with class names

var classNames = ee.Dictionary({

'0': 'urban',

'1': 'bare',

'2': 'water',

'3': 'vegetation'

});

var classAreas = classAreas.map(function(item) {

var areaDict = ee.Dictionary(item);

var classNumber = ee.Number(areaDict.get('classification')).format();

var className = classNames.get(classNumber);

var area = ee.Number(

areaDict.get('sum'));

return ee.Feature(null, {'class': classNumber, 'class_name': className, 'area': area});

});

var classAreaFc = ee.FeatureCollection(classAreas);

// We can now chart the resulting FeatureCollection

// If your area is large, it is advisable to first Export

// the FeatureCollection as an Asset and import it once

// the export is finished.

// Let's create a Bar Chart

var areaChart = ui.Chart.feature.byProperty({

features: classAreaFc,

xProperties: ['area'],

seriesProperty: 'class_name',

}).setChartType('ColumnChart')

.setOptions({

hAxis: {title: 'Classes'},

vAxis: {title: 'Area Km^2'},

title: 'Area by class',

series: {

0: { color: '#cc6d8f' },

1: { color: '#ffc107' },

2: { color: '#1e88e5' },

3: { color: '#004d40' }

}

});

print(areaChart);

// We can also create a Pie-Chart

var areaChart = ui.Chart.feature.byFeature({

features: classAreaFc,

xProperty: 'class_name',

yProperties: ['area']

}).setChartType('PieChart')

.setOptions({

hAxis: {title: 'Classes'},

vAxis: {title: 'Area Km^2'},

title: 'Area by class',

colors: palette

});

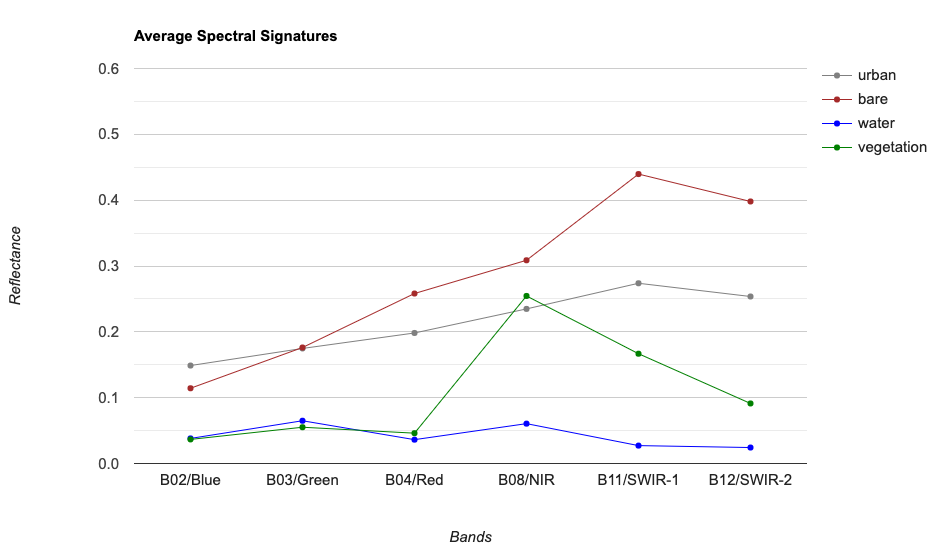

print(areaChart); Spectral Signature Plots

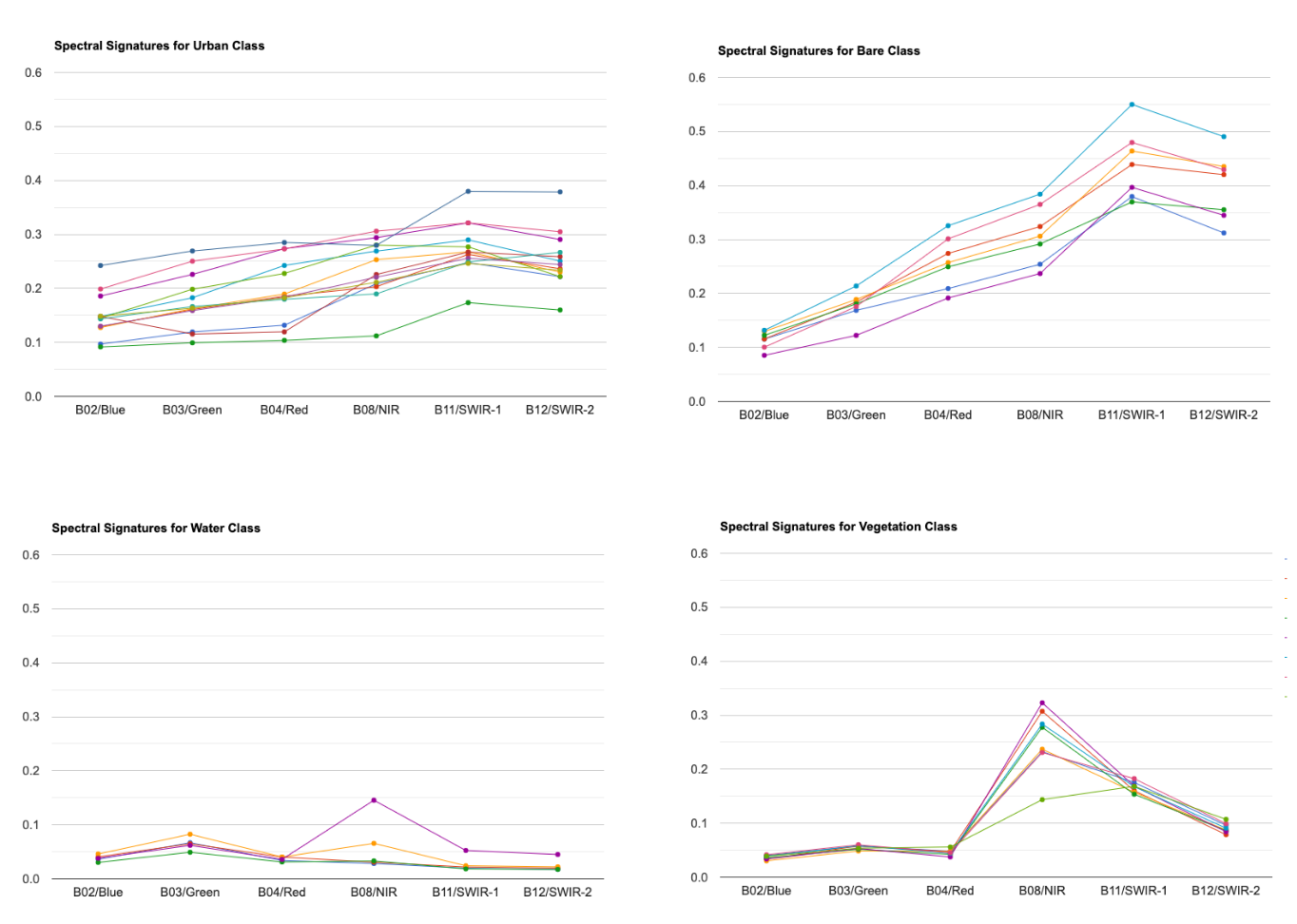

For supervised classification, it is useful to visualize the average spectral response in each band for each class. Such charts are called Spectral Response Curves or Spectral Signatures, and they help determine the separability of classes. If classes have very different signatures, a classifier will be able to separate them well.

We can also plot spectral signatures of all training samples for a class and check the quality of the training dataset. If all training samples show similar signatures, it indicates that you have done a good job of collecting appropriate samples. You can also catch potential outliers from these plots.

These charts provide a qualitative, visual method for checking the separability of classes. For quantitative methods, one can apply measures such as Spectral Distance, Mahalanobis distance, Bhattacharyya distance, Jeffreys-Matusita (JM) distance, etc. You can find the code for these in this Stack Exchange answer.
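As a rough illustration of a quantitative separability measure, the sketch below computes the Bhattacharyya distance and the JM distance for two classes in a single band, assuming each class follows a Gaussian distribution described by its mean and standard deviation. This is a simplified univariate version written in plain Python, not the code from the linked answer; the function names and the sample statistics are hypothetical. In this formulation the JM distance ranges from 0 (identical) to 2 (fully separable).

```python
import math


def bhattacharyya_1d(mean1, std1, mean2, std2):
    """Bhattacharyya distance between two univariate Gaussian distributions."""
    var1, var2 = std1 ** 2, std2 ** 2
    avg_var = (var1 + var2) / 2
    # Term driven by the difference between the class means
    term_mean = (mean1 - mean2) ** 2 / (8 * avg_var)
    # Term driven by the difference between the class variances
    term_var = 0.5 * math.log(avg_var / (std1 * std2))
    return term_mean + term_var


def jeffreys_matusita_1d(mean1, std1, mean2, std2):
    """JM distance derived from the Bhattacharyya distance (range 0 to 2)."""
    b = bhattacharyya_1d(mean1, std1, mean2, std2)
    return 2 * (1 - math.exp(-b))


# Hypothetical NIR reflectance statistics for two classes
jm_water_vegetation = jeffreys_matusita_1d(0.05, 0.01, 0.35, 0.05)
jm_urban_bare = jeffreys_matusita_1d(0.22, 0.05, 0.25, 0.05)
print('water vs vegetation:', jm_water_vegetation)
print('urban vs bare:', jm_urban_bare)
```

With real data, the means and standard deviations would come from the training samples, and the full multivariate form would use mean vectors and covariance matrices across all bands.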

Mean Signatures for All Classes

Spectral Signatures for All Training Points by Class

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

// Overlay the point on the image to get bands data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// We will create a chart of spectral signature for all classes

// We have multiple GCPs for each class

// Use a grouped reducer to calculate the average reflectance

// for each band for each class

// We have 12 bands so need to repeat the reducer 12 times

// We also need to group the results by class

// So we find the index of the landcover property and use it

// to group the results

var bands = composite.bandNames();

var numBands = bands.length();

var bandsWithClass = bands.add('landcover');

var classIndex = bandsWithClass.indexOf('landcover');

// Use .combine() to get a reducer capable of

// computing multiple stats on the input

var combinedReducer = ee.Reducer.mean().combine({

reducer2: ee.Reducer.stdDev(),

sharedInputs: true

});

// Use .repeat() to get a reducer for each band

// We then use .group() to get stats by class

var repeatedReducer = combinedReducer.repeat(numBands).group(classIndex);

var gcpStats = training.reduceColumns({

selectors: bands.add('landcover'),

reducer: repeatedReducer,

});

// Result is a dictionary, we do some post-processing to

// extract the results

var groups = ee.List(gcpStats.get('groups'));

var classNames = ee.List(['urban', 'bare', 'water', 'vegetation']);

var fc = ee.FeatureCollection(groups.map(function(item) {

// Extract the means

var values = ee.Dictionary(item).get('mean');

var groupNumber = ee.Dictionary(item).get('group');

var properties = ee.Dictionary.fromLists(bands, values);

var withClass = properties.set('class', classNames.get(groupNumber));

return ee.Feature(null, withClass);

}));

// Chart spectral signatures of training data

var options = {

title: 'Average Spectral Signatures',

hAxis: {title: 'Bands'},

vAxis: {title: 'Reflectance'},

lineWidth: 1,

pointSize: 4,

series: {

0: {color: 'grey'},

1: {color: 'brown'},

2: {color: 'blue'},

3: {color: 'green'},

}};

// Default band names don't sort properly

// Instead, we can give a dictionary with

// labels for each band in the X-Axis

var bandDescriptions = {

'B2': 'B02/Blue',

'B3': 'B03/Green',

'B4': 'B04/Red',

'B8': 'B08/NIR',

'B11': 'B11/SWIR-1',

'B12': 'B12/SWIR-2'

};

// Create the chart and set options.

var chart = ui.Chart.feature.byProperty({

features: fc,

xProperties: bandDescriptions,

seriesProperty: 'class'

})

.setChartType('ScatterChart')

.setOptions(options);

print(chart);

var classChart = function(landcover, label, color) {

var options = {

title: 'Spectral Signatures for ' + label + ' Class',

hAxis: {title: 'Bands'},

vAxis: {title: 'Reflectance'},

lineWidth: 1,

pointSize: 4,

};

var fc = training.filter(ee.Filter.eq('landcover', landcover));

var chart = ui.Chart.feature.byProperty({

features: fc,

xProperties: bandDescriptions,

})

.setChartType('ScatterChart')

.setOptions(options);

print(chart);

};

classChart(0, 'Urban');

classChart(1, 'Bare');

classChart(2, 'Water');

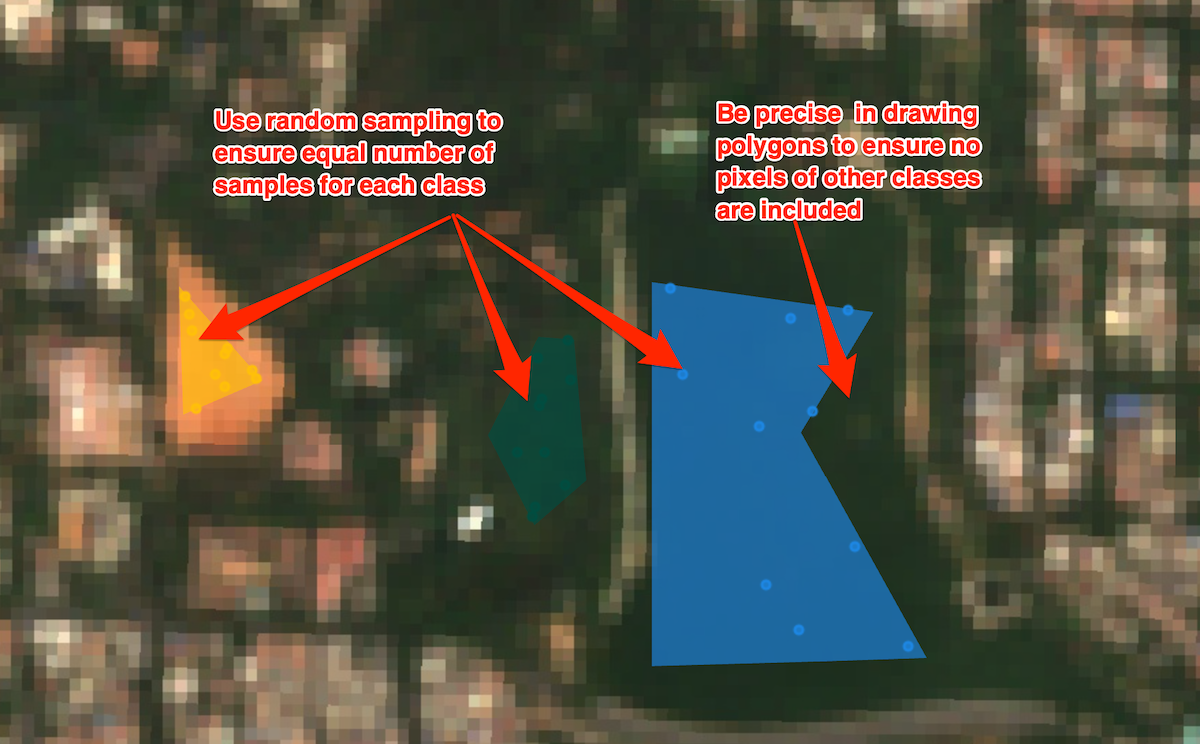

classChart(3, 'Vegetation');Using Polygons for Training Data

Tips for Using Polygons for Training Data

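// The variables boundary, urban, bare, water and vegetation are not

// defined in this script. They are assumed to be FeatureCollections

// that are imported or drawn in the Code Editor.

var geometry = boundary.geometry();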

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

.select('B.*');

var composite = filtered.median();

// Display the input composite.

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Function to get exact number of random samples

// from polygons

var getPoints = function(polygons, nPoints, classProperty){

// Generate N random points inside the polygons

var points = ee.FeatureCollection.randomPoints(polygons, nPoints);

// Get the class value property

var classValue = polygons.first().get(classProperty);

// Iterate over points and assign the class value

var pointsWithClassProperty = points.map(function(point){

return point.set(classProperty, classValue);

});

return pointsWithClassProperty;

};

// Extract random samples from each polygon

// This helps us get balanced samples for each class

var urbanPoints = getPoints(urban, 10, 'landcover');

var barePoints = getPoints(bare, 10, 'landcover');

var waterPoints = getPoints(water, 10, 'landcover');

var vegetationPoints = getPoints(vegetation, 10, 'landcover');

var gcps = urbanPoints

.merge(barePoints)

.merge(waterPoints)

.merge(vegetationPoints);

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

Map.centerObject(geometry);

// Choose a 4-color palette

// Assign a color for each class in the following order

// Urban, Bare, Water, Vegetation

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, '2019');

// Display the chosen random samples

// We use the style() function to style the GCPs

var palette = ee.List(palette);

var landcover = ee.List([0, 1, 2, 3]);

var gcpsStyled = ee.FeatureCollection(

landcover.map(function(lc){

var color = palette.get(landcover.indexOf(lc));

var markerStyle = { color: 'white', pointShape: 'diamond',

pointSize: 4, width: 1, fillColor: color};

return gcps.filter(ee.Filter.eq('landcover', lc))

.map(function(point){

return point.set('style', markerStyle);

});

})).flatten();

Map.addLayer(gcpsStyled.style({styleProperty:'style'}), {}, 'Point Samples');

Stratified Sampling of GCPs

// Example script for stratified sampling of

// training samples for supervised classification

// The script demonstrates the difference between

// a simple random split of training features vs.

// a random split of training features by class

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Simple Random Split

gcps = gcps.randomColumn();

var trainingGcpSimple = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcpSimple = gcps.filter(ee.Filter.gte('random', 0.6));

// Split with Stratified Random Sampling

// Split features into training / validation sets, per class

var classes = ee.List(gcps.aggregate_array('landcover').distinct());

print('Classes', classes);

var getSplitSamples = function(classNumber) {

var classSamples = gcps

.filter(ee.Filter.eq('landcover', classNumber))

.randomColumn('random');

// Split the samples, 60% for training, 40% for validation

var classTrainingGcp = classSamples

.filter(ee.Filter.lt('random', 0.6))

// Set a property to identify the fraction

.map(function(f) {return f.set('fraction', 'training')});

var classValidationGcp = classSamples

.filter(ee.Filter.gte('random', 0.6))

.map(function(f) {return f.set('fraction', 'validation')});

return classTrainingGcp.merge(classValidationGcp);

};

// map() the function on the list of classes

var splitSamples = ee.FeatureCollection(classes.map(getSplitSamples))

.flatten();

// Filter using the 'fraction' property

var trainingGcpStratified = splitSamples.filter(

ee.Filter.eq('fraction', 'training'));

var validationGcpStratified = splitSamples.filter(

ee.Filter.eq('fraction', 'validation'));

// Validate the results

// Function to calculate distribution of samples

var getDistribution = function(fc) {

return fc.reduceColumns({

reducer: ee.Reducer.frequencyHistogram(),

selectors: ['landcover']}).get('histogram');

};

print('Distribution of All Samples by Class', getDistribution(gcps));

print('Training (Simple Split)',

getDistribution(trainingGcpSimple));

print('Training (Stratified Split)',

getDistribution(trainingGcpStratified));

print('Validation (Simple Split)',

getDistribution(validationGcpSimple));

print('Validation (Stratified Split)',

getDistribution(validationGcpStratified));
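The difference between the two splits can also be illustrated outside Earth Engine. The following is a minimal Python sketch under stated assumptions: samples are plain dictionaries with a hypothetical `landcover` key, and the stratified version shuffles each class and cuts it at an exact proportion, rather than thresholding a random column as the script above does.

```python
import random
from collections import Counter


def simple_split(samples, fraction, rng):
    """Assign each sample to training with probability `fraction`."""
    train, valid = [], []
    for s in samples:
        (train if rng.random() < fraction else valid).append(s)
    return train, valid


def stratified_split(samples, fraction, rng, class_key='landcover'):
    """Split each class separately so class proportions are preserved."""
    by_class = {}
    for s in samples:
        by_class.setdefault(s[class_key], []).append(s)
    train, valid = [], []
    for members in by_class.values():
        shuffled = members[:]
        rng.shuffle(shuffled)
        cut = round(len(shuffled) * fraction)
        train.extend(shuffled[:cut])
        valid.extend(shuffled[cut:])
    return train, valid


# Hypothetical imbalanced sample set: 50, 30, 20 and 10 points per class
samples = [{'landcover': c} for c, n in [(0, 50), (1, 30), (2, 20), (3, 10)]
           for _ in range(n)]
rng = random.Random(42)

simple_train, simple_valid = simple_split(samples, 0.6, rng)
strat_train, strat_valid = stratified_split(samples, 0.6, rng)

print('Simple split:', Counter(s['landcover'] for s in simple_train))
print('Stratified split:', Counter(s['landcover'] for s in strat_train))
```

The stratified split keeps 60% of every class in the training set, while the simple split only achieves that ratio on average, so small classes can end up under-represented.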

Identify Misclassified GCPs

While doing accuracy assessment, you will find validation features that were not classified correctly. It is useful to see the misclassified points on the map. We can use the ee.Filter.eq() and ee.Filter.neq() filters to select the features where the actual and predicted classes are different. The code below shows how to implement this and how to use the style() function to visualize them effectively.
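The filtering logic itself is simple and can be sketched in plain Python. In this illustration, each validation sample is a dictionary with the actual class in `landcover` and the predicted class in `classification`; the sample records and the helper names are hypothetical and are not part of the Earth Engine API.

```python
def misclassified(samples, actual_key='landcover',
                  predicted_key='classification'):
    """Return samples whose predicted class differs from the actual class."""
    return [s for s in samples if s[actual_key] != s[predicted_key]]


def misclassified_for_class(samples, class_value, actual_key='landcover',
                            predicted_key='classification'):
    """Return samples of one actual class that were predicted as another."""
    return [s for s in samples
            if s[actual_key] == class_value
            and s[predicted_key] != class_value]


# Hypothetical validation results
test_samples = [
    {'id': 'a', 'landcover': 0, 'classification': 0},
    {'id': 'b', 'landcover': 0, 'classification': 1},
    {'id': 'c', 'landcover': 2, 'classification': 2},
    {'id': 'd', 'landcover': 3, 'classification': 1},
]

all_errors = misclassified(test_samples)
urban_errors = misclassified_for_class(test_samples, 0)
print('All misclassified:', [s['id'] for s in all_errors])
print('Urban misclassified:', [s['id'] for s in urban_errors])
```

The first helper compares two properties of the same record against each other, which is why the Earth Engine version needs a filter that takes a left and a right field instead of a fixed value.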

// Script that shows how to apply filters to identify

// validation points that were misclassified

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

// Display the input composite.

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(composite, rgbVis, 'image');

// Add a random column and split the GCPs into training and validation set

gcps = gcps.randomColumn();

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16,

geometries:true

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified, {min: 0, max: 3, palette: palette}, 'Classified');

var test = classified.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

tileScale: 16,

scale: 10,

geometries:true // This is required to visualize the locations

});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

print('Confusion Matrix', testConfusionMatrix);

// Let's apply filters to find misclassified points

// We can find all points which are labeled landcover=0 (urban)

// but were not classified correctly

var urbanMisclassified = test

.filter(ee.Filter.eq('landcover', 0))

.filter(ee.Filter.neq('classification', 0));

print('Urban Misclassified Points', urbanMisclassified);

// We can also apply a filter to select all misclassified points

// Since we are comparing 2 properties against each other,

// we need to use a binary filter

var misClassified = test.filter(ee.Filter.notEquals({

leftField:'classification', rightField:'landcover'}));

print('All Misclassified Points', misClassified);

// Display the misclassified points by styling them

var landcover = ee.List([0, 1, 2, 3]);

var palette = ee.List(['gray','brown','blue','green']);

var misclassStyled = ee.FeatureCollection(

landcover.map(function(lc){

var feature = misClassified.filter(ee.Filter.eq('landcover', lc));

var color = palette.get(landcover.indexOf(lc));

var markerStyle = {color:color};

return feature.map(function(point){

return point.set('style', markerStyle);

});

})).flatten();

Map.addLayer(misclassStyled.style({styleProperty:"style"}), {}, 'Misclassified Points');

Image Normalization and Standardization

For machine learning, it is a recommended practice to either normalize or standardize your features. The code below shows how to implement these feature scaling techniques.
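Before looking at the Earth Engine implementation, the two formulas can be checked on a small list of numbers. This is a minimal sketch in plain Python with hypothetical band values; it uses the population standard deviation, which is an assumption made for this sketch.

```python
import statistics


def normalize(values):
    """Min-max scaling: (x - xmin) / (xmax - xmin), giving values in 0 to 1."""
    vmin, vmax = min(values), max(values)
    return [(v - vmin) / (vmax - vmin) for v in values]


def standardize(values):
    """Z-score scaling: (x - mean) / stddev, giving mean 0 and stddev 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]


# Hypothetical pixel values for one band
band = [200, 400, 600, 1000]

normalized = normalize(band)
standardized = standardize(band)
print('Normalized:', normalized)
print('Standardized mean:', statistics.fmean(standardized))
print('Standardized stddev:', statistics.pstdev(standardized))
```

Normalization keeps the shape of the distribution but is sensitive to extreme minimum and maximum values, while standardization is less affected by a single outlier but does not bound the output range.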

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

//**************************************************************************

// Function to Normalize Image

// Pixel Values should be between 0 and 1

// Formula is (x - xmin) / (xmax - xmin)

//**************************************************************************

function normalize(image){

var bandNames = image.bandNames();

// Compute min and max of the image

var minDict = image.reduceRegion({

reducer: ee.Reducer.min(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var maxDict = image.reduceRegion({

reducer: ee.Reducer.max(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var mins = ee.Image.constant(minDict.values(bandNames));

var maxs = ee.Image.constant(maxDict.values(bandNames));

var normalized = image.subtract(mins).divide(maxs.subtract(mins));

return normalized;

}

//**************************************************************************

// Function to Standardize Image

// (Mean Centered Imagery with Unit Standard Deviation)

// https://365datascience.com/tutorials/statistics-tutorials/standardization/

//**************************************************************************

function standardize(image){

var bandNames = image.bandNames();

// Mean center the data by subtracting the mean of

// each band computed over the region.

var meanDict = image.reduceRegion({

reducer: ee.Reducer.mean(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var means = ee.Image.constant(meanDict.values(bandNames));

var centered = image.subtract(means);

var stdDevDict = image.reduceRegion({

reducer: ee.Reducer.stdDev(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var stddevs = ee.Image.constant(stdDevDict.values(bandNames));

var standardized = centered.divide(stddevs);

return standardized;

}

var standardizedComposite = standardize(composite);

var normalizedComposite = normalize(composite);

Map.addLayer(composite,

{bands: ['B4', 'B3', 'B2'], min: 0, max: 3000, gamma: 1.2}, 'Original Image');

Map.addLayer(normalizedComposite,

{bands: ['B4', 'B3', 'B2'], min: 0, max: 1, gamma: 1.2}, 'Normalized Image');

Map.addLayer(standardizedComposite,

{bands: ['B4', 'B3', 'B2'], min: -1, max: 2, gamma: 1.2}, 'Standardized Image');

Map.centerObject(geometry);

// Verify Normalization

var beforeDict = composite.reduceRegion({

reducer: ee.Reducer.minMax(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var afterDict = normalizedComposite.reduceRegion({

reducer: ee.Reducer.minMax(),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

print('Original Image Min/Max', beforeDict);

print('Normalized Image Min/Max', afterDict);

// Verify Standardization

// Verify that the means are 0 and standard deviations are 1

var beforeDict = composite.reduceRegion({

reducer: ee.Reducer.mean().combine({

reducer2: ee.Reducer.stdDev(), sharedInputs: true}),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

var resultDict = standardizedComposite.reduceRegion({

reducer: ee.Reducer.mean().combine({

reducer2: ee.Reducer.stdDev(), sharedInputs: true}),

geometry: geometry,

scale: 10,

maxPixels: 1e9,

bestEffort: true,

tileScale: 16

});

// Means are very small fractions close to 0

// Round them off to 2 decimals

var afterDict = resultDict.map(function(key, value) {

return ee.Number(value).format('%.2f');

});

print('Original Image Mean/StdDev', beforeDict);

print('Standardized Image Mean/StdDev', afterDict);

Calculate Feature Importance

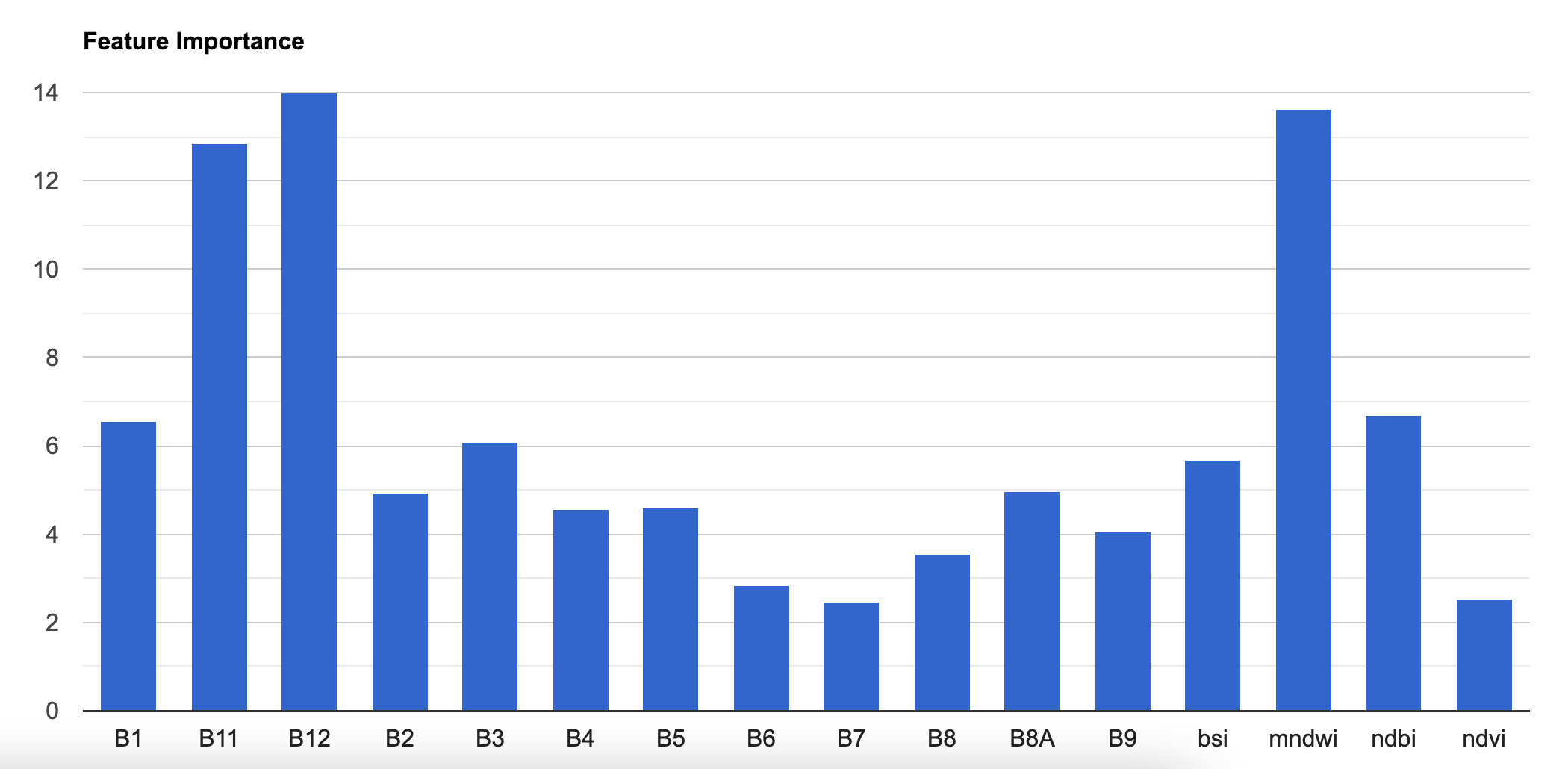

Many classifiers in GEE have an explain() method that calculates feature importances. The classifier assigns a score to each input variable indicating how useful it was at predicting the correct value. The script below shows how to extract the feature importance and create a chart to visualize it.
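The raw importance scores are easier to compare when expressed as a percentage of their total. The following plain Python sketch shows that calculation on a hypothetical dictionary of scores; the band names and values are made up for illustration and do not come from an actual classifier.

```python
def relative_importance(importance):
    """Convert raw importance scores to percentages of their sum."""
    total = sum(importance.values())
    return {name: score * 100 / total for name, score in importance.items()}


# Hypothetical raw importance scores keyed by input band
raw_importance = {'B4': 12.0, 'B8': 18.0, 'ndvi': 30.0}

relative = relative_importance(raw_importance)
for name, pct in sorted(relative.items(), key=lambda kv: kv[1], reverse=True):
    print(name, round(pct, 1))
```

Because the percentages sum to 100, they can be read directly as the share of total importance attributed to each input.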

Relative Feature Importance

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var addIndices = function(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var ndbi = image.normalizedDifference(['B11', 'B8']).rename(['ndbi']);

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

var bsi = image.expression(

'(( X + Y ) - (A + B)) /(( X + Y ) + (A + B)) ', {

'X': image.select('B11'), //swir1

'Y': image.select('B4'), //red

'A': image.select('B8'), // nir

'B': image.select('B2'), // blue

}).rename('bsi');

return image.addBands(ndvi).addBands(ndbi).addBands(mndwi).addBands(bsi);

};

composite = addIndices(composite);

// Display the input composite.

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.addLayer(composite, rgbVis, 'image');

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified, {min: 0, max: 3, palette: palette}, '2019');

//**************************************************************************

// Calculate Feature Importance

//**************************************************************************

// Run .explain() to see what the classifier looks like

print(classifier.explain());

// Calculate variable importance

var importance = ee.Dictionary(classifier.explain().get('importance'));

// Calculate relative importance

var sum = importance.values().reduce(ee.Reducer.sum());

var relativeImportance = importance.map(function(key, val) {

return (ee.Number(val).multiply(100)).divide(sum);

});

print(relativeImportance);

// Create a FeatureCollection so we can chart it

var importanceFc = ee.FeatureCollection([

ee.Feature(null, relativeImportance)

]);

var chart = ui.Chart.feature.byProperty({

features: importanceFc

}).setOptions({

title: 'Feature Importance',

vAxis: {title: 'Importance'},

hAxis: {title: 'Feature'},

legend: {position: 'none'}

});

print(chart);

Visualizing Random Forest Decision Trees

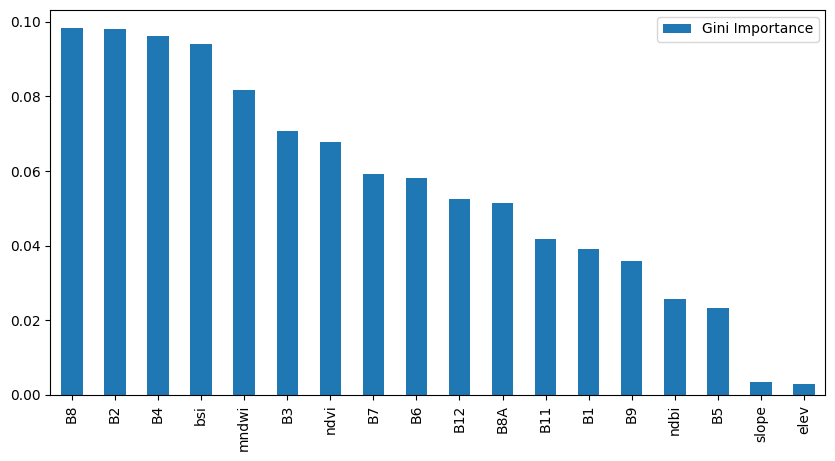

The Earth Engine API lacks functions for analyzing and visualizing machine learning models. Several implementations are available from the community to fill this gap, including ours. As an alternative, we can export our training samples from GEE and use widely used machine learning packages like Scikit-Learn to carry out this analysis. This notebook demonstrates the process of exporting the training samples as a CSV file and using it with Scikit-Learn for the following analysis:

- Analyzing Feature Importance using GINI Importance

- Visualizing Random Forest Trees
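For readers unfamiliar with the term, Gini importance is based on how much each split in a decision tree reduces Gini impurity. The sketch below, in plain Python with hypothetical class labels, computes the impurity of a set of labels and the impurity decrease achieved by one split. It illustrates the underlying quantity only and is not Scikit-Learn's implementation.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def impurity_decrease(parent, left, right):
    """Impurity of the parent minus the weighted impurity of the children."""
    n = len(parent)
    weighted = (len(left) / n) * gini_impurity(left) \
        + (len(right) / n) * gini_impurity(right)
    return gini_impurity(parent) - weighted


# Hypothetical node with two classes and a split that separates them
parent = [0, 0, 1, 1]
left, right = [0, 0], [1, 1]

print('Parent impurity:', gini_impurity(parent))
print('Decrease from split:', impurity_decrease(parent, left, right))
```

A feature that is used in splits producing large impurity decreases across the trees of the forest ends up with a high Gini importance.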

Initialization

First of all, you need to run the following cells to initialize the API and authorize your account. You must have a Google Cloud Project associated with your GEE account. Replace the cloud_project with your own project from Google Cloud Console.

Part 1: Export Training Samples from GEE

# Load training samples

gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps')

# Load the region boundary of a basin

boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary')

geometry = boundary.geometry()

year = 2019

startDate = ee.Date.fromYMD(year, 1, 1)

endDate = startDate.advance(1, 'year')

s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED')

filtered = s2 \
    .filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30)) \
    .filter(ee.Filter.date(startDate, endDate)) \
    .filter(ee.Filter.bounds(geometry))

# Load the Cloud Score+ collection

csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED')

csPlusBands = csPlus.first().bandNames()

# We need to add Cloud Score + bands to each Sentinel-2

# image in the collection

# This is done using the linkCollection() function

filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands)

# Function to mask pixels with low CS+ QA scores.

def maskLowQA(image):
    qaBand = 'cs'
    clearThreshold = 0.5
    mask = image.select(qaBand).gte(clearThreshold)
    return image.updateMask(mask)

filteredMasked = filteredS2WithCs \
    .map(maskLowQA) \
    .select('B.*')

composite = filteredMasked.median()

# Add Spectral Indices

def addIndices(image):
    ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi'])
    ndbi = image.normalizedDifference(['B11', 'B8']).rename(['ndbi'])
    mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi'])
    bsi = image.expression(
        '(( X + Y ) - (A + B)) /(( X + Y ) + (A + B)) ', {
            'X': image.select('B11'),  # swir1
            'Y': image.select('B4'),   # red
            'A': image.select('B8'),   # nir
            'B': image.select('B2'),   # blue
        }).rename('bsi')
    return image.addBands(ndvi).addBands(ndbi).addBands(mndwi).addBands(bsi)

composite = addIndices(composite)

# Add Slope and Elevation

# Use ALOS World 3D

alos = ee.ImageCollection('JAXA/ALOS/AW3D30/V4_1')

# This comes as a collection of images

# We mosaic it to create a single image

# Need to set the projection correctly on the mosaic

# for the slope computation

proj = alos.first().projection()

elevation = alos.select('DSM').mosaic() \
    .setDefaultProjection(proj) \
    .rename('elev')

slope = ee.Terrain.slope(elevation) \
    .rename('slope')

composite = composite.addBands(elevation).addBands(slope)

print('Composite Bands', composite.bandNames().getInfo())

# Train the model with new features

# Add a random column and split the GCPs into training and validation set

gcps = gcps.randomColumn()

# This being a simpler classification, we take 60% points

# for training. Normal recommended ratio is

# 70% training, 30% validation

trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6))

validationGcp = gcps.filter(ee.Filter.gte('random', 0.6))

# Overlay the samples on the image to get training data.

training = composite.sampleRegions(**{

'collection': trainingGcp,

'properties': ['landcover'],

'scale': 10,

'tileScale': 16,

})

print('Training Feature', training.first().getInfo())

Export the training features as a CSV file. We use Export.table.toCloudStorage() for ease of sharing.

If you want to export the samples to your Drive, run the following code to mount your drive in Colab.

# Mount Google Drive

from google.colab import drive

drive.mount('/content/drive')

# Adjust the path to where you saved your CSV in Drive

export_folder = '/content/drive/MyDrive/earthengine'

bucket = 'spatialthoughts-public-data'

folder = 'e2e'

export_folder = f'gs://{bucket}/{folder}'

export_name = 'training_samples'

file_name_prefix = f'{folder}/{export_name}'

# Create an export task using ee.batch.Export.table.toCloudStorage()

task = ee.batch.Export.table.toCloudStorage(**{

'collection': training,

'description': 'Training_Samples_Export',

'bucket': 'spatialthoughts-public-data',

'fileNamePrefix': 'e2e/training_samples',

'fileFormat': 'CSV',

})

# Start the task

#task.start()

print('Started Training Samples Export Task')

Part 2: Analysis with Scikit-Learn

We read the exported training samples.

import pandas as pd

gcs_url = 'https://storage.googleapis.com'

file_path = f'{gcs_url}/{bucket}/{folder}/{export_name}.csv'

df = pd.read_csv(file_path)

df

Train a Random Forest Model.

from sklearn.ensemble import RandomForestClassifier

feature_cols = [

'B1', 'B2', 'B3', 'B4', 'B5', 'B6', 'B7', 'B8',

'B8A','B9', 'B11', 'B12','ndvi', 'ndbi', 'mndwi',

'bsi', 'elev', 'slope'

]

target_col = 'landcover'

x = df[feature_cols]

y = df[target_col]

rf_classifier = RandomForestClassifier(

n_estimators=50, # Number of trees in the forest

max_depth=5, # Maximum depth of the trees

random_state=42 # Seed for reproducibility

)

# Train the classifier on the training data

rf_classifier.fit(x, y)

Visualize GINI Importance

import matplotlib.pyplot as plt

# Built-in feature importance (Gini Importance)

importances = rf_classifier.feature_importances_

feature_importance = pd.DataFrame({

'Feature': feature_cols,

'Gini Importance': importances

}).sort_values('Gini Importance', ascending=False)

# Plot the results

fig, ax = plt.subplots(1, 1)

fig.set_size_inches(10,5)

feature_importance.plot(kind='bar', ax=ax)

ax.set_xticklabels(feature_importance['Feature'], rotation=90)

plt.show()

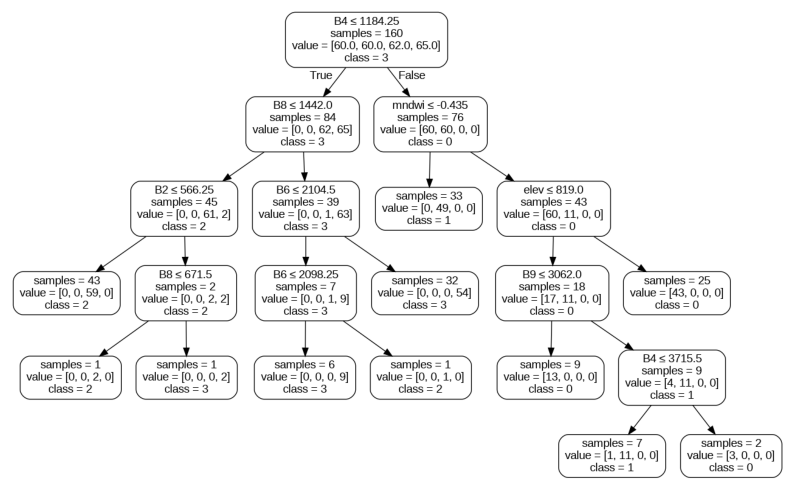

We can also visualize the individual decision trees created by the Random Forest algorithm.

from sklearn.tree import export_graphviz

import graphviz

# Export one of the trees

tree_index = 0

output_file_path = f'decision_tree_{tree_index}'

tree_to_visualize = rf_classifier.estimators_[tree_index] # Visualize the first tree

dot_data = export_graphviz(

tree_to_visualize,

out_file=None,

feature_names=feature_cols,

class_names=[str(cls) for cls in rf_classifier.classes_], # Convert class names to strings

rounded=True,

special_characters=True,

impurity=False

)

# Visualize the tree

graph = graphviz.Source(dot_data)

graph.render(output_file_path, format='png', cleanup=True)

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

# Plot the results

fig, ax = plt.subplots(1, 1)

fig.set_size_inches(10,10)

img = mpimg.imread(f'{output_file_path}.png')

ax.imshow(img)

plt.axis('off')

plt.show()

Classification with Migrated Training Samples
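The script in this section discards training samples whose spectra changed between two years, using the 'sam' (spectral angle mapper) distance. As a rough illustration of the idea, the plain Python sketch below computes the angle in radians between two band vectors and keeps only the samples below a threshold. The sample values, the `id`, `before` and `after` keys, and the threshold are hypothetical, and the sketch makes no assumption about how Earth Engine scales its own 'sam' output.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to the valid range of acos to guard against rounding errors
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_angle)


def unchanged_samples(samples, threshold):
    """Keep samples whose spectra changed by less than the threshold angle."""
    return [s for s in samples
            if spectral_angle(s['before'], s['after']) < threshold]


# Hypothetical reflectance values for two sample points in two years
samples = [
    {'id': 1, 'before': [0.10, 0.20, 0.30], 'after': [0.11, 0.21, 0.29]},
    {'id': 2, 'before': [0.10, 0.20, 0.30], 'after': [0.30, 0.10, 0.05]},
]

kept = [s['id'] for s in unchanged_samples(samples, threshold=0.2)]
print('Samples kept:', kept)
```

The spectral angle depends on the shape of the spectrum and not on its overall brightness, so a uniformly brighter or darker pixel still has an angle close to zero.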

// Script showing how to use migrated training samples

// for multi-year classification

// Training samples are collected on a 2019 Sentinel-2 composite

// and are used to classify a 2020 Sentinel-2 composite

// We use spectral distance measure to discard samples that show

// large change between the target and reference years.

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

var composite = ee.Image('projects/spatialthoughts/assets/e2e/composite');

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 0.3,

bands: ['B4', 'B3', 'B2'],

};

var scaleValues = function(image) {

return image.multiply(0.0001).copyProperties(image, ['system:time_start']);

};

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

// Prepare 2019 composite

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

.select('B.*')

.map(scaleValues);

var composite2019 = filtered.median().clip(geometry);

// Prepare 2020 Composite

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2020-01-01', '2021-01-01'))

.filter(ee.Filter.bounds(geometry))

.select('B.*')

.map(scaleValues);

var composite2020 = filtered.median().clip(geometry);

// Display the 2019 composite.

Map.addLayer(composite2019, rgbVis, 'Composite 2019');

// Display the 2020 composite.

Map.addLayer(composite2020, rgbVis, 'Composite 2020');

// Compute Spectral Distance between 2019 and 2020 images

var distance = composite2019.spectralDistance(composite2020, 'sam');

// GCPs were collected on the 2019 image

// Find out which GCPs are still unchanged in 2020

// Get the distance at the training points

var gcpsWithDistance = distance.sampleRegions({

collection: gcps,

scale: 10,

tileScale: 16,

geometries: true

});

Map.addLayer(gcpsWithDistance, {color: 'red'}, 'GCPs with Distance', false);

// Adjust the threshold to discard GCPs at changed locations

// Threshold is determined manually

var threshold = 0.2;

var newGcps = gcpsWithDistance.filter(ee.Filter.lt('distance', threshold));

Map.addLayer(newGcps, {color: 'blue'}, 'Filtered GCPs');

print('Total GCPs', gcps.size());

print('Migrated GCPs', newGcps.size());

// We wrap the classification workflow in a function

// and call the function with the different composites and GCPs

performClassification(composite2019, gcps, '2019');

performClassification(composite2020, newGcps, '2020');

//**************************************************************************

// Classification and Accuracy Assessment

//**************************************************************************

function performClassification(image, gcps, year) {

gcps = gcps.randomColumn();

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = image.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: image.bandNames()

});

// Classify the image.

var classified = image.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified, {min: 0, max: 3, palette: palette}, year);

// Use classification map to assess accuracy using the validation fraction

// of the overall training set created above.

var test = classified.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

tileScale: 16,

scale: 10,

});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

// Printing of confusion matrix may time out. Alternatively, you can export it as CSV

print('Confusion Matrix ' + year, testConfusionMatrix);

print('Test Accuracy ' + year, testConfusionMatrix.accuracy());

}

Time Series Modeling
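
The script below fits a harmonic model to an NDVI time series using Earth Engine's linear regression reducer. As a rough illustration of what that regression computes, here is a minimal sketch in plain JavaScript that runs outside Earth Engine (for example in Node.js). It assumes a noise-free synthetic series and a single harmonic. The helper names `designRow`, `solve` and `fitHarmonic` are hypothetical and not part of the Earth Engine API. The phase convention used here (writing `c*cos(t) + s*sin(t)` as `A*cos(t - phi)`) is also an assumption of the sketch; the argument order of `ee.Image.atan2` may differ from `Math.atan2`, so check the API reference before comparing values.

```javascript
// Plain JavaScript sketch (no Earth Engine calls) of a harmonic fit:
//   y = b0 + b1*t + sum_k [ c_k*cos(k*t) + s_k*sin(k*t) ],  with t = 2*pi*years
// All function names are illustrative assumptions, not part of any API.

// Build one row of independent variables for a time given in fractional years.
function designRow(years, harmonics) {
  var t = 2 * Math.PI * years;
  var row = [1, t];                       // constant and linear trend terms
  for (var k = 1; k <= harmonics; k++) row.push(Math.cos(k * t));
  for (var k2 = 1; k2 <= harmonics; k2++) row.push(Math.sin(k2 * t));
  return row;                             // [constant, t, cos_1.., sin_1..]
}

// Solve A x = b by Gaussian elimination with partial pivoting.
function solve(A, b) {
  var n = b.length;
  var M = A.map(function(r, i) { return r.concat([b[i]]); });
  for (var c = 0; c < n; c++) {
    var p = c;
    for (var r = c + 1; r < n; r++) {
      if (Math.abs(M[r][c]) > Math.abs(M[p][c])) p = r;
    }
    var tmp = M[c]; M[c] = M[p]; M[p] = tmp;
    for (var r2 = c + 1; r2 < n; r2++) {
      var f = M[r2][c] / M[c][c];
      for (var j = c; j <= n; j++) M[r2][j] -= f * M[c][j];
    }
  }
  var x = new Array(n);
  for (var i = n - 1; i >= 0; i--) {
    var s = M[i][n];
    for (var j2 = i + 1; j2 < n; j2++) s -= M[i][j2] * x[j2];
    x[i] = s / M[i][i];
  }
  return x;
}

// Ordinary least squares via the normal equations (X^T X) beta = X^T y.
function fitHarmonic(yearsList, values, harmonics) {
  var X = yearsList.map(function(y) { return designRow(y, harmonics); });
  var n = X[0].length;
  var XtX = [], Xty = [];
  for (var a = 0; a < n; a++) {
    XtX.push([]);
    Xty.push(0);
    for (var b = 0; b < n; b++) XtX[a].push(0);
  }
  X.forEach(function(row, i) {
    for (var a = 0; a < n; a++) {
      Xty[a] += row[a] * values[i];
      for (var b = 0; b < n; b++) XtX[a][b] += row[a] * row[b];
    }
  });
  return solve(XtX, Xty);
}

// Synthetic, noise-free series: 3 years sampled 24 times per year.
var trueBeta = [0.4, 0.01, 0.2, 0.1];     // constant, t, cos_1, sin_1
var yearsList = [], values = [];
for (var i = 0; i < 72; i++) {
  var yrs = i / 24;
  var row = designRow(yrs, 1);
  yearsList.push(yrs);
  values.push(row.reduce(function(sum, x, j) {
    return sum + x * trueBeta[j];
  }, 0));
}

var beta = fitHarmonic(yearsList, values, 1);

// Amplitude and phase of the first harmonic, using
// c*cos(t) + s*sin(t) = A*cos(t - phi), A = hypot(c, s), phi = atan2(s, c).
var amplitude = Math.hypot(beta[2], beta[3]);
var phase = Math.atan2(beta[3], beta[2]);

console.log('coefficients', beta);
console.log('amplitude', amplitude, 'phase', phase);
```

In Earth Engine the same fit is done per pixel: the bands `constant`, `t`, `cos_*` and `sin_*` play the role of the design row, and the linear regression reducer solves the least-squares problem across all images in the collection.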

// Example script showing how to fit a harmonic model

// to a NDVI time-series

// This is largely adapted from

// https://developers.google.com/earth-engine/tutorials/community/time-series-modeling

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

// We define 2 polygons for adjacent farms

var farms = ee.FeatureCollection([

ee.Feature(

ee.Geometry.Polygon(

[[[82.55407706060632, 27.135887938359975],

[82.55605116644128, 27.135085913223808],

[82.55613699712976, 27.13527687211144],

[82.55418434896691, 27.136117087342033]]]),

{'system:index': '0'}),

ee.Feature(

ee.Geometry.Polygon(

[[[82.54973858752477, 27.137188234050676],

[82.55046814837682, 27.136806322479018],

[82.55033940234411, 27.136500792282273],

[82.5508973018192, 27.136328931179623],

[82.55119770922887, 27.13688270489774],

[82.5498887912296, 27.137455571374517]]]),

{'system:index': '1'})

]);

Map.centerObject(farms);

var geometry = farms.geometry();

//Map.addLayer(geometry, {color: 'grey'}, 'Boundary');

//Map.centerObject(geometry);

var filtered = s2

.filter(ee.Filter.date('2017-01-01', '2023-01-01'))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score+ bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

// Function to add NDVI, time, and constant variables

var addVariables = function(image) {

// Compute time in fractional years since the epoch.

var date = image.date();

var years = date.difference(ee.Date('1970-01-01'), 'year');

var timeRadians = ee.Image(years.multiply(2 * Math.PI));

// Compute NDVI and return the image with the added bands.

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var t = timeRadians.rename('t').float();

var constant = ee.Image.constant(1);

return image.addBands([ndvi, t, constant]);

};

var filteredWithVariables = filteredMasked.map(addVariables);

print(filteredWithVariables.first());

// Plot a time series of NDVI at a single location.

var singleFarm = ee.Feature(farms.toList(2).get(0));

// Display a time-series chart

var chart = ui.Chart.image.series({

imageCollection: filteredWithVariables.select('ndvi'),

region: singleFarm.geometry(),

reducer: ee.Reducer.mean(),

scale: 10

}).setOptions({

title: 'Original NDVI Time Series',

interpolateNulls: false,

vAxis: {title: 'NDVI', viewWindow: {min: 0, max: 1}},

hAxis: {title: '', format: 'YYYY-MM'},

lineWidth: 1,

pointSize: 2,

series: {

0: {color: '#238b45'},

},

});

print(chart);

// The number of cycles per year to model.

var harmonics = 2;

// Make a list of harmonic frequencies to model.

// These also serve as band name suffixes.

var harmonicFrequencies = ee.List.sequence(1, harmonics);

// Function to get a sequence of band names for harmonic terms.

var getNames = function(base, list) {

return ee.List(list).map(function(i) {

return ee.String(base).cat(ee.Number(i).int());

});

};

// Construct lists of names for the harmonic terms.

var cosNames = getNames('cos_', harmonicFrequencies);

var sinNames = getNames('sin_', harmonicFrequencies);

// The dependent variable we are modeling.

var dependent = 'ndvi';

// Independent variables.

var independents = ee.List(['constant', 't']).cat(cosNames).cat(sinNames);

// Function to compute the specified number of harmonics

// and add them as bands. Assumes the time band is present.

var addHarmonics = function(freqs) {

return function(image) {

// Make an image of frequencies.

var frequencies = ee.Image.constant(freqs);

// This band should represent time in radians.

var time = ee.Image(image).select('t');

// Get the cosine terms.

var cosines = time.multiply(frequencies).cos().rename(cosNames);

// Get the sine terms.

var sines = time.multiply(frequencies).sin().rename(sinNames);

return image.addBands(cosines).addBands(sines);

};

};

var filteredHarmonic = filteredWithVariables.map(addHarmonics(harmonicFrequencies));

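// The regression output contains a 'coefficients' band, which is a 6x1 array image

// (one row per independent variable: constant, t, cos_1, cos_2, sin_1, sin_2).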

var harmonicTrend = filteredHarmonic

.select(independents.add(dependent))

.reduce(ee.Reducer.linearRegression(independents.length(), 1));

// Turn the array image into a multi-band image of coefficients.

var harmonicTrendCoefficients = harmonicTrend.select('coefficients')

.arrayProject([0])

.arrayFlatten([independents]);

// Compute fitted values.

var fittedHarmonic = filteredHarmonic.map(function(image) {

return image.addBands(

image.select(independents)

.multiply(harmonicTrendCoefficients)

.reduce('sum')

.rename('fitted'));

});

// Plot the fitted model and the original data at the ROI.

var chart = ui.Chart.image.series({

imageCollection: fittedHarmonic.select(['fitted', 'ndvi']),

region: singleFarm.geometry(),

reducer: ee.Reducer.mean(),

scale: 10

}).setOptions({

title: 'NDVI Time Series',

vAxis: {title: 'NDVI', viewWindow: {min: 0, max: 1}},

hAxis: {title: '', format: 'YYYY-MM'},

lineWidth: 1,

series: {

1: {color: '#66c2a4', lineDashStyle: [1, 1], pointSize: 1}, // Original NDVI

0: {color: '#238b45', lineWidth: 2, pointSize: 1 }, // Fitted NDVI

},

});

print(chart);

print(fittedHarmonic);

// Compute phase and amplitude.

var phase = harmonicTrendCoefficients.select('sin_1')

.atan2(harmonicTrendCoefficients.select('cos_1'))

// Scale to [0, 1] from radians.

.unitScale(-Math.PI, Math.PI);

var amplitude = harmonicTrendCoefficients.select('sin_1')

.hypot(harmonicTrendCoefficients.select('cos_1'))

// Add a scale factor for visualization.

.multiply(5);

var constant = harmonicTrendCoefficients.select('constant');

// Add the phase, amplitude and constant bands to your composite

// which captures the phenology at each pixel.

// Use the HSV to RGB transformation to display phase and amplitude.

var rgb = ee.Image.cat([phase, amplitude, 1]).hsvToRgb();

Map.addLayer(rgb, {}, 'Phase (hue), Amplitude (sat)', false);

// The Phase and Amplitude values will be very different

// at farms following different cropping cycles

// Let's plot and compare the fitted time series

// Farm 1: Single cropping

// Farm 2: Multiple cropping

var chart = ui.Chart.image.seriesByRegion({

imageCollection: fittedHarmonic.select('fitted'),

regions: farms,

reducer: ee.Reducer.mean(),

scale: 10

}).setSeriesNames(['farm1', 'farm2']).setOptions({

lineWidth: 1,