End-to-End Google Earth Engine (Full Course)

A hands-on introduction to applied remote sensing using Google Earth Engine.

Ujaval Gandhi

- Introduction

- Sign-up for Google Earth Engine

- Complete the Class Pre-Work

- Get the Course Videos

- Get the Course Materials

- Module 1: Earth Engine Basics

- Module 2: Earth Engine Intermediate

- Module 3: Supervised Classification

- Module 4: Change Detection

- Module 5: Earth Engine Apps

- Module 6: Google Earth Engine Python API

- Supplement

- Guided Projects

- Learning Resources

- Useful Public Repositories

- Debugging Errors and Scaling Your Analysis

- Data Credits

- References

- License

- Citing and Referencing

Introduction

Google Earth Engine is a cloud-based platform that enables large-scale processing of satellite imagery to detect changes, map trends, and quantify differences on the Earth’s surface. This course covers the full range of topics in Earth Engine to give the participants practical skills to master the platform and implement their remote sensing projects.

Sign-up for Google Earth Engine

If you already have a Google Earth Engine account, you can skip this step.

Visit our GEE Sign-Up Guide for step-by-step instructions.

Complete the Class Pre-Work

This class needs about 2 hours of pre-work. Please watch the following videos to get a good understanding of remote sensing and how Earth Engine works.

- Introduction to Remote Sensing: This video introduces the remote sensing concepts, terminology and techniques.

- Introduction to Google Earth Engine: This video gives a broad overview of Google Earth Engine with selected case studies and applications. The video also covers the Earth Engine architecture and how it is different from traditional remote sensing software.

Get the Course Videos

The course is accompanied by a set of videos covering all the modules. These videos are recorded from our live instructor-led classes and are edited to make them easier to consume for self-study. We have 2 versions of the videos:

YouTube

We have created a YouTube Playlist with separate videos for each module to enable effective online learning. Access the YouTube Playlist ↗

Vimeo

We are also making the module videos available on Vimeo. These videos can be downloaded for offline learning. Access the Vimeo Playlist ↗

Get the Course Materials

The course material and exercises are in the form of Earth Engine scripts shared via a code repository.

- Click this link to open Google Earth Engine code editor and add the repository to your account.

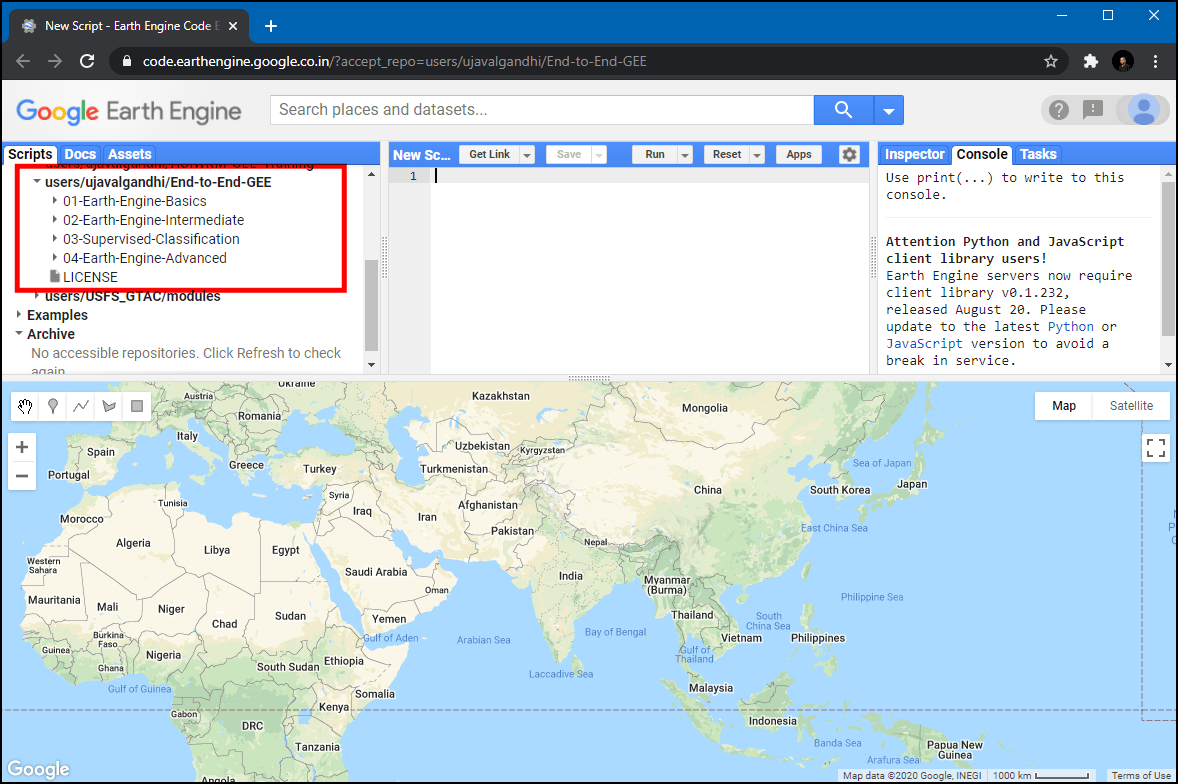

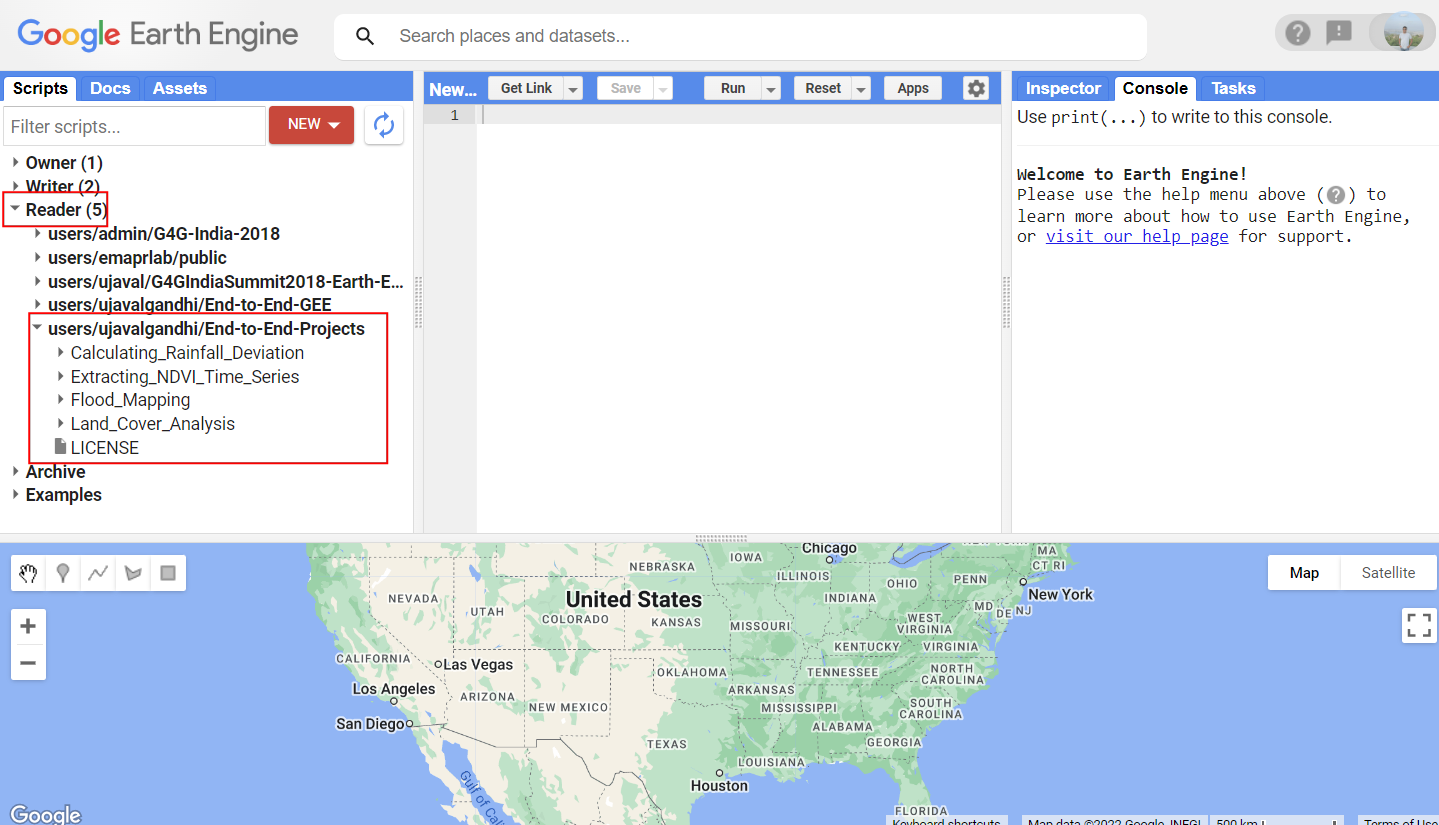

- If successful, you will have a new repository named users/ujavalgandhi/End-to-End-GEE in the Scripts tab in the Reader section.

- Verify that your code editor looks like the screenshot below.

Code Editor After Adding the Class Repository

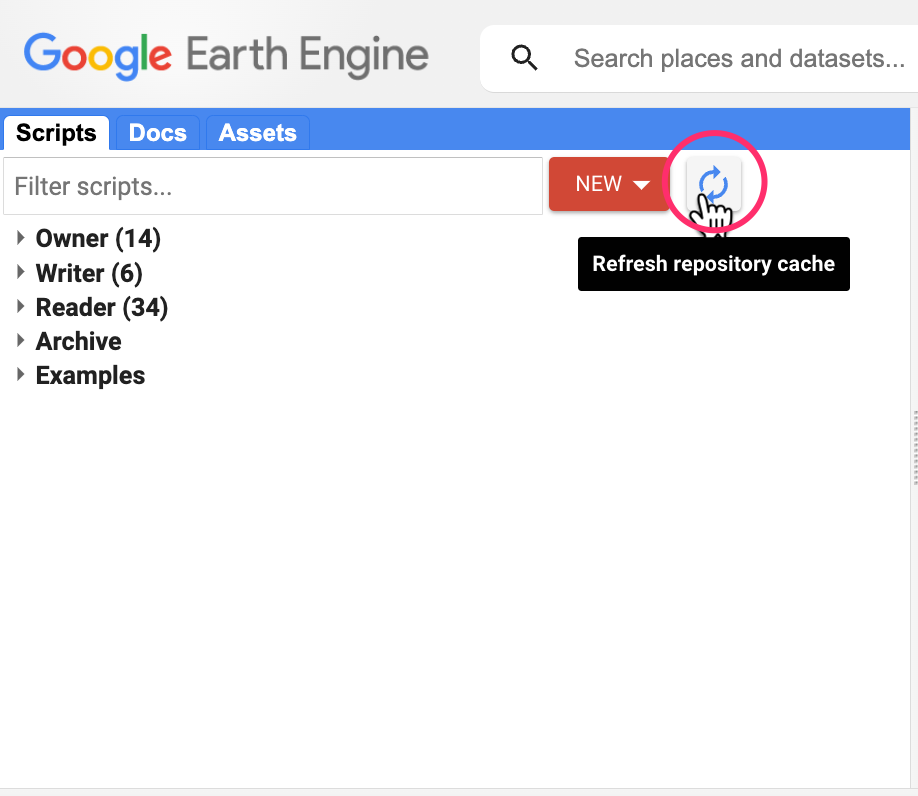

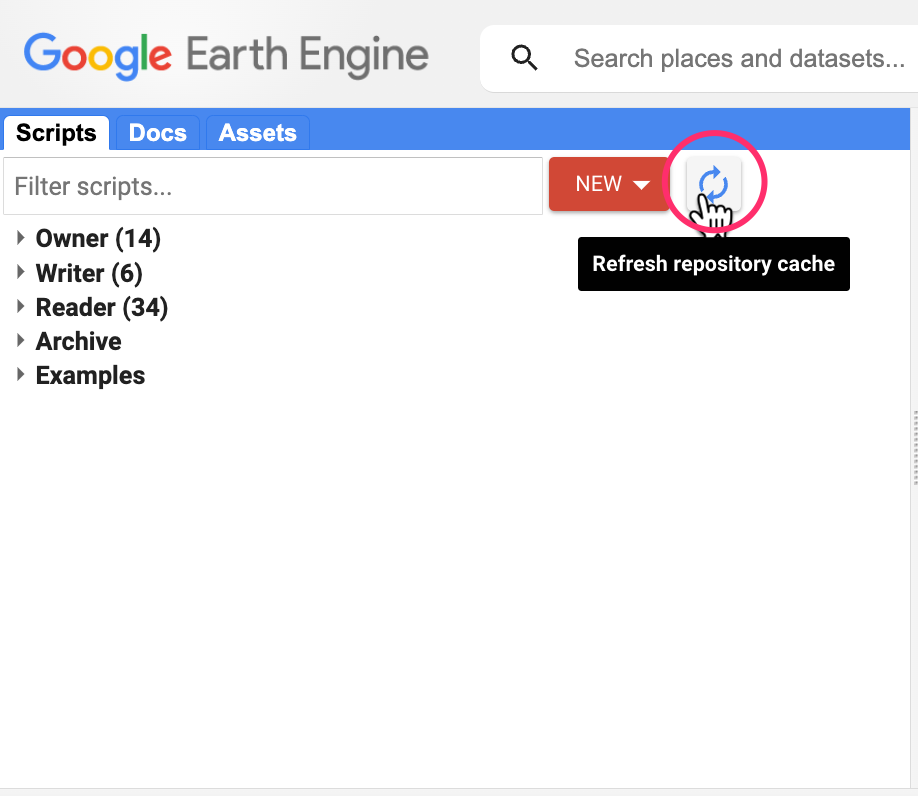

If you do not see the repository in the Reader section, click the Refresh repository cache button in your Scripts tab and it will show up.

Refresh repository cache

There are several slide decks containing useful information and references. You can access all the presentations used in the course from the links below.

- Introduction and course overview [Presentation ↗]

- Map/Reduce Programming Concepts [Presentation ↗]

- Introduction to Machine Learning & Supervised Classification [Presentation ↗]

- Introduction to Change Detection [Presentation ↗]

- Introduction to Earth Engine Apps [Presentation ↗]

- Introduction to Google Earth Engine Python API [Presentation ↗]

Module 1: Earth Engine Basics

Module 1 is designed to give you basic skills to be able to find datasets you need for your project, filter them to your region of interest, apply basic processing and export the results. Mastering this will allow you to start using Earth Engine for your project quickly and save a lot of time pre-processing the data.

01. Hello World

This script introduces the basic JavaScript syntax, and the video covers the programming concepts you need to learn when using Earth Engine. To learn more, visit the Introduction to JavaScript for Earth Engine section of the Earth Engine User Guide.

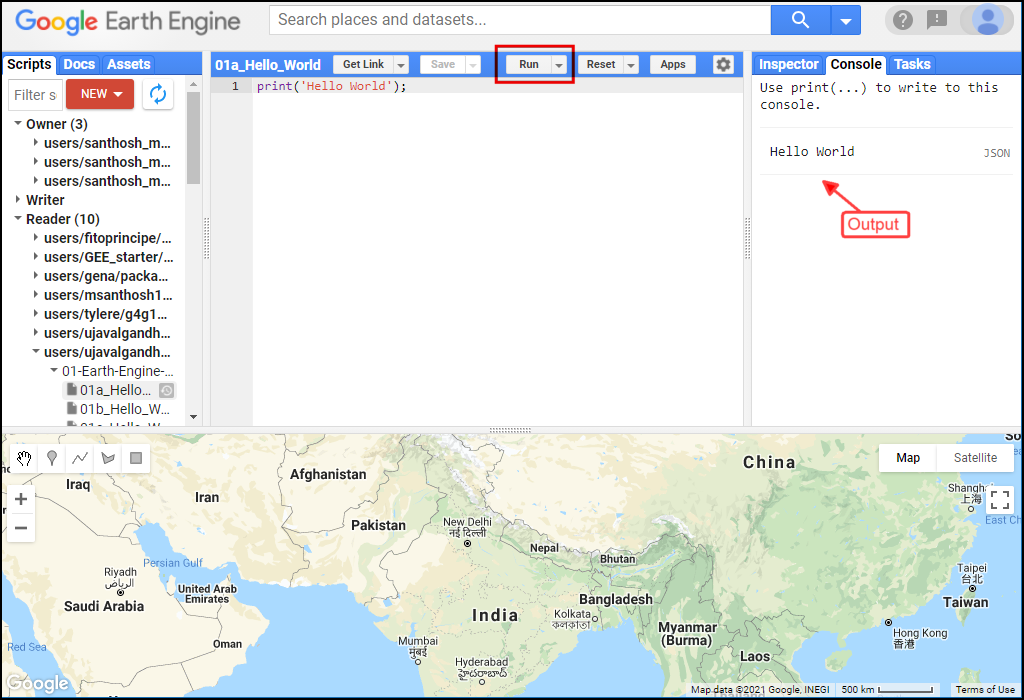

The Code Editor is an Integrated Development Environment (IDE) for the Earth Engine JavaScript API. It offers an easy way to type, debug, run and manage code. Type the code below and click Run to execute it and see the output in the Console tab.

Tip: You can use the keyboard shortcut Ctrl+Enter to run the code in the Code Editor

Hello World

print('Hello World');

// Variables

var city = 'Bengaluru';

var country = 'India';

print(city, country);

var population = 8400000;

print(population);

// List

var majorCities = ['Mumbai', 'Delhi', 'Chennai', 'Kolkata'];

print(majorCities);

// Dictionary

var cityData = {

'city': city,

'population': 8400000,

'elevation': 930

};

print(cityData);

// Function

var greet = function(name) {

return 'Hello ' + name;

};

print(greet('World'));

// This is a comment

Exercise

Saving Your Work

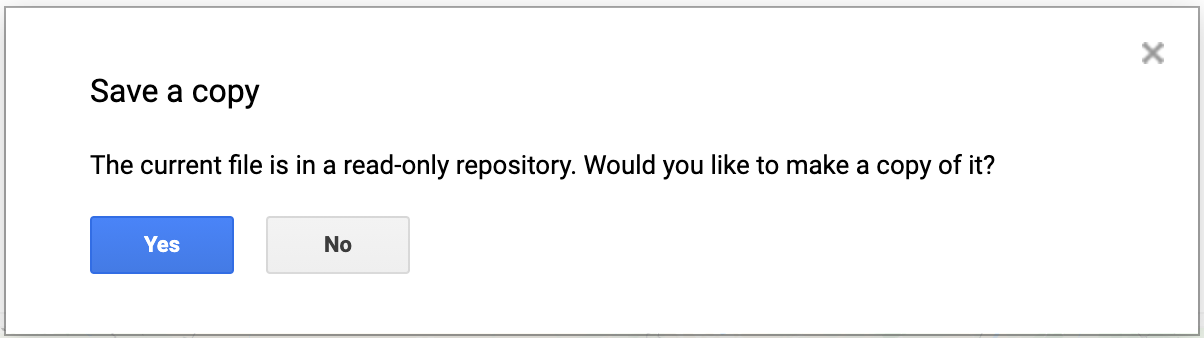

When you modify any script from the course repository, you may want to save a copy for yourself. If you try to click the Save button, you will get an error message like the one below.

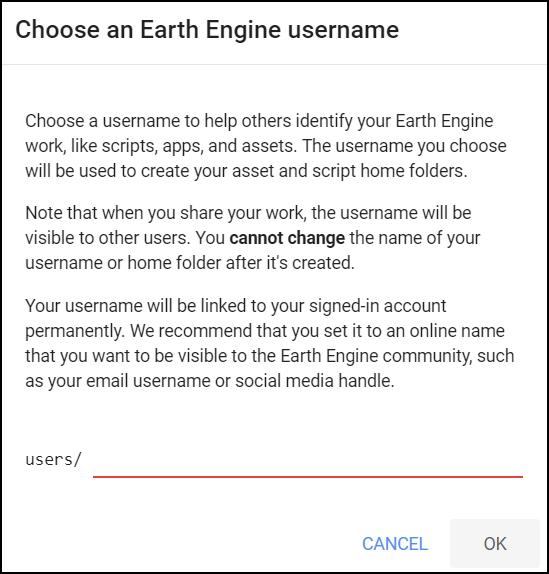

This is because the shared class repository is a read-only repository. You can click Yes to save a copy in your repository. If this is the first time you are using Earth Engine, you will be prompted to choose an Earth Engine username. Choose the name carefully, as it cannot be changed once created.

After entering your username, your home folder will be created. After that, you will be prompted to create a new repository. A repository can help you organize and share code. Your account can have multiple repositories and each repository can have multiple scripts inside it. To get started, you can create a repository named default. Finally, you will be able to save the script.

02. Working with Image Collections

Most datasets in Earth Engine come as a ImageCollection.

An ImageCollection is a dataset that consists of images takes at

different time and locations - usually from the same satellite or data

provider. You can load a collection by searching the Earth Engine

Data Catalog for the ImageCollection ID. Search for the

Sentinel-2 Level 1C dataset and you will find its id

COPERNICUS/S2_SR. Visit the Sentinel-2,

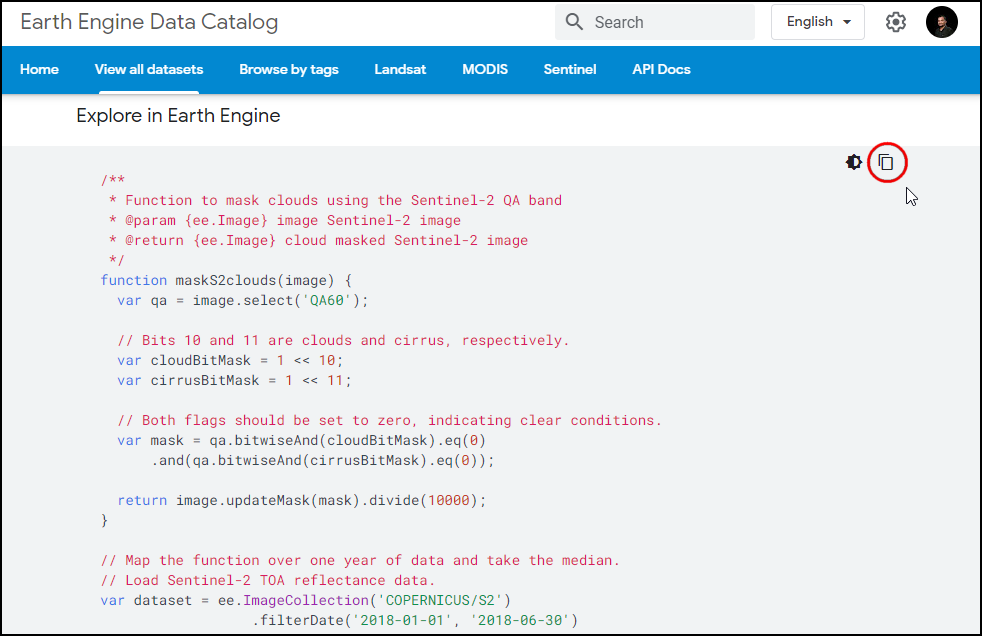

Level 1C page and see Explore in Earth Engine section to

find the code snippet to load and visualize the collection. This snippet

is a great starting point for your work with this dataset. Click the

Copy Code Sample button and paste the code into the

code editor. Click Run and you will see the image tiles load in

the map.

In the code snippet, you will see a function Map.setCenter() which sets the viewport to a specific location and zoom level. The function takes the X coordinate (longitude), Y coordinate (latitude) and Zoom Level parameters. Replace the X and Y coordinates with the coordinates of your city and click Run to see the images of your city.

Exercise

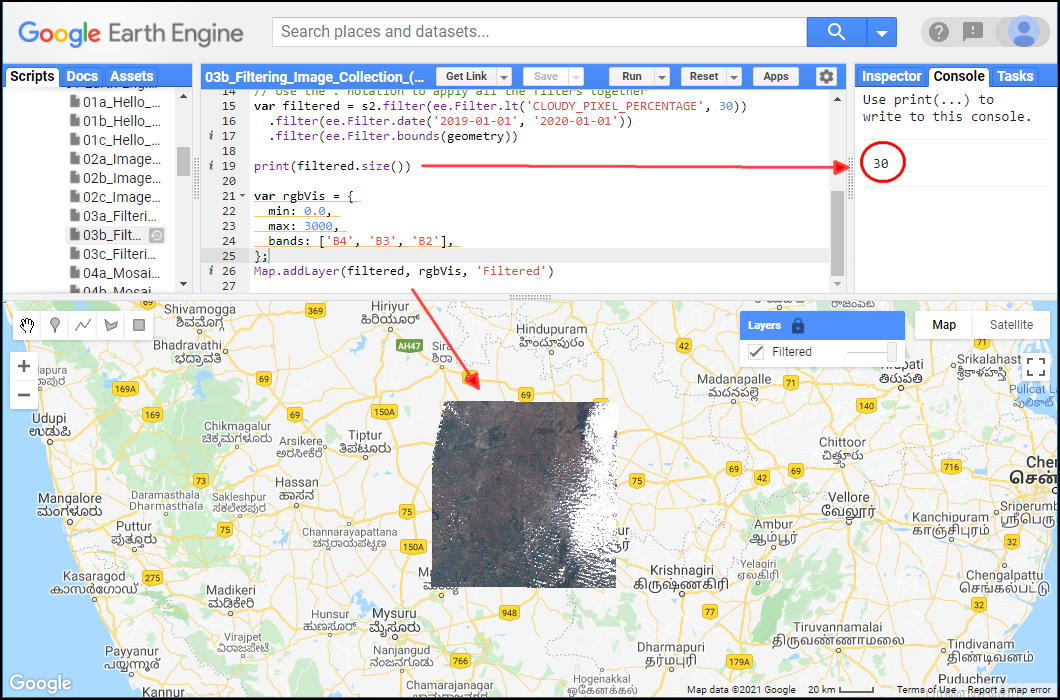

03. Filtering Image Collections

The collection contains all imagery ever collected by the sensor. Entire collections are rarely useful on their own; most applications require a subset of the images. We use filters to select the appropriate images. There are many types of filter functions; look at the ee.Filter... module to see all available filters. Select a filter and then run the filter() function with the filter parameters.

We will learn about 3 main types of filtering techniques:

- Filter by metadata: You can apply a filter on the image metadata using filters such as ee.Filter.eq(), ee.Filter.lt() etc. You can filter by PATH/ROW values, Orbit number, Cloud cover etc.

- Filter by date: You can select images in a particular date range using filters such as ee.Filter.date().

- Filter by location: You can select the subset of images with a bounding box, location or geometry using ee.Filter.bounds(). You can also use the drawing tools to draw a geometry for filtering.

After applying the filters, you can use the size() function to check how many images match the filters.
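The three filter types can be mimicked on an ordinary JavaScript array to see what each one keeps. This is a plain-JavaScript sketch, not Earth Engine code: the image records, cloud values and footprints are made up, footprints are simplified to bounding boxes, and the date range is treated as start-inclusive and end-exclusive, which is why '2019-01-01' to '2020-01-01' covers the year 2019.

```javascript
// Each "image" is just a metadata record (hypothetical values).
// bbox is [minLon, minLat, maxLon, maxLat].
const collection = [
  {id: 'img1', CLOUDY_PIXEL_PERCENTAGE: 12, date: '2019-03-10', bbox: [77.0, 12.5, 78.0, 13.5]},
  {id: 'img2', CLOUDY_PIXEL_PERCENTAGE: 65, date: '2019-07-02', bbox: [77.0, 12.5, 78.0, 13.5]},
  {id: 'img3', CLOUDY_PIXEL_PERCENTAGE: 5, date: '2018-12-31', bbox: [77.0, 12.5, 78.0, 13.5]},
  {id: 'img4', CLOUDY_PIXEL_PERCENTAGE: 8, date: '2019-11-20', bbox: [80.0, 12.5, 81.0, 13.5]},
];

// Filter by metadata: keep records whose property is less than a value
const lt = (prop, value) => (img) => img[prop] < value;

// Filter by date: ISO date strings compare correctly as text
const dateRange = (start, end) => (img) => img.date >= start && img.date < end;

// Filter by location: keep images whose footprint contains the point
const bounds = ([lon, lat]) => (img) =>
  lon >= img.bbox[0] && lon <= img.bbox[2] && lat >= img.bbox[1] && lat <= img.bbox[3];

const point = [77.604, 12.953];

// Chain the filters, like s2.filter(...).filter(...).filter(...)
const filtered = collection
  .filter(lt('CLOUDY_PIXEL_PERCENTAGE', 30))
  .filter(dateRange('2019-01-01', '2020-01-01'))
  .filter(bounds(point));

console.log(filtered.length); // 1, the equivalent of filtered.size()
console.log(filtered.map((img) => img.id)); // only img1 passes all 3 filters
```

img2 fails the cloud filter, img3 falls outside the date range and img4 does not cover the point.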

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

Map.centerObject(geometry, 10);

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

// Filter by metadata

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30));

// Filter by date

var filtered = s2.filter(ee.Filter.date('2019-01-01', '2020-01-01'));

// Filter by location

var filtered = s2.filter(ee.Filter.bounds(geometry));

// Let's apply all the 3 filters together on the collection

// First apply metadata filter

var filtered1 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30));

// Apply date filter on the results

var filtered2 = filtered1.filter(

ee.Filter.date('2019-01-01', '2020-01-01'));

// Lastly apply the location filter

var filtered3 = filtered2.filter(ee.Filter.bounds(geometry));

// Instead of applying filters one after the other, we can 'chain' them

// Use the . notation to apply all the filters together

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

print(filtered.size());

Exercise

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

print(filtered.size());

// Exercise

// Delete the 'geometry' variable

// Add a point at your chosen location

// Change the filter to find images from January 2023

// Note: If you are in a very cloudy region,

// make sure to adjust the CLOUDY_PIXEL_PERCENTAGE

04. Creating Mosaics and Composites from ImageCollections

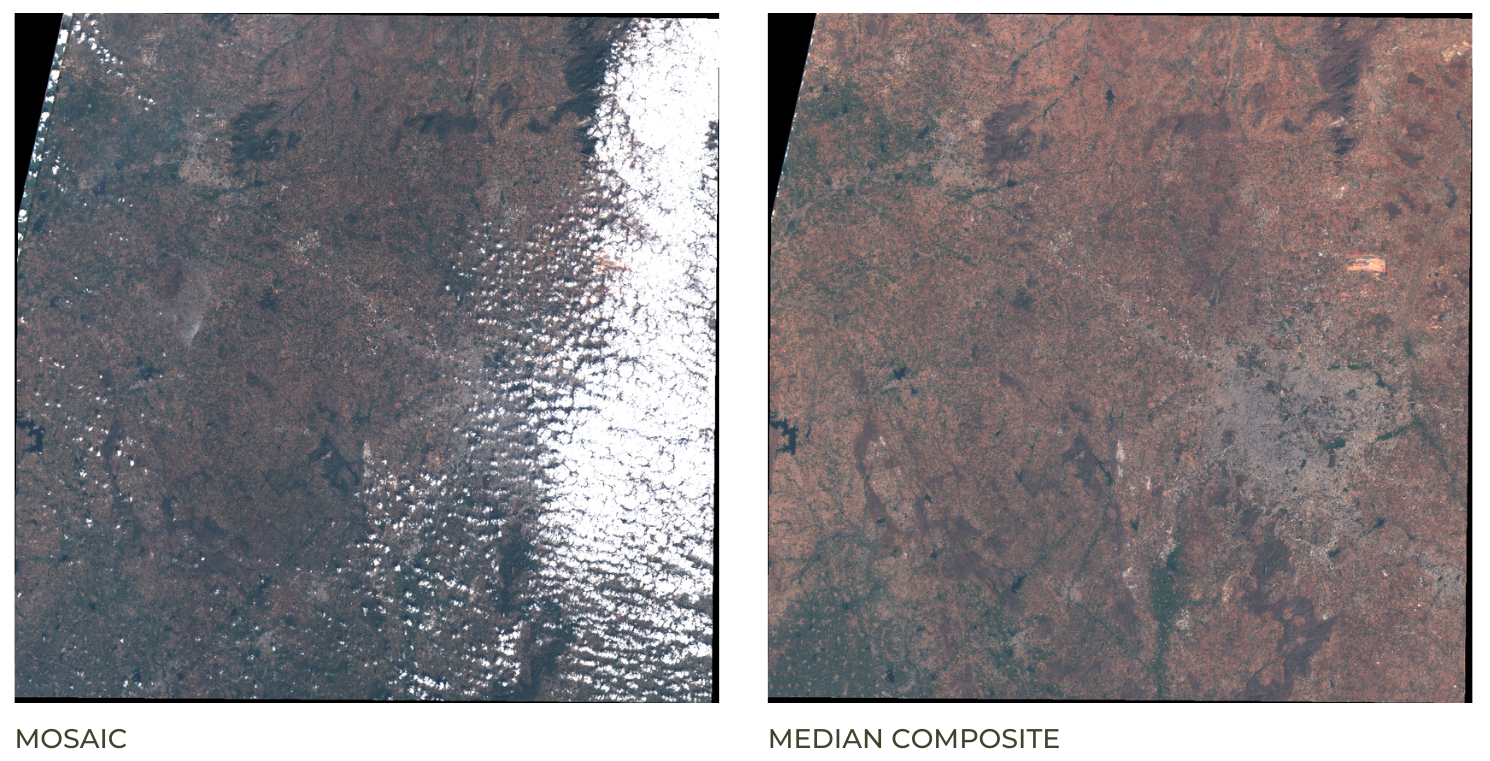

The default order of the collection is by date. So when you display the collection, it implicitly creates a mosaic with the latest pixels on top. You can call .mosaic() on an ImageCollection to create a mosaic image from the pixels at the top.

We can also create a composite image by applying selection criteria to each pixel from all pixels in the stack. Here we use the median() function to create a composite where each pixel value is the median of all pixels from the stack.

Tip: If you need to create a mosaic where the images are in a specific order, you can use the .sort() function to sort your collection by a property first.
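To see how a mosaic and a median composite differ at a single pixel, here is a plain-JavaScript sketch (not Earth Engine code). It takes a hypothetical stack of values for one pixel, ordered oldest to newest, with null standing in for a masked pixel; the bright value 3400 plays the role of a cloud in the newest unmasked image.

```javascript
// Values of one pixel across a sorted stack, oldest -> newest.
// null marks a masked pixel; 3400 is a bright, cloud-like value.
const stack = [1200, 1100, 1300, 1250, 3400, null];

// mosaic(): the newest unmasked value ends up on top
function mosaicPixel(values) {
  for (let i = values.length - 1; i >= 0; i--) {
    if (values[i] !== null) return values[i];
  }
  return null; // every image is masked at this pixel
}

// median(): the middle of all unmasked values
function medianPixel(values) {
  const valid = values.filter((v) => v !== null).sort((a, b) => a - b);
  if (valid.length === 0) return null;
  const mid = Math.floor(valid.length / 2);
  // For an even count, this sketch averages the two middle values
  return valid.length % 2 === 1 ? valid[mid] : (valid[mid - 1] + valid[mid]) / 2;
}

console.log(mosaicPixel(stack)); // 3400
console.log(medianPixel(stack)); // 1250
```

The mosaic picks up the cloudy value because it is on top, while the median ignores the outlier. Earth Engine's median reducer may handle an even number of values differently from this sketch.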

Mosaic vs. Composite

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var mosaic = filtered.mosaic();

var medianComposite = filtered.median();

Map.centerObject(geometry, 10);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.addLayer(filtered, rgbVis, 'Filtered Collection');

Map.addLayer(mosaic, rgbVis, 'Mosaic');

Map.addLayer(medianComposite, rgbVis, 'Median Composite');

Exercise

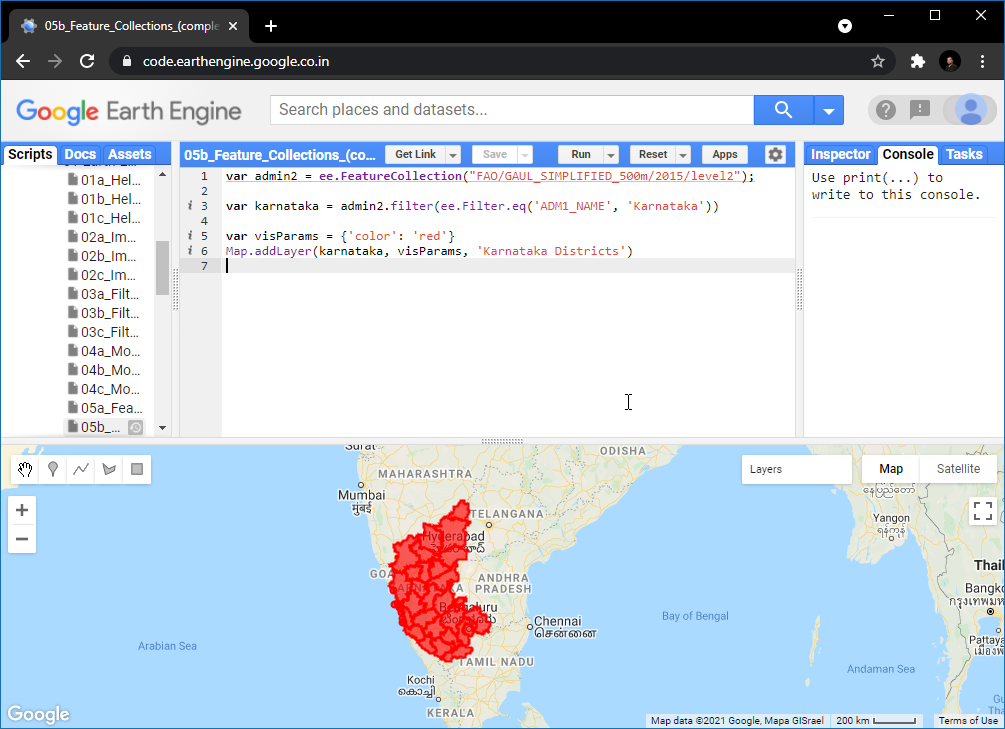

05. Working with Feature Collections

Feature Collections are similar to Image Collections - but they contain Features, not images. They are equivalent to Vector Layers in a GIS. We can load, filter and display Feature Collections using similar techniques that we have learned so far.

Search for GAUL Second Level Administrative Boundaries and load the collection. This is a global collection that contains all Admin2 boundaries. We can apply a filter using the ADM1_NAME property to get all Admin2 boundaries (i.e. Districts) from a state.
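Admin2 names are not unique in every country, which is why the scripts in this module filter on the Admin1 name as well. A plain-JavaScript sketch with hypothetical state and district names shows the effect of combining two equality filters, in the spirit of ee.Filter.eq().

```javascript
// Features reduced to their properties (hypothetical names)
const admin2 = [
  {ADM1_NAME: 'State A', ADM2_NAME: 'Riverside'},
  {ADM1_NAME: 'State A', ADM2_NAME: 'Hilltop'},
  {ADM1_NAME: 'State B', ADM2_NAME: 'Riverside'},
];

// Keep features whose property equals the given value
const eq = (prop, value) => (feature) => feature[prop] === value;

// Filtering on the Admin2 name alone matches districts in two states
const byName = admin2.filter(eq('ADM2_NAME', 'Riverside'));

// Adding an Admin1 filter narrows it down to a single district
const byNameAndState = admin2
  .filter(eq('ADM2_NAME', 'Riverside'))
  .filter(eq('ADM1_NAME', 'State A'));

console.log(byName.length); // 2
console.log(byNameAndState.length); // 1
```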

// Use the GAUL 2015 dataset from the GEE Data Catalog

var admin2 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level2');

var filtered = admin2.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'));

// Alternatively, use the newer GAUL 2024 dataset from the community catalog

// https://gee-community-catalog.org/projects/gaul/

var admin2 = ee.FeatureCollection("projects/sat-io/open-datasets/FAO/GAUL/GAUL_2024_L2");

var filtered = admin2.filter(ee.Filter.eq('gaul1_name', 'Karnataka'));

var visParams = {'color': 'red'};

Map.addLayer(filtered, visParams, 'Selected Districts');

Exercise

var admin2 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level2');

Map.addLayer(admin2, {color: 'grey'}, 'All Admin2 Polygons');

// Exercise

// Apply filters to select your chosen Admin2 region

// Display the results in 'red' color

// Hint1: Switch to the 'Inspector' tab and click on any

// polygon to know its properties and their values

// Hint2: Many countries do not have unique names for

// Admin2 regions. Make sure to apply a filter to select

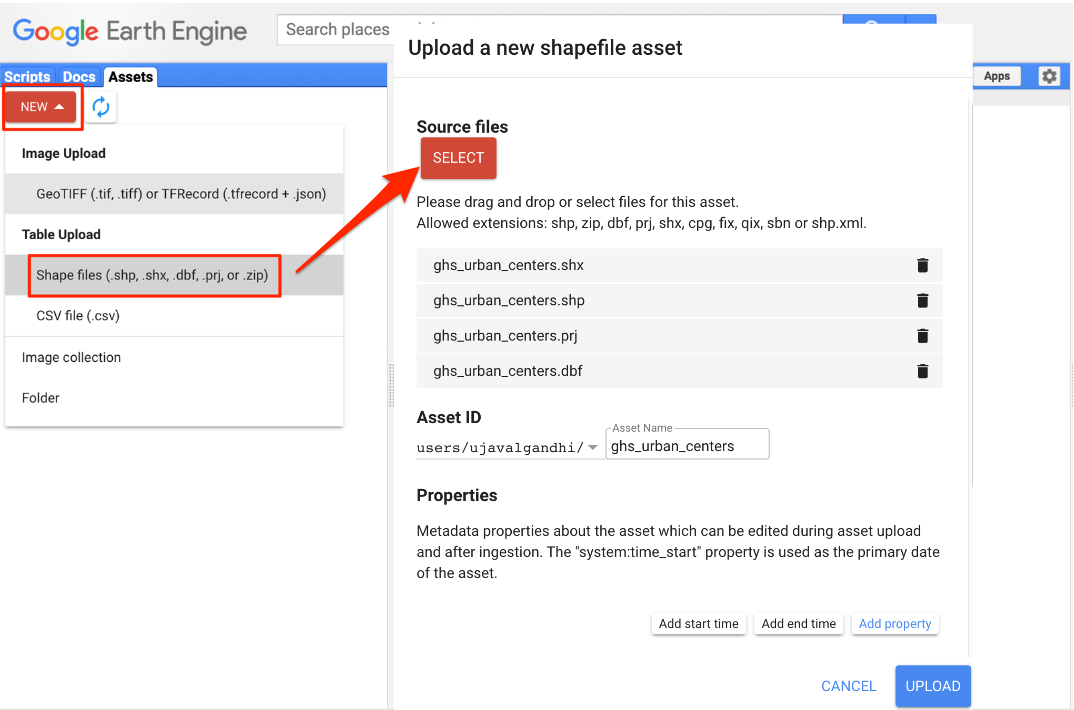

// the Admin1 region that contains your chosen Admin2 region06. Importing Data

You can import vector or raster data into Earth Engine. We will now import a shapefile of Urban Centres from JRC’s GHS Urban Centre Database (GHS-UCDB). Unzip ghs_urban_centers.zip into a folder on your computer. In the Code Editor, go to Assets → New → Table Upload → Shape Files. Select the .shp, .shx, .dbf and .prj files. Enter ghs_urban_centers as the Asset Name and click Upload. Once the upload and ingestion finish, you will have a new asset in the Assets tab. The shapefile is imported as a Feature Collection in Earth Engine. Select the ghs_urban_centers asset and click Import. You can then visualize the imported data.

Importing a Shapefile

// Let's import some data to Earth Engine

// Upload the 'GHS Urban Centers' database from JRC

// https://ghsl.jrc.ec.europa.eu/ghs_stat_ucdb2015mt_r2019a.php

// Download the shapefile from https://bit.ly/ghs-ucdb-shapefile

// Unzip and upload

// Import the collection

var urban = ee.FeatureCollection('users/ujavalgandhi/e2e/ghs_urban_centers');

// Visualize the collection

Map.addLayer(urban, {color: 'blue'}, 'Urban Areas');

Exercise

var urban = ee.FeatureCollection('users/ujavalgandhi/e2e/ghs_urban_centers');

print(urban.first());

// Exercise

// Apply a filter to select only large urban centers

// in your country and display it on the map.

// Select all urban centers in your country with

// a population greater than 1000000

// Hint1: Use the property 'CTR_MN_NM' containing country names

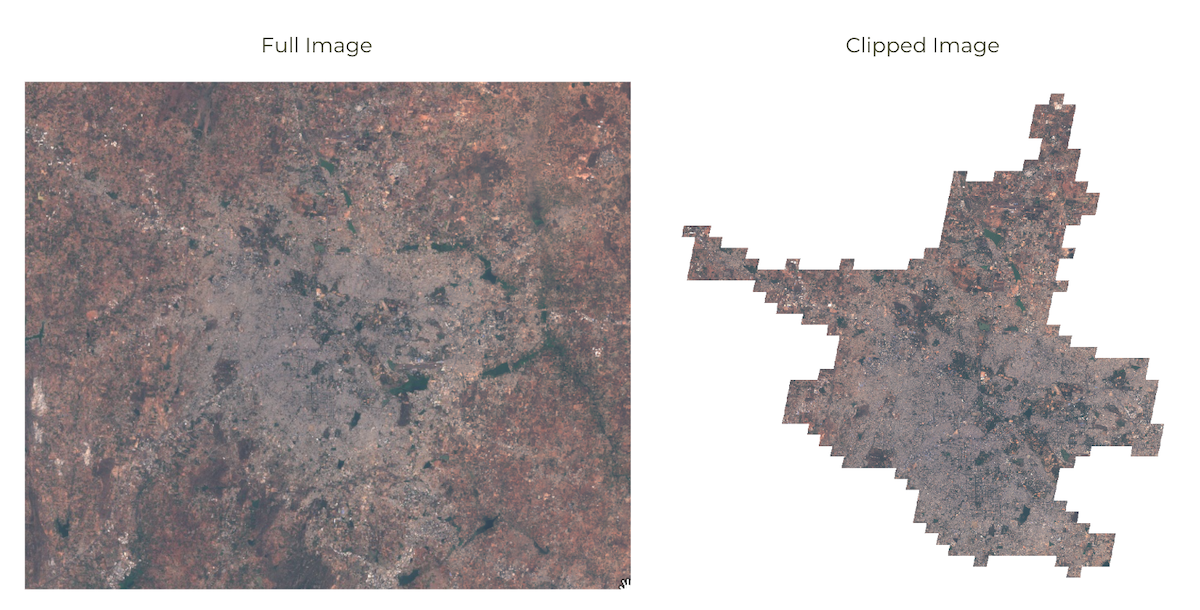

// Hint2: Use the property 'P15' containing 2015 Population07. Clipping Images

It is often desirable to clip the images to your area of interest. You can use the clip() function to mask out an image using a geometry.

In desktop software, clipping is useful to remove unnecessary portions of a large image and save computation time. In Earth Engine, however, clipping can actually increase the computation time. As described in the Earth Engine Coding Best Practices guide, avoid clipping images, or do it at the end of your script.
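Conceptually, clip() keeps the pixels inside the geometry and masks the rest. The plain-JavaScript sketch below assumes a pixel is kept when its center falls inside the polygon, tested with the standard ray-casting rule; this is a simplification for illustration, and Earth Engine's handling of edge pixels may differ. The 3x3 image and the triangle are hypothetical, in pixel-grid units with row 0 at the top.

```javascript
// Ray casting: a point is inside if a horizontal ray from it
// crosses the polygon boundary an odd number of times
function insidePolygon([x, y], polygon) {
  let inside = false;
  for (let i = 0, j = polygon.length - 1; i < polygon.length; j = i++) {
    const [xi, yi] = polygon[i];
    const [xj, yj] = polygon[j];
    const crosses = (yi > y) !== (yj > y) &&
      x < ((xj - xi) * (y - yi)) / (yj - yi) + xi;
    if (crosses) inside = !inside;
  }
  return inside;
}

// Mask (set to null) every pixel whose center is outside the polygon
function clip(image, polygon) {
  return image.map((row, r) =>
    row.map((value, c) => (insidePolygon([c + 0.5, r + 0.5], polygon) ? value : null)));
}

const image = [
  [1, 2, 3],
  [4, 5, 6],
  [7, 8, 9],
];

// Triangle covering the upper-left part of the grid
const triangle = [[0, 0], [3.2, 0], [0, 3.2]];

console.log(clip(image, triangle));
// rows: [1, 2, 3], [4, 5, null], [7, null, null]
```

Note that the clipped image keeps the same grid; only the mask changes, which is why clipping alone does not shrink the data the way cropping a file on disk would.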

Original vs. Clipped Image

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var urban = ee.FeatureCollection('users/ujavalgandhi/e2e/ghs_urban_centers');

// Find the name of the urban centre

// by adding the layer to the map and using Inspector.

var filtered = urban

.filter(ee.Filter.eq('UC_NM_MN', 'Bengaluru'))

.filter(ee.Filter.eq('CTR_MN_NM', 'India'));

var geometry = filtered.geometry();

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filtered.median();

var clipped = image.clip(geometry);

Map.centerObject(geometry);

Map.addLayer(clipped, rgbVis, 'Clipped');

Exercise

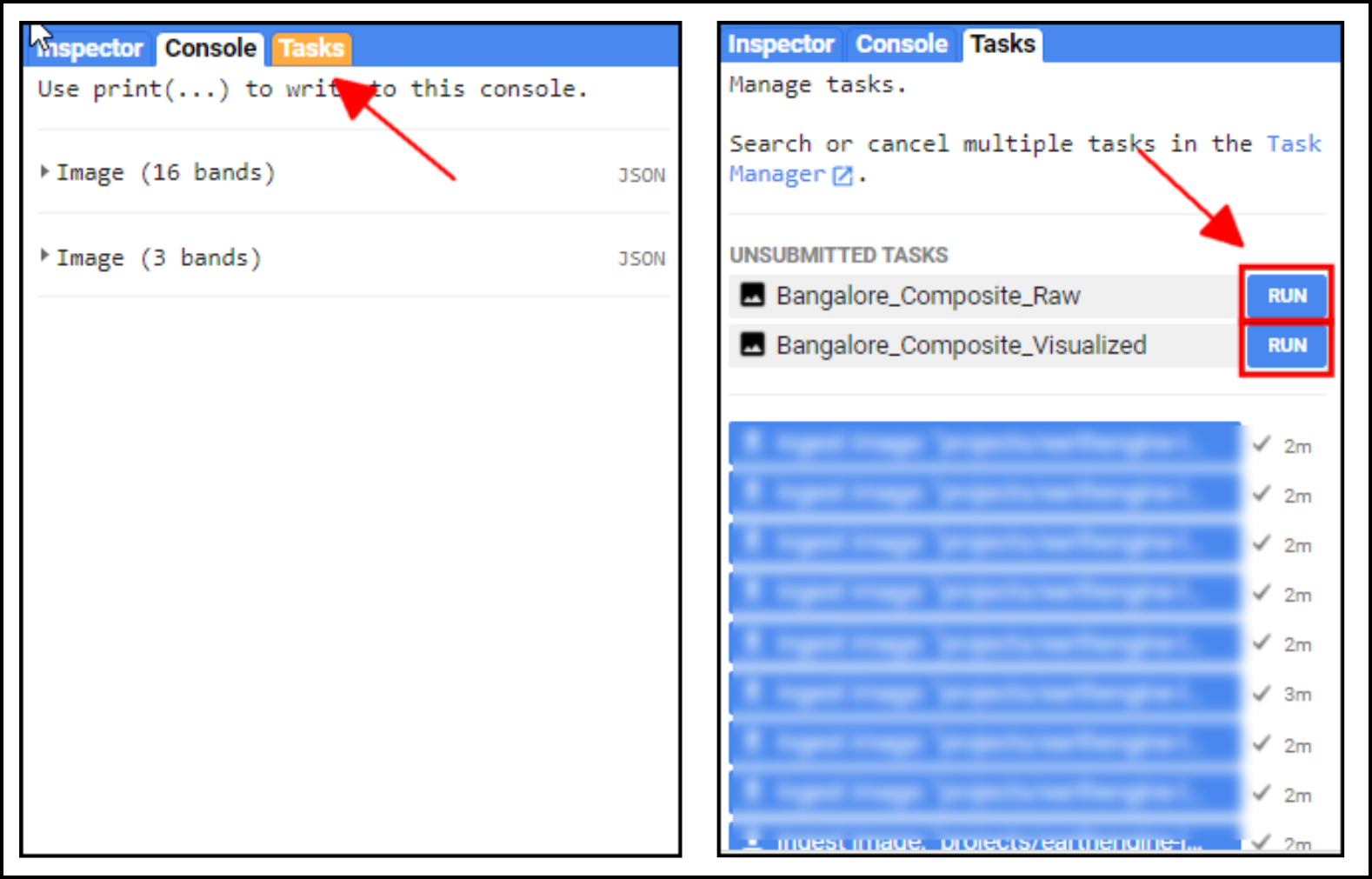

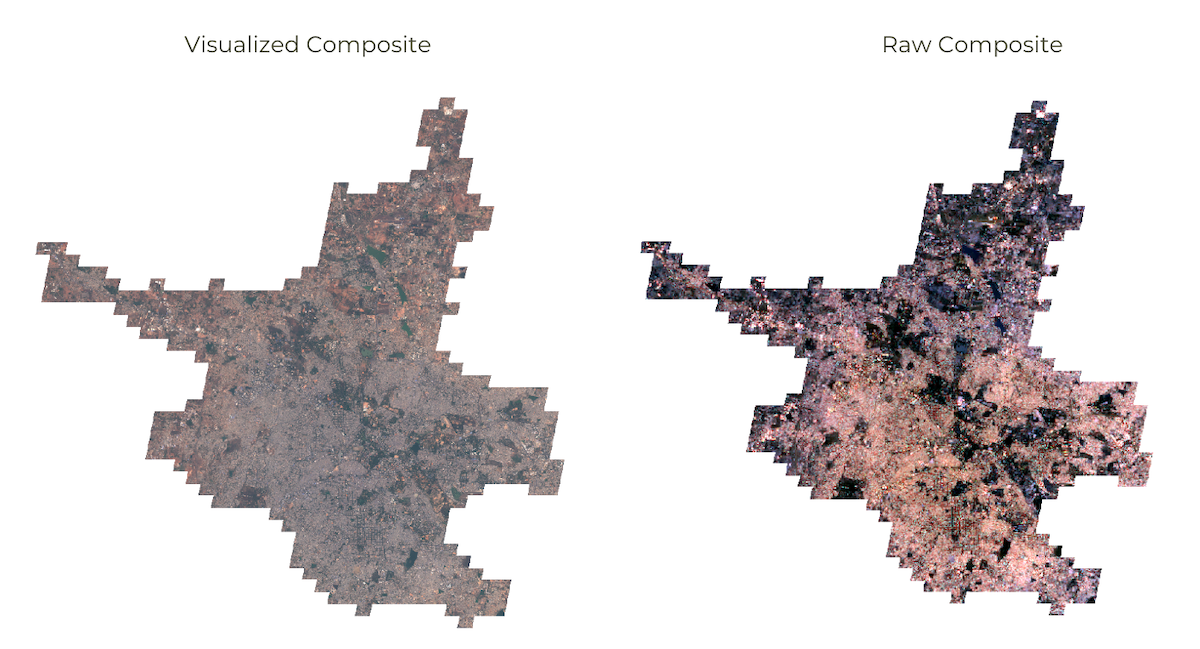

08. Exporting Data

Earth Engine allows for exporting both vector and raster data to be used in an external program. Vector data can be exported as a CSV or a Shapefile, while rasters can be exported as GeoTIFF files. We will now export the Sentinel-2 Composite as a GeoTIFF file.
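Before exporting, it helps to estimate how many pixels the output grid will have, since the scale parameter sets the pixel size in meters and maxPixels caps the pixel count. This plain-JavaScript sketch assumes a rectangular region measured in meters; the region sizes are made-up examples, and 1e9 is the maxPixels value used by the export calls in this section.

```javascript
// Rough export-size estimate (plain JavaScript, not Earth Engine code).
// Pixels along each side = extent / scale, rounded up.
function pixelCount(widthMeters, heightMeters, scaleMeters) {
  const cols = Math.ceil(widthMeters / scaleMeters);
  const rows = Math.ceil(heightMeters / scaleMeters);
  return cols * rows;
}

const maxPixels = 1e9; // value passed to Export.image.toDrive() in this section

// Hypothetical 50 km x 50 km region at 10 m and 30 m
const at10m = pixelCount(50000, 50000, 10);
const at30m = pixelCount(50000, 50000, 30);

// Hypothetical 400 km x 400 km region at 10 m
const large = pixelCount(400000, 400000, 10);

console.log(at10m, at10m <= maxPixels); // 25000000 true
console.log(at30m, at30m <= maxPixels); // 2778889 true
console.log(large, large <= maxPixels); // 1600000000 false
```

Coarsening the scale from 10 m to 30 m cuts the pixel count roughly ninefold, which is often the simplest way to fit a large region under the limit.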

Tip: Code Editor supports autocompletion of API functions using the combination Ctrl+Space. Type a few characters of a function and press Ctrl+Space to see autocomplete suggestions. You can also use the same key combination to fill all parameters of the function automatically.

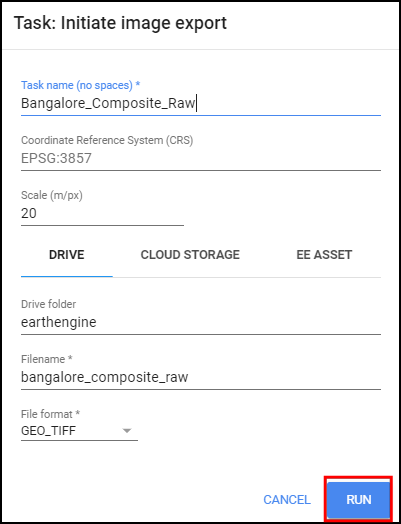

Once you run this script, the Tasks tab will be highlighted. Switch to the tab and you will see the tasks waiting. Click Run next to each task to start the process.

On clicking the Run button, you will see a confirmation dialog. Verify the settings and click Run to start the export.

Once the export finishes, a GeoTIFF file for each export task will be added to your Google Drive in the specified folder. You can download the files and use them in GIS software.
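The export script in this section saves both a raw and a visualized composite. The visualized version is not suitable for analysis because a min/max stretch squeezes the original values into 8-bit display values. This plain-JavaScript sketch assumes the stretch maps [min, max] linearly onto 0 to 255 and clamps anything outside that range; Earth Engine's exact rounding may differ.

```javascript
// Min/max linear stretch to 8-bit display values (plain JavaScript sketch)
function stretch(value, min, max) {
  const scaled = ((value - min) / (max - min)) * 255;
  // Clamp to the displayable range, then round to an integer
  return Math.round(Math.min(255, Math.max(0, scaled)));
}

const rgbVis = {min: 0, max: 3000}; // same min/max as the course's rgbVis

console.log(stretch(1500, rgbVis.min, rgbVis.max)); // 128
console.log(stretch(1501, rgbVis.min, rgbVis.max)); // 128 (same as 1500)
console.log(stretch(3000, rgbVis.min, rgbVis.max)); // 255
console.log(stretch(4500, rgbVis.min, rgbVis.max)); // 255 (clamped)
```

Different raw values collapse onto the same display value, so the original pixel values cannot be recovered from the visualized export.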

Visualized vs. Raw Composite

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var urban = ee.FeatureCollection('users/ujavalgandhi/e2e/ghs_urban_centers');

var filtered = urban

.filter(ee.Filter.eq('UC_NM_MN', 'Bengaluru'))

.filter(ee.Filter.eq('CTR_MN_NM', 'India'));

var geometry = filtered.geometry();

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filtered.median();

var clipped = image.clip(geometry);

Map.centerObject(geometry);

Map.addLayer(clipped, rgbVis, 'Clipped');

var exportImage = clipped.select('B.*');

// Export raw image with original pixel values

Export.image.toDrive({

image: exportImage,

description: 'Bangalore_Composite_Raw',

folder: 'earthengine',

fileNamePrefix: 'bangalore_composite_raw',

region: geometry,

scale: 10,

maxPixels: 1e9

});

// Export visualized image as colorized RGB image

// Rather than exporting raw bands, we can export a rendered image

// The visualize() function applies the same visualization parameters

// used to display the layer on the map and returns a 3-band RGB image

// Note: Visualized images are not suitable for analysis

var visualized = clipped.visualize(rgbVis);

Export.image.toDrive({

image: visualized,

description: 'Bangalore_Composite_Visualized',

folder: 'earthengine',

fileNamePrefix: 'bangalore_composite_visualized',

region: geometry,

scale: 10,

maxPixels: 1e9

});

Exercise

Assignment 1

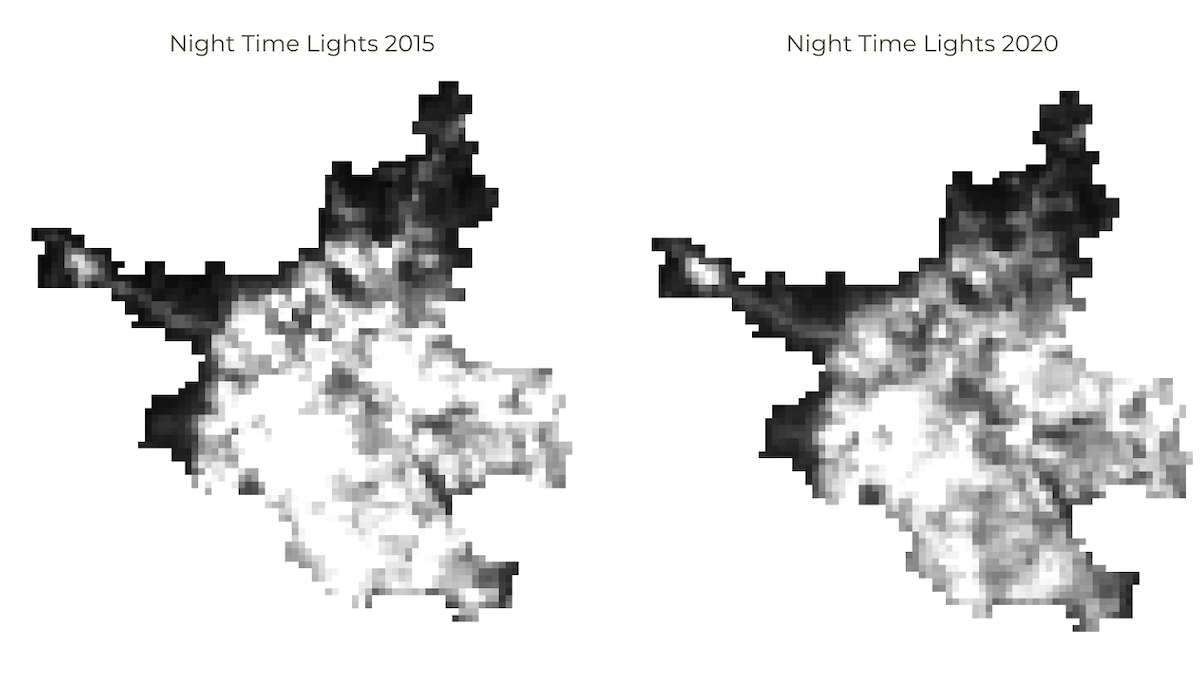

Load the Night Lights data for May 2015 and May 2020. Compare the imagery for your region and find the changes in the city due to the effects of COVID-19.

Assignment1 Expected Output

// Assignment

// Export the Night Lights images for May 2015 and May 2020

// Workflow:

// Load the VIIRS Nighttime Day/Night Band Composites collection

// Filter the collection to the date range

// Extract the 'avg_rad' band which represents the nighttime lights

// Clip the image to the geometry of your city

// Export the resulting image as a GeoTIFF file.

// Hint1:

// There are 2 VIIRS Nighttime Day/Night collections

// Use the one that corrects for stray light

// Hint2:

// The collection contains 1 global image per month

// After filtering for the month, there will be only 1 image in the collection

// You can use the following technique to extract that image

// var image = ee.Image(filtered.first())

Module 2: Earth Engine Intermediate

Module 2 builds on the basic Earth Engine skills you have gained. This module introduces the parallel programming concepts using Map/Reduce, which is key to using Earth Engine effectively for analyzing large volumes of data. You will learn how to use the Earth Engine API for calculating various spectral indices, do cloud masking and then use map/reduce to apply these computations to collections of imagery. You will also learn how to take long time-series of data and create charts.

01. Earth Engine Objects

This script introduces the basics of the Earth Engine API. When programming in Earth Engine, you must use the Earth Engine API so that your computations can use the Google Earth Engine servers. To learn more, visit Earth Engine Objects and Methods section of the Earth Engine User Guide.
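A toy model can help explain why the script below re-defines myFunction with ee.Number(number).add(1) instead of number + 1. The ServerNumber class here is hypothetical and is not part of the Earth Engine library: it only records operations and computes them when evaluate() is called, loosely mirroring how Earth Engine objects describe a computation that runs on the server. The plain JavaScript + operator knows nothing about such an object.

```javascript
// Toy model only: NOT the Earth Engine client library.
// Operations are recorded and computed later by evaluate().
class ServerNumber {
  constructor(op, args) {
    this.op = op;
    this.args = args;
  }
  static of(value) {
    return new ServerNumber('constant', [value]);
  }
  add(other) {
    // Record the operation instead of computing it now
    return new ServerNumber('add', [this, other]);
  }
  evaluate() {
    if (this.op === 'constant') return this.args[0];
    const [left, right] = this.args;
    const rightValue = right instanceof ServerNumber ? right.evaluate() : right;
    return left.evaluate() + rightValue;
  }
}

const n = ServerNumber.of(1);
const plusOne = n.add(1); // loosely like ee.Number(1).add(1)
console.log(plusOne.evaluate()); // 2

// The + operator converts the object to a string instead of adding
console.log(n + 1); // [object Object]1
```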

// Let's see how to take a list of numbers and add 1 to each element

var myList = ee.List.sequence(1, 10);

// Define a function that takes a number and adds 1 to it

var myFunction = function(number) {

return number + 1;

};

print(myFunction(1));

//Re-Define a function using Earth Engine API

var myFunction = function(number) {

return ee.Number(number).add(1);

};

// Map the function over the list

var newList = myList.map(myFunction);

print(newList);

// Extracting value from a list

var value = newList.get(0);

print(value);

// Casting

// Let's try to do some computation on the extracted value

//var newValue = value.add(1)

//print(newValue)

// You get an error because Earth Engine doesn't know the type of 'value'

// We need to cast it to appropriate type first

var value = ee.Number(value);

var newValue = value.add(1);

print(newValue);

// Dictionary

// Convert javascript objects to EE Objects

var data = {'city': 'Bengaluru', 'population': 8400000, 'elevation': 930};

var eeData = ee.Dictionary(data);

// Once converted, you can use the methods from the

// ee.Dictionary module

print(eeData.get('city'));

// Dates

// For any date computation, you should use ee.Date module

var date = ee.Date('2019-01-01');

var futureDate = date.advance(1, 'year');

print(futureDate);

As a general rule, you should always use Earth Engine API methods in your code. There is one exception where you will need to use a client-side JavaScript method: if you want to get the current time, the server doesn’t know your time, so you need to use a JavaScript method and cast the result to an Earth Engine object.

var now = Date.now();

print(now);

var now = ee.Date(now);

print(now);

Exercise

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var now = Date.now();

var now = ee.Date(now);

// Exercise

// Apply another filter to the collection below to filter images

// collected in the last 1-month

// Do not hard-code the dates, it should always show images

// from the past 1-month whenever you run the script

// Hint: Use ee.Date.advance() function

// to compute the date 1 month before now

var filtered = s2

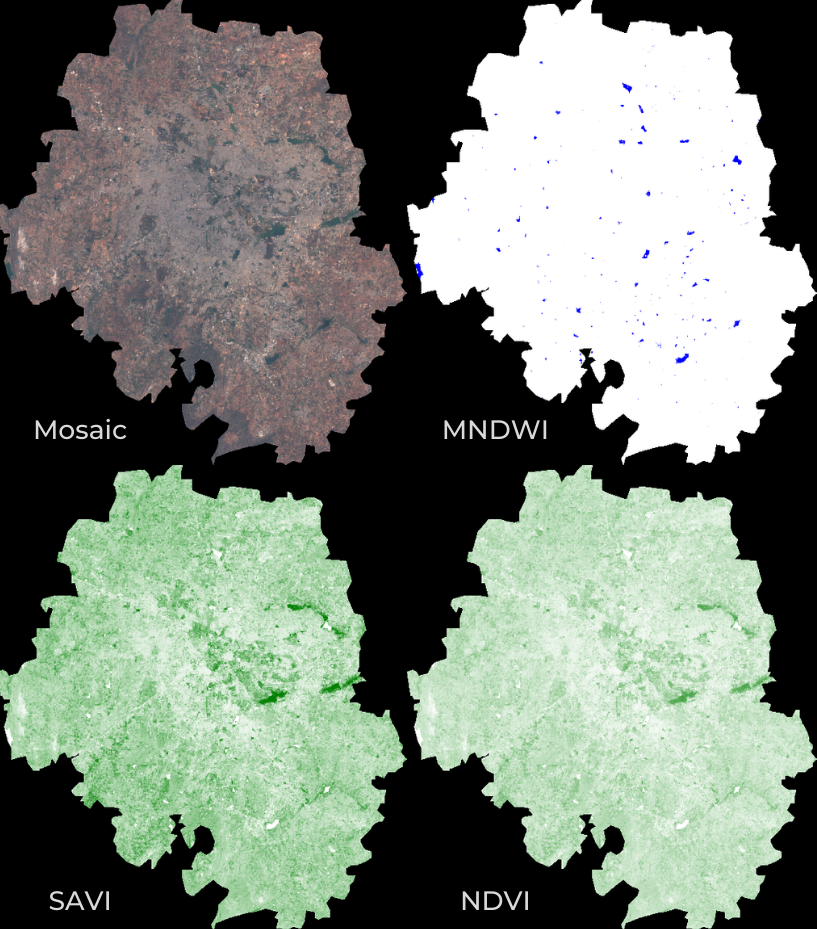

.filter(ee.Filter.bounds(geometry))02. Calculating Indices

Spectral indices are central to many aspects of remote sensing. Whether you are studying vegetation or tracking fires, you will need to compute a pixel-wise ratio of 2 or more bands. The most commonly used formula for calculating an index is the Normalized Difference between 2 bands. Earth Engine provides a helper function normalizedDifference() to help calculate normalized indices, such as the Normalized Difference Vegetation Index (NDVI). For more complex formulae, you can also use the expression() function to describe the calculation.
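The formulas can be checked by hand on a single pixel. This plain-JavaScript sketch uses made-up Sentinel-2 pixel values for bands B8 (NIR), B4 (RED), B3 (GREEN) and B11 (SWIR1), and the 0.0001 scale factor mentioned in the SAVI example of this section.

```javascript
// Reflectance = pixel value * 0.0001 for Sentinel-2 (as in the SAVI example)
const SCALE = 0.0001;

// Normalized difference of two bands: (a - b) / (a + b)
function normalizedDifference(a, b) {
  return (a - b) / (a + b);
}

// SAVI: 1.5 * ((NIR - RED) / (NIR + RED + 0.5)), computed on reflectances
function savi(nirValue, redValue) {
  const nir = nirValue * SCALE;
  const red = redValue * SCALE;
  return 1.5 * ((nir - red) / (nir + red + 0.5));
}

const pixel = {B8: 3000, B4: 1000, B3: 1200, B11: 2000}; // hypothetical values

const ndvi = normalizedDifference(pixel.B8, pixel.B4); // NIR vs RED
const mndwi = normalizedDifference(pixel.B3, pixel.B11); // GREEN vs SWIR1

console.log(ndvi); // 0.5
console.log(mndwi); // -0.25
console.log(savi(pixel.B8, pixel.B4).toFixed(4)); // 0.3333
```

NDVI and MNDWI give the same result on raw values or reflectances, because a common scale factor cancels out in (a - b) / (a + b). SAVI adds the constant 0.5, so it only gives meaningful results on reflectances.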

MNDWI, SAVI and NDVI images

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var admin2 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level2');

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'))

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'));

var geometry = filteredAdmin2.geometry();

Map.centerObject(geometry);

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

// Calculate Normalized Difference Vegetation Index (NDVI)

// 'NIR' (B8) and 'RED' (B4)

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

// Calculate Modified Normalized Difference Water Index (MNDWI)

// 'GREEN' (B3) and 'SWIR1' (B11)

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

// Calculate Soil-adjusted Vegetation Index (SAVI)

// 1.5 * ((NIR - RED) / (NIR + RED + 0.5))

// For more complex indices, you can use the expression() function

// Note:

// For the SAVI formula, the pixel values need to be converted to reflectances

// Multiplying the pixel values by 'scale' gives us the reflectance value

// The scale value is 0.0001 for Sentinel-2 dataset

var savi = image.expression(

'1.5 * ((NIR - RED) / (NIR + RED + 0.5))', {

'NIR': image.select('B8').multiply(0.0001),

'RED': image.select('B4').multiply(0.0001),

}).rename('savi');

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

var ndviVis = {min:0, max:1, palette: ['white', 'green']};

var ndwiVis = {min:0, max:0.5, palette: ['white', 'blue']};

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

Map.addLayer(mndwi.clip(geometry), ndwiVis, 'mndwi');

Map.addLayer(savi.clip(geometry), ndviVis, 'savi');

Map.addLayer(ndvi.clip(geometry), ndviVis, 'ndvi');

Exercise

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var admin2 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level2');

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'))

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'));

var geometry = filteredAdmin2.geometry();

Map.centerObject(geometry);

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

// Exercise

// Change the filter to select your chosen Admin2 region

// Calculate the Normalized Difference Built-Up Index (NDBI) for the image

// Hint: NDBI = (SWIR1 - NIR) / (SWIR1 + NIR)

// Visualize the built-up area using a 'red' palette

03. Computation on ImageCollections

So far we have learnt how to run computation on single images. If you want to apply some computation, such as calculating an index, to many images, you need to use map(). You first define a function that takes 1 image and returns the result of the computation on that image. Then you can map() that function over the ImageCollection, which results in a new ImageCollection with the results of the computation. This is similar to a for-loop that you may be familiar with, but using map() allows the computation to run in parallel. Learn more at Mapping over an ImageCollection.
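The map() pattern can be sketched with an ordinary JavaScript array. In this plain-JavaScript sketch (not Earth Engine code), each hypothetical "image" holds 2-pixel band arrays, and addNDVI returns a new image with an extra band instead of modifying its input, the way image.addBands() returns a new image. Array.map runs the calls one after another, unlike Earth Engine.

```javascript
// Hypothetical collection of 2 images, each with 2 pixels per band
const collection = [
  {id: 'a', bands: {B8: [3000, 2500], B4: [1000, 2500]}},
  {id: 'b', bands: {B8: [2800, 2000], B4: [1200, 1000]}},
];

// Takes 1 image and returns a NEW image with an 'ndvi' band added
function addNDVI(image) {
  const {B8, B4} = image.bands;
  const ndvi = B8.map((nir, i) => (nir - B4[i]) / (nir + B4[i]));
  return {...image, bands: {...image.bands, ndvi}};
}

// Apply the function to every image, like filteredS2.map(addNDVI)
const withNdvi = collection.map(addNDVI);

withNdvi.forEach((img) => console.log(img.id, img.bands.ndvi));
// a: 0.5 and 0
// b: 0.4 and 0.333...

// The input images are unchanged
console.log('ndvi' in collection[0].bands); // false
```

Because the function depends only on the image it receives, each call is independent, which is what lets Earth Engine distribute the work.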

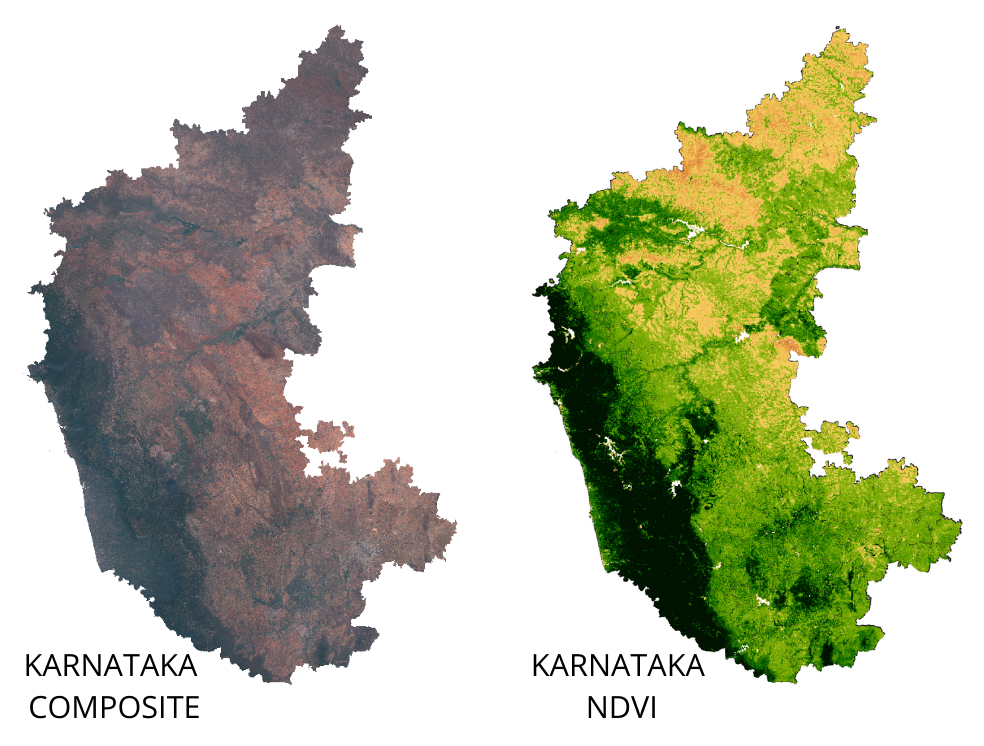

NDVI computation on an ImageCollection

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var admin1 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level1');

// Select an Admin1 region

var filteredAdmin1 = admin1.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'));

var geometry = filteredAdmin1.geometry();

Map.centerObject(geometry);

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var composite = filteredS2.median().clip(geometry);

Map.addLayer(composite, rgbVis, 'Admin1 Composite');

// Write a function that computes NDVI for an image and adds it as a band

function addNDVI(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename('ndvi');

return image.addBands(ndvi);

}

// Map the function over the collection

var withNdvi = filteredS2.map(addNDVI);

var composite = withNdvi.median();

var ndviComposite = composite.select('ndvi');

var palette = [

'FFFFFF', 'CE7E45', 'DF923D', 'F1B555', 'FCD163', '99B718',

'74A901', '66A000', '529400', '3E8601', '207401', '056201',

'004C00', '023B01', '012E01', '011D01', '011301'];

var ndviVis = {min:0, max:0.5, palette: palette };

Map.addLayer(ndviComposite.clip(geometry), ndviVis, 'ndvi');

Exercise

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var admin1 = ee.FeatureCollection('FAO/GAUL_SIMPLIFIED_500m/2015/level1');

// Select an Admin1 region

var filteredAdmin1 = admin1.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'));

var geometry = filteredAdmin1.geometry();

Map.centerObject(geometry);

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var composite = filteredS2.median();

Map.addLayer(composite.clip(geometry), rgbVis, 'Admin1 Composite');

// This function calculates both NDVI and NDWI indices

// and returns an image with 2 new bands added to the original image.

function addIndices(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename('ndvi');

var ndwi = image.normalizedDifference(['B3', 'B8']).rename('ndwi');

return image.addBands(ndvi).addBands(ndwi);

}

// Map the function over the collection

var withIndices = filteredS2.map(addIndices);

// Composite

var composite = withIndices.median();

print(composite);

// Exercise

// Display a map of NDWI for the region

// Select the 'ndwi' band and clip it before displaying

// Use a color palette from https://colorbrewer2.org/

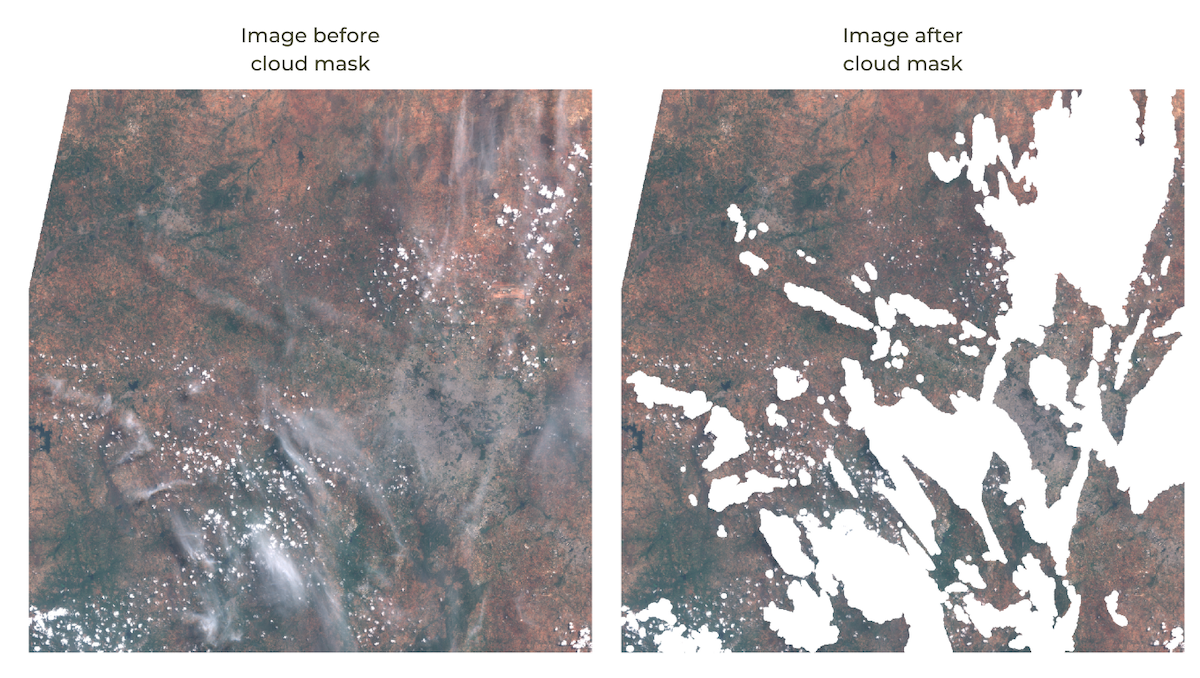

// Hint: Use .select() function to select a band04. Cloud Masking

Masking pixels in an image makes those pixels transparent and excludes them from analysis and visualization. To mask an image, we can use the updateMask() function and pass it an image with 0 and 1 values. All pixels where the mask image is 0 will be masked.

Most remote sensing datasets come with a QA or Cloud Mask band that contains information on whether each pixel is cloudy or not. Your Code Editor contains pre-defined functions for masking clouds for popular datasets under Scripts Tab → Examples → Cloud Masking. To understand how cloud-masking functions work and learn advanced techniques for bitmasking, please refer to our article on Working with QA Bands and Bitmasks in Google Earth Engine.
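The bit test used in the Sentinel-2 masking function can be tried on plain numbers. In the QA60 band, bit 10 flags opaque clouds and bit 11 flags cirrus, matching the 1 << 10 and 1 << 11 masks in the course script. This plain-JavaScript sketch uses hypothetical QA60 and red-band values, and its updateMask helper is a stand-in for Earth Engine's updateMask(), with null marking a masked pixel.

```javascript
// Bit positions used by the Sentinel-2 QA60 band
const cloudBitMask = 1 << 10; // 1024: opaque clouds
const cirrusBitMask = 1 << 11; // 2048: cirrus

// A pixel is clear only if both bits are 0
function isClear(qa) {
  return (qa & cloudBitMask) === 0 && (qa & cirrusBitMask) === 0;
}

// Stand-in for updateMask(): pixels with a false mask become null
function updateMask(values, mask) {
  return values.map((v, i) => (mask[i] ? v : null));
}

const qa60 = [0, 1024, 2048, 3072]; // clear, cloud, cirrus, both
const b4 = [900, 4000, 2500, 4200]; // hypothetical red-band values
const mask = qa60.map(isClear);

console.log(mask); // true, false, false, false
console.log(updateMask(b4, mask)); // 900, null, null, null
```

Testing each bit with a bitwise AND, rather than comparing the whole QA value, keeps the check correct even when other bits in the band are set.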

The script below takes the Sentinel-2 masking function and shows how to apply it on an image.

Applying pixel-wise QA bitmask

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var filteredS2 = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 35))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

// Sort the collection and pick the most cloudy image

var filteredS2Sorted = filteredS2.sort({

property: 'CLOUDY_PIXEL_PERCENTAGE',

ascending: false

});

var image = filteredS2Sorted.first();

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.centerObject(image);

Map.addLayer(image, rgbVis, 'Full Image', false);

// Write a function for Cloud masking

function maskS2clouds(image) {

var qa = image.select('QA60');

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

var mask = qa.bitwiseAnd(cloudBitMask).eq(0).and(

qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask)

.select('B.*')

.copyProperties(image, ['system:time_start']);

}

var imageMasked = ee.Image(maskS2clouds(image));

Map.addLayer(imageMasked, rgbVis, 'Masked Image');

Exercise

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var filteredS2 = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 35))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

// Sort the collection and pick the most cloudy image

var filteredS2Sorted = filteredS2.sort({

property: 'CLOUDY_PIXEL_PERCENTAGE',

ascending: false

});

var image = filteredS2Sorted.first();

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.centerObject(image);

Map.addLayer(image, rgbVis, 'Full Image', false);

// Write a function for Cloud masking

function maskS2clouds(image) {

var qa = image.select('QA60');

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

var mask = qa.bitwiseAnd(cloudBitMask).eq(0).and(

qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask)

.select('B.*')

.copyProperties(image, ['system:time_start']);

}

var imageMasked = ee.Image(maskS2clouds(image));

Map.addLayer(imageMasked, rgbVis, 'Masked Image (QA60 Band)', false);

// Use Google's Cloud Score+ Mask

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// Link S2 and CS+ results.

var imageWithCs = image.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.6;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var imageMaskedCs = ee.Image(maskLowQA(imageWithCs));

Map.addLayer(imageMaskedCs, rgbVis, 'Masked Image (Cloud Score+)');

// Google CloudScore+ dataset provides state-of-the-art

// cloud masks for Sentinel-2 images.

// It grades each image pixel on a continuous scale between 0 and 1,

// 0 = 'not clear' (occluded),

// 1 = 'clear' (unoccluded)

// Exercise

// Delete the 'geometry' variable and add a point at your chosen location

// Run the script and compare the results of the CloudScore+ mask with

// the QA60 mask

// Adjust the 'clearThreshold' value to suit your scene

// Hint:

// clearThreshold values between 0.50 and 0.65 generally work well

// Higher values will remove thin clouds, haze & cirrus shadows.

Learn more about the Cloud Score+ project.
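The Cloud Score+ mask in the script above replaces bit tests with a continuous threshold on the cs band. The minimal plain-JavaScript sketch below (not Earth Engine code; the five cs scores are made up) shows how raising clearThreshold from 0.50 to 0.65 keeps fewer pixels, mirroring image.select('cs').gte(clearThreshold) followed by updateMask().

```javascript
// Plain-JavaScript sketch of Cloud Score+ thresholding (not the Earth Engine API).
// Each pixel has a 'cs' score between 0 (occluded) and 1 (clear).
// Hypothetical scores for five pixels:
const csScores = [0.95, 0.70, 0.62, 0.55, 0.10];

// Returns one boolean per pixel: true = keep (score >= threshold),
// like image.select('cs').gte(clearThreshold).
function clearMask(scores, clearThreshold) {
  return scores.map(score => score >= clearThreshold);
}

// Number of pixels that survive the mask
function countKept(mask) {
  return mask.filter(Boolean).length;
}

console.log(countKept(clearMask(csScores, 0.50))); // 4
console.log(countKept(clearMask(csScores, 0.65))); // 2
```

The pixels scoring 0.62 and 0.55 are the borderline cases (for example thin haze) that a stricter threshold removes, at the cost of fewer valid observations.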

05. Reducers

When writing parallel computing code, a Reduce operation allows you to compute statistics over a large number of inputs. In Earth Engine, you need to run a reduction operation when creating composites, calculating statistics, doing regression analysis, etc. The Earth Engine API comes with a large number of built-in reducer functions (such as ee.Reducer.sum(), ee.Reducer.histogram(), ee.Reducer.linearFit() etc.) that can perform a variety of statistical operations on input data. You can run reducers using the reduce() function and related functions such as reduceRegion(). Earth Engine supports running reducers on all data structures that can hold multiple values, such as Images (reducers run across the bands of each pixel), ImageCollection, FeatureCollection, List, Dictionary etc. The script below introduces basic concepts related to reducers.
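To make the idea concrete, here is a plain-JavaScript sketch (not Earth Engine code) of two reductions used in the script: reducing a list to its mean, and reducing a stack of images to a per-pixel mean. The pixel arrays are made up, and null stands in for a masked pixel; as in Earth Engine, masked pixels are skipped. Earth Engine additionally renames the output bands of a collection reduction (for example B4 becomes B4_mean), which this sketch does not model.

```javascript
// Plain-JavaScript sketch of reducers (not the Earth Engine API).

// 1. Reduce a list of numbers to its mean,
//    analogous to ee.List.sequence(1, 10).reduce(ee.Reducer.mean()).
function meanReducer(values) {
  const sum = values.reduce((acc, v) => acc + v, 0);
  return sum / values.length;
}

// 2. Reduce a "collection" of images pixel by pixel.
//    Each image is a flat array of pixel values; null marks a masked pixel.
//    The result has one value per pixel, like imageCollection.reduce(ee.Reducer.mean()).
function reduceCollectionMean(images) {
  const nPixels = images[0].length;
  const result = [];
  for (let i = 0; i < nPixels; i++) {
    const valid = images.map(img => img[i]).filter(v => v !== null);
    result.push(valid.length > 0 ? meanReducer(valid) : null);
  }
  return result;
}

const list = Array.from({length: 10}, (_, i) => i + 1); // [1, 2, ..., 10]
console.log(meanReducer(list)); // 5.5

// Three hypothetical images with three pixels each
const images = [
  [100, 200, null],
  [300, null, null],
  [200, 400, null],
];
console.log(reduceCollectionMean(images)); // [ 200, 300, null ]
```

The second function is the key mental model for collection reductions: the reducer runs independently at every pixel location, across the images stacked on top of it.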

// Computing stats on a list

var myList = ee.List.sequence(1, 10);

print(myList);

// Use a reducer to compute average value

var mean = myList.reduce(ee.Reducer.mean());

print(mean);

var geometry = ee.Geometry.Polygon([[

[82.60642647743225, 27.16350437805251],

[82.60984897613525, 27.1618529901377],

[82.61088967323303, 27.163695288375266],

[82.60757446289062, 27.16517483230927]

]]);

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

Map.centerObject(geometry);

// Apply a reducer on a image collection

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

.select('B.*');

print(filtered.size());

var collMean = filtered.reduce(ee.Reducer.mean());

print('Reducer on Collection', collMean);

var image = ee.Image('COPERNICUS/S2_HARMONIZED/20190223T050811_20190223T051829_T44RPR');

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(image, rgbVis, 'Image');

Map.addLayer(geometry, {color: 'red'}, 'Farm');

// If we want to compute the average value in each band,

// we can use reduceRegion instead

var stats = image.reduceRegion({

reducer: ee.Reducer.mean(),

geometry: geometry,

scale: 10,

maxPixels: 1e10

});

print(stats);

// Result of reduceRegion is a dictionary.

// We can extract the values using .getNumber() function

print('Average value in B4', stats.getNumber('B4'));

Exercise

var geometry = ee.Geometry.Polygon([[

[82.60642647743225, 27.16350437805251],

[82.60984897613525, 27.1618529901377],

[82.61088967323303, 27.163695288375266],

[82.60757446289062, 27.16517483230927]

]]);

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

var image = ee.Image('COPERNICUS/S2_HARMONIZED/20190223T050811_20190223T051829_T44RPR');

Map.addLayer(image, rgbVis, 'Image');

Map.addLayer(geometry, {color: 'red'}, 'Farm');

Map.centerObject(geometry);

var ndvi = image.normalizedDifference(['B8', 'B4']).rename('ndvi');

// Exercise

// Compute the average NDVI for the farm from the given image

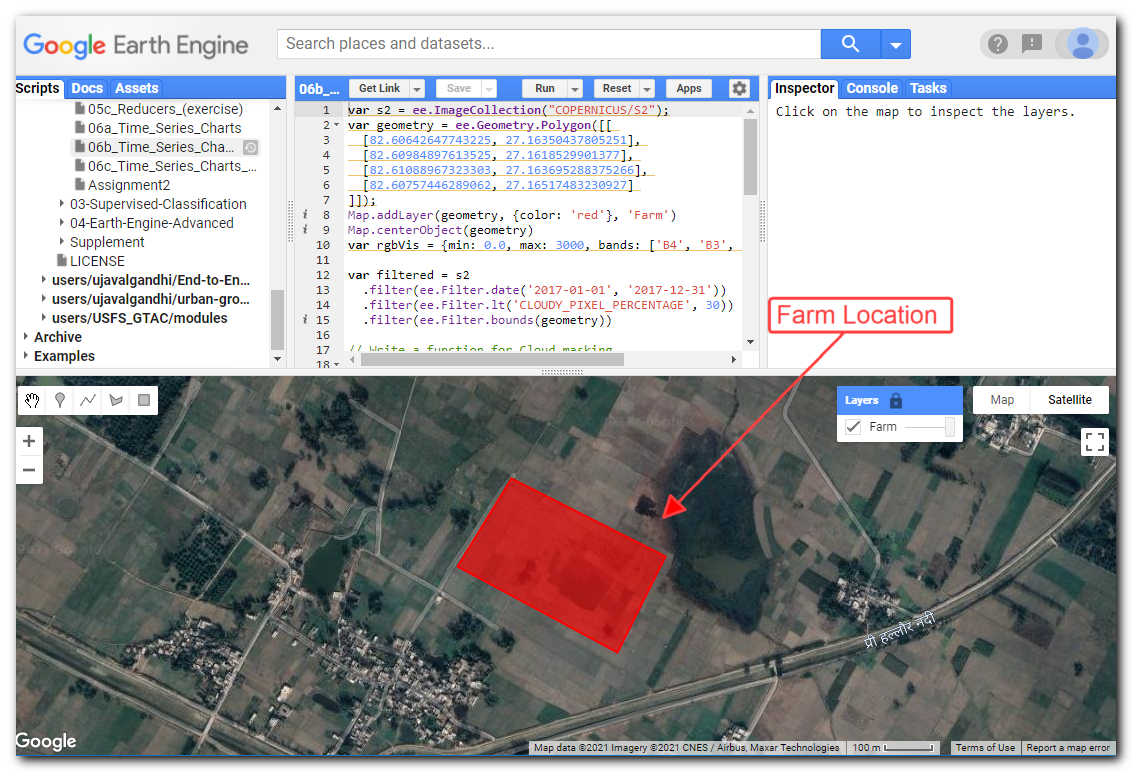

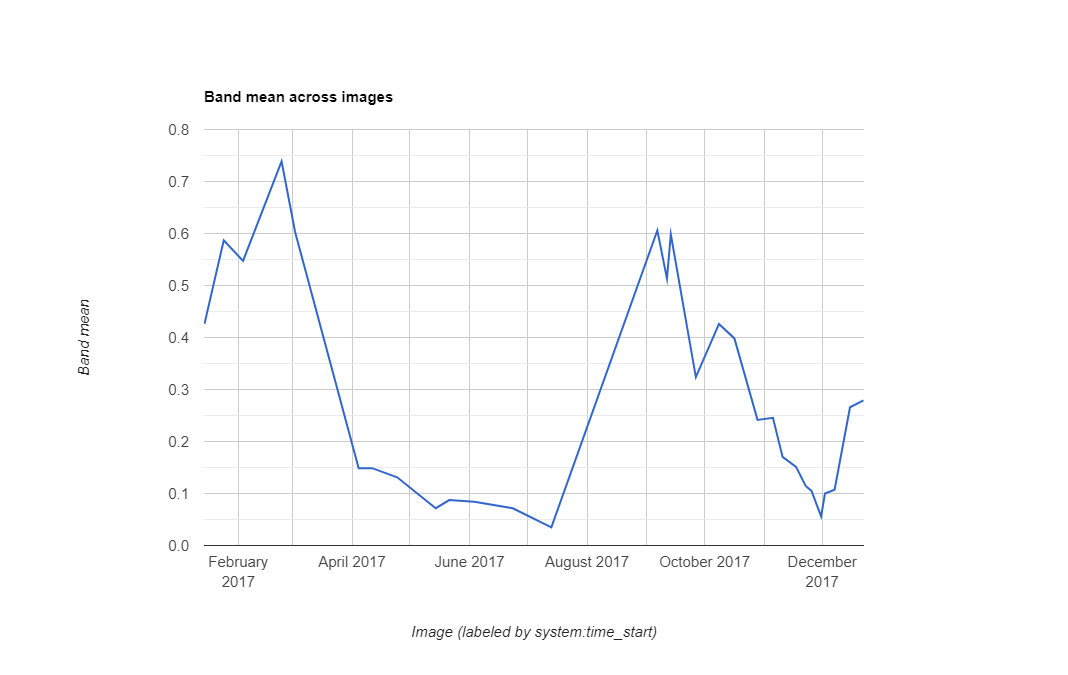

// Hint: Use the reduceRegion() function

06. Time-Series Charts

Now we can put together all the skills we have learnt so far (filter, map, reduce, and cloud-masking) to create a chart of average NDVI values for a given farm over one year. The Earth Engine API includes charting functions based on the Google Charts API. Here we use the ui.Chart.image.series() function to create a time-series chart.
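Two pieces of arithmetic sit behind this chart: the per-pixel NDVI, which normalizedDifference(['B8', 'B4']) computes as (B8 - B4) / (B8 + B4), and the average over the farm that ui.Chart.image.series() plots for each image date. The plain-JavaScript sketch below (not Earth Engine code; all pixel values and dates are hypothetical) shows both. It also illustrates why the 0.0001 scaling step does not change NDVI (the factor cancels in the ratio) and why a fully cloud-masked image produces a gap, which the chart option interpolateNulls: true bridges.

```javascript
// Plain-JavaScript sketch of the NDVI time-series arithmetic (not the Earth Engine API).

// normalizedDifference(['B8', 'B4']) computes (B8 - B4) / (B8 + B4)
function normalizedDifference(a, b) {
  return (a - b) / (a + b);
}

// Scaling both bands by the same factor cancels out in the ratio
const ndviFromDn = normalizedDifference(3000, 1000); // 0.5
const ndviFromReflectance = normalizedDifference(3000 * 0.0001, 1000 * 0.0001);
console.log(ndviFromDn, ndviFromReflectance); // both 0.5, up to floating-point rounding

// Mean of the unmasked pixels (null = masked), like ee.Reducer.mean() over the farm polygon
function regionMean(pixels) {
  const valid = pixels.filter(v => v !== null);
  if (valid.length === 0) return null; // fully masked image: no value for that date
  return valid.reduce((sum, v) => sum + v, 0) / valid.length;
}

// Hypothetical per-pixel NDVI values for three dates
const series = [
  {date: '2017-01-05', ndvi: [0.6, 0.7, null]},
  {date: '2017-01-15', ndvi: [null, null, null]}, // completely cloud-masked
  {date: '2017-01-25', ndvi: [0.5, 0.5, 0.8]},
];

// One [date, mean NDVI] row per image, as plotted by the chart
const rows = series.map(img => [img.date, regionMean(img.ndvi)]);
console.log(rows);
```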

Computing NDVI Time-series for a Farm

NDVI Time-series showing Dual-Cropping Cycle

var s2 = ee.ImageCollection('COPERNICUS/S2_HARMONIZED');

var geometry = ee.Geometry.Polygon([[

[82.60642647743225, 27.16350437805251],

[82.60984897613525, 27.1618529901377],

[82.61088967323303, 27.163695288375266],

[82.60757446289062, 27.16517483230927]

]]);

Map.addLayer(geometry, {color: 'red'}, 'Farm');

Map.centerObject(geometry);

var filtered = s2

.filter(ee.Filter.date('2017-01-01', '2018-01-01'))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

var maskLowQA = function(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

};

// map() the function to mask all images

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

// Function to scale pixel values to reflectance

var scaleValues = function(image) {

var scaledImage = image.multiply(0.0001);

// Multiplying creates a new image that loses the original properties,

// so copy the system:time_start property

// from the original image

return scaledImage

.copyProperties(image, ['system:time_start']);

};

// Function that computes NDVI for an image and adds it as a band

var addNDVI = function(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename('ndvi');

return image.addBands(ndvi);

};

// Map the functions over the collection

var withNdvi = filteredMasked

.map(scaleValues)

.map(addNDVI);

// Display a time-series chart

var chart = ui.Chart.image.series({

imageCollection: withNdvi.select('ndvi'),

region: geometry,

reducer: ee.Reducer.mean(),

scale: 10

}).setOptions({

lineWidth: 1,

pointSize: 2,

title: 'NDVI Time Series',

interpolateNulls: true,

vAxis: {title: 'NDVI'},

hAxis: {title: '', format: 'yyyy-MMM'}

});

print(chart);

Exercise

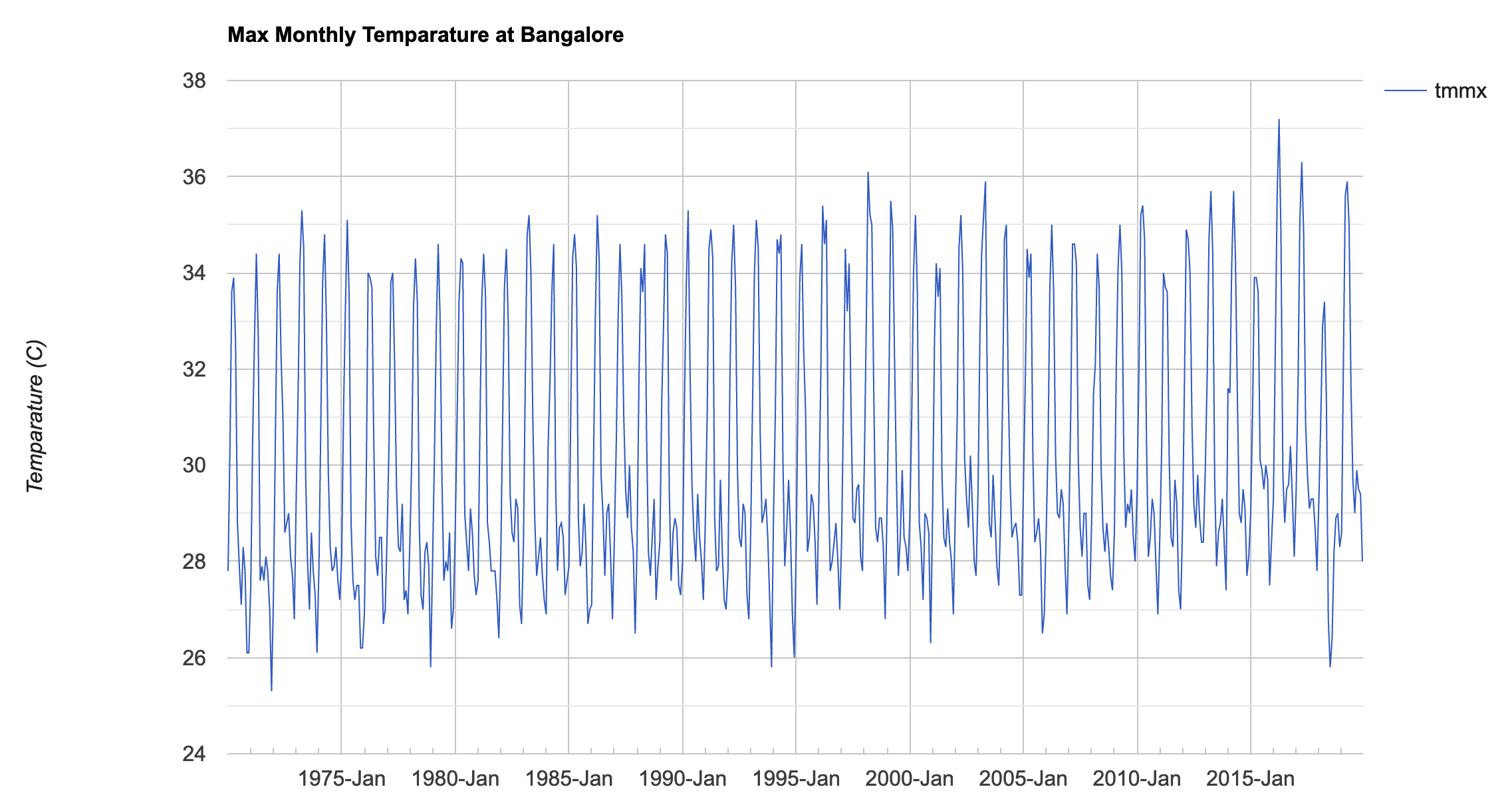

Assignment 2

Assignment 2 Expected Output

var terraclimate = ee.ImageCollection("IDAHO_EPSCOR/TERRACLIMATE");

var geometry = ee.Geometry.Point([77.54849920033682, 12.91215102400037]);

// Assignment

// Use the TerraClimate dataset to chart a 50-year time series

// of maximum temperature at any location

// Workflow

// Load the TerraClimate collection

// Select the 'tmmx' band

// Scale the band values

// Filter the scaled collection to the desired date range

// Use ui.Chart.image.series() function to create the chart

// Hint1

// The 'tmmx' band has a scaling factor of 0.1 as per

// https://developers.google.com/earth-engine/datasets/catalog/IDAHO_EPSCOR_TERRACLIMATE#bands

// This means that we need to multiply each pixel value by 0.1

// to obtain the actual temperature value

// map() a function and multiply each image

var tmax = terraclimate.select('tmmx');

// Function that applies the scaling factor to each image

// Multiplying creates a new image that doesn't have the same properties

// Use copyProperties() function to copy timestamp to new image

var scaleImage = function(image) {

return image.multiply(0.1)

.copyProperties(image,['system:time_start']);

};

var tmaxScaled = tmax.map(scaleImage);

// Hint2

// You will need to specify pixel resolution as the scale parameter

// in the charting function

// Use projection().nominalScale() to find the

// image resolution in meters

var image = ee.Image(terraclimate.first());

print(image.projection().nominalScale());

Module 3: Supervised Classification

Introduction to Machine Learning and Supervised Classification

Supervised classification is arguably the most important classical machine learning technique in remote sensing. Applications range from generating Land Use/Land Cover maps to change detection. Google Earth Engine is uniquely suited to do supervised classification at scale. The interactive nature of Earth Engine development allows for iterative development of supervised classification workflows by combining many different datasets into the model. This module covers the basic supervised classification workflow, accuracy assessment, hyperparameter tuning and change detection.

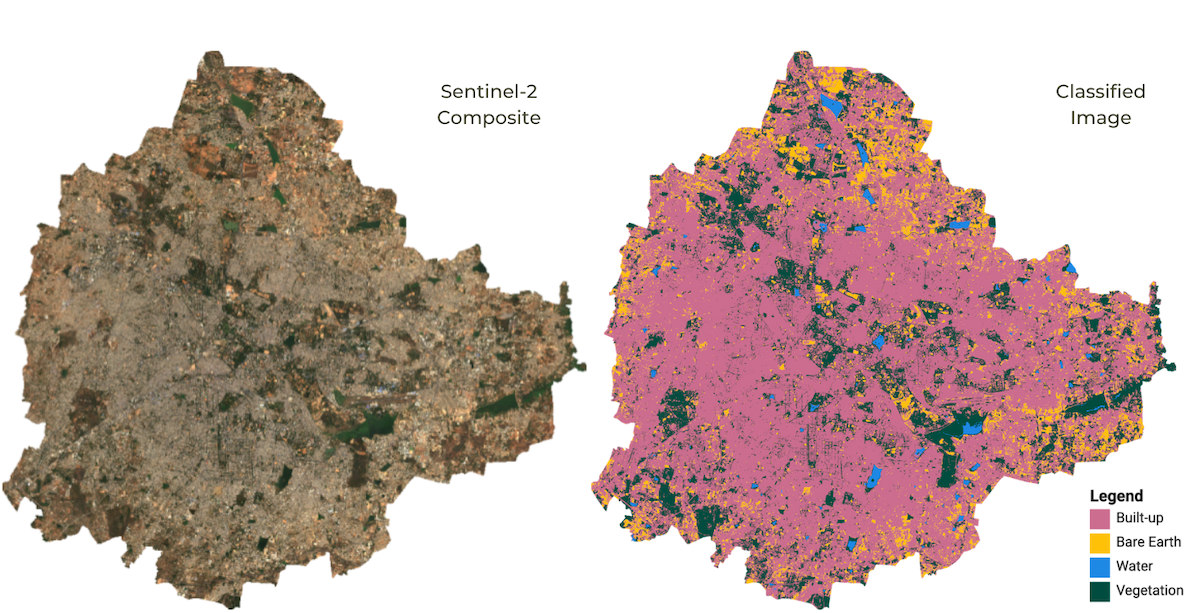

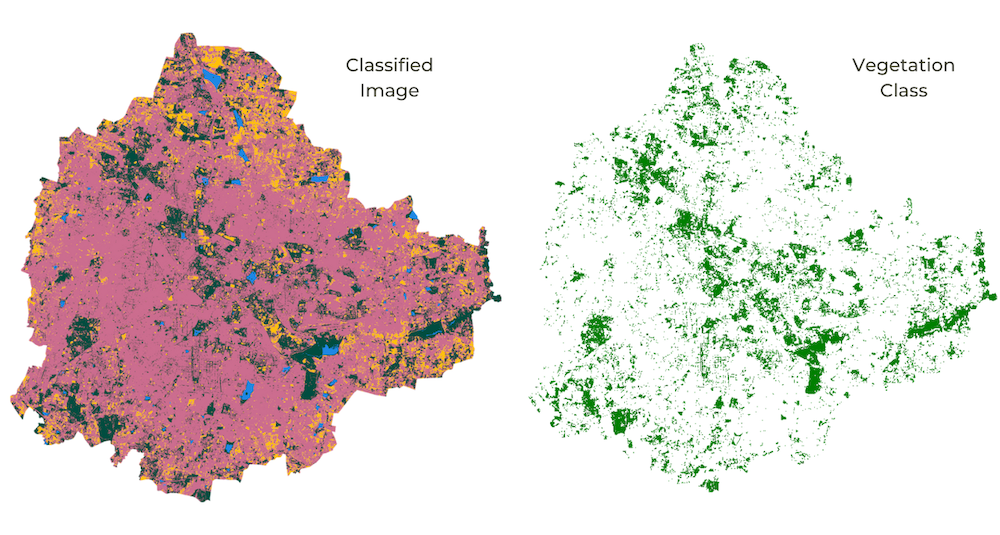

01. Basic Supervised Classification

We will learn how to do a basic land cover classification using training samples collected in the Code Editor over the high-resolution basemap imagery provided by Google Maps. This method requires no prior training data and is quite effective for generating high-quality classification samples anywhere in the world. The goal is to classify each source pixel into one of the following classes: urban, bare, water or vegetation. Using the drawing tools in the Code Editor, you create 4 new feature collections with points representing pixels of each class. Each feature collection has a property called landcover with values of 0, 1, 2 or 3, indicating whether the feature collection represents urban, bare, water or vegetation respectively. We then train a Random Forest classifier using this training set to build a model and apply it to all the pixels of the image to create a 4-class image.
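smileRandomForest(50) builds an ensemble of 50 decision trees. In the standard Random Forest algorithm, each tree predicts a class for a pixel and the class with the most votes wins. The plain-JavaScript sketch below shows only that voting step, using hypothetical tree outputs; it does not train any trees and is not how the SMILE library is implemented internally. It also maps the winning landcover code to the class names and the 4-color palette used in the script.

```javascript
// Plain-JavaScript sketch of Random Forest majority voting (not the Earth Engine API).
const classNames = ['urban', 'bare', 'water', 'vegetation'];  // landcover 0-3
const palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40']; // same order

// Count one vote per tree and return the class code with the most votes.
// Ties go to the lower class code in this sketch.
function majorityVote(treePredictions, numClasses) {
  const votes = new Array(numClasses).fill(0);
  for (const classCode of treePredictions) {
    votes[classCode] += 1;
  }
  let best = 0;
  for (let c = 1; c < numClasses; c++) {
    if (votes[c] > votes[best]) best = c;
  }
  return best;
}

// Hypothetical predictions from 5 trees for a single pixel
const predicted = majorityVote([3, 3, 1, 3, 2], classNames.length);
console.log(predicted, classNames[predicted], palette[predicted]); // 3 vegetation #004d40
```

Because the class codes double as list indices, the same integer selects both the class name and its display color, which is what {min: 0, max: 3, palette: palette} relies on when the classified image is added to the map.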

Fun fact: The classifiers in the Earth Engine API have names starting with smile, such as ee.Classifier.smileRandomForest(). The smile part refers to the Statistical Machine Intelligence and Learning Engine (SMILE) Java library, which Google Earth Engine uses to implement these algorithms.

Supervised Classification Output

var city = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/bangalore_boundary');

var geometry = city.geometry();

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

// The following collections were created using the

// Drawing Tools in the code editor

var urban = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/urban_gcps');

var bare = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/bare_gcps');

var water = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/water_gcps');

var vegetation = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/vegetation_gcps');

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

var gcps = urban.merge(bare).merge(water).merge(vegetation);

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

// Choose a 4-color palette

// Assign a color for each class in the following order

// Urban, Bare, Water, Vegetation

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

// Display the GCPs

// We use the style() function to style the GCPs

var palette = ee.List(palette);

var landcover = ee.List([0, 1, 2, 3]);

var gcpsStyled = ee.FeatureCollection(

landcover.map(function(lc){

var color = palette.get(landcover.indexOf(lc));

var markerStyle = { color: 'white', pointShape: 'diamond',

pointSize: 4, width: 1, fillColor: color};

return gcps.filter(ee.Filter.eq('landcover', lc))

.map(function(point){

return point.set('style', markerStyle);

});

})).flatten();

Map.addLayer(gcpsStyled.style({styleProperty:"style"}), {}, 'GCPs');

Map.centerObject(gcpsStyled);

Exercise

// Perform supervised classification for your city

// Delete the geometry below and draw a polygon

// over your chosen city

var geometry = ee.Geometry.Polygon([[

[77.4149, 13.1203],

[77.4149, 12.7308],

[77.8090, 12.7308],

[77.8090, 13.1203]

]]);

Map.centerObject(geometry);

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Exercise

// Add training points for 4 classes

// Assign the 'landcover' property as follows

// urban: 0

// bare: 1

// water: 2

// vegetation: 3

// After adding points, uncomment the lines below

// var gcps = urban.merge(bare).merge(water).merge(vegetation);

// // Overlay the point on the image to get training data.

// var training = composite.sampleRegions({

// collection: gcps,

// properties: ['landcover'],

// scale: 10,

// tileScale: 16

// });

// print(training);

// // Train a classifier.

// var classifier = ee.Classifier.smileRandomForest(50).train({

// features: training,

// classProperty: 'landcover',

// inputProperties: composite.bandNames()

// });

// // // Classify the image.

// var classified = composite.classify(classifier);

// // Choose a 4-color palette

// // Assign a color for each class in the following order

// // Urban, Bare, Water, Vegetation

// var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

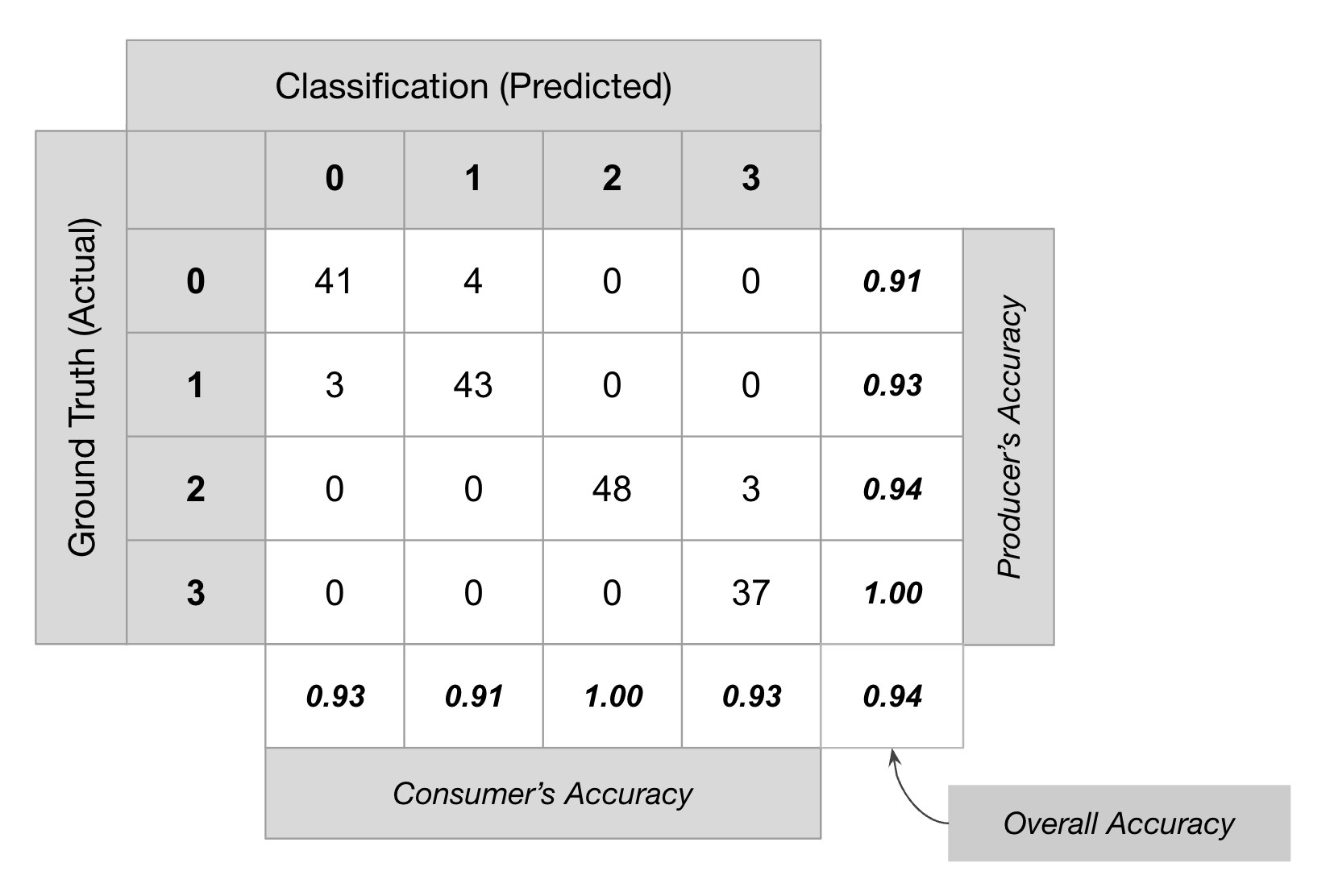

// Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

02. Accuracy Assessment

It is important to get a quantitative estimate of the accuracy of the classification. To do this, a common strategy is to divide your training samples into 2 random fractions: one used for training the model and the other for validating the predictions. Once a classifier is trained, it can be used to classify the entire image. We can then compare the classified values with the ones in the validation fraction. The errorMatrix() function of a FeatureCollection compares the reference and predicted labels of the validation samples and returns a Confusion Matrix (ee.ConfusionMatrix) representing the expected accuracy. Note that ee.Classifier.confusionMatrix() instead returns the confusion matrix computed on the training data itself, which usually gives an overly optimistic estimate.
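The scripts below split the samples with randomColumn() and a filter on the resulting random property. As a rough plain-JavaScript analogue (not Earth Engine code), the sketch attaches a uniform random number to each sample and splits at 0.6, so about 60% of the samples go to training and 40% to validation. The seeded mulberry32 generator and the 1000 samples are illustrative assumptions; Earth Engine uses its own generator (randomColumn() uses a seed of 0 by default, which keeps the split reproducible between runs).

```javascript
// Plain-JavaScript sketch of a random training/validation split (not the Earth Engine API).

// Small seeded PRNG (mulberry32) so the split is reproducible.
function mulberry32(a) {
  return function () {
    a |= 0;
    a = (a + 0x6D2B79F5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Attach a 'random' value to each sample, then split at the threshold:
// random < trainFraction -> training, otherwise -> validation.
function splitSamples(samples, trainFraction, seed) {
  const rand = mulberry32(seed);
  const withRandom = samples.map(s => ({...s, random: rand()}));
  return {
    training: withRandom.filter(s => s.random < trainFraction),
    validation: withRandom.filter(s => s.random >= trainFraction),
  };
}

// 1000 hypothetical samples with landcover codes 0-3
const samples = Array.from({length: 1000}, (_, i) => ({id: i, landcover: i % 4}));
const {training, validation} = splitSamples(samples, 0.6, 42);
console.log(training.length, validation.length); // roughly 600 and 400
```

Because every sample lands in exactly one fraction, the validation points are never seen during training, which is what makes the resulting accuracy an honest estimate.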

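Classification results are evaluated based on the following metrics:

- Overall Accuracy: The fraction of all validation samples that were classified correctly.

- Producer’s Accuracy: For each reference class, the fraction of its samples that were classified correctly, i.e. how well the classification predicts each class.

- Consumer’s Accuracy (Reliability): For each mapped class, the fraction of samples assigned to that class that truly belong to it, i.e. how reliable the prediction of each class is. It is also called User’s Accuracy.

- Kappa Coefficient: The agreement between the classification and the reference data after accounting for the agreement expected from random assignment.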
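The metrics above can be computed by hand from a confusion matrix. The plain-JavaScript sketch below (not Earth Engine code) uses the same orientation as errorMatrix('landcover', 'classification') in the scripts that follow: rows are the reference classes and columns are the predicted classes. In Earth Engine itself, the ee.ConfusionMatrix object provides methods such as accuracy(), producersAccuracy(), consumersAccuracy(), kappa() and fscore(). The 2-class matrix is made up, and the sketch does not guard against classes with no samples (those would produce NaN).

```javascript
// Plain-JavaScript sketch of confusion-matrix metrics (not the Earth Engine API).
// matrix[i][j] = number of samples of actual class i predicted as class j,
// i.e. rows = reference labels, columns = predicted labels.
function accuracyMetrics(matrix) {
  const k = matrix.length;
  const rowTotals = matrix.map(row => row.reduce((a, v) => a + v, 0));
  const colTotals = matrix[0].map((_, j) => matrix.reduce((a, row) => a + row[j], 0));
  const total = rowTotals.reduce((a, v) => a + v, 0);
  const correct = matrix.reduce((a, row, i) => a + row[i], 0);

  const overall = correct / total;
  // Producer's accuracy: correct / row total (complement of omission error)
  const producers = matrix.map((row, i) => row[i] / rowTotals[i]);
  // Consumer's accuracy: correct / column total (complement of commission error)
  const consumers = matrix.map((row, i) => row[i] / colTotals[i]);
  // Kappa: agreement beyond the chance agreement expected from the row/column totals
  let expected = 0;
  for (let i = 0; i < k; i++) {
    expected += (rowTotals[i] / total) * (colTotals[i] / total);
  }
  const kappa = (overall - expected) / (1 - expected);
  // F1-score per class: harmonic mean of producer's and consumer's accuracy
  const f1 = producers.map((p, i) => (2 * p * consumers[i]) / (p + consumers[i]));
  return {overall, producers, consumers, kappa, f1};
}

// Hypothetical 2-class validation result
const metrics = accuracyMetrics([
  [40, 10], // 50 samples of class 0: 40 correct
  [5, 45],  // 50 samples of class 1: 45 correct
]);
console.log(metrics);
// overall 0.85, producers [0.8, 0.9], consumers [~0.889, ~0.818], kappa ~0.7
```

Here the chance agreement is 0.5, so an overall accuracy of 0.85 corresponds to a kappa of about 0.7: the classifier removes roughly 70% of the errors that random assignment would make.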

Accuracy Assessment

Don’t get carried away tweaking your model to give you the highest validation accuracy. You should use qualitative measures (such as visual inspection of results) together with quantitative measures to assess the results.

// Load training samples

// This was created by exporting the merged 'gcps' collection

// using Export.table.toAsset()

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Add a random column and split the GCPs into training and validation set

var gcps = gcps.randomColumn();

// This being a simpler classification, we use 60% of the points

// for training and 40% for validation. The normally recommended ratio is

// 70% training, 30% validation

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

//**************************************************************************

// Accuracy Assessment

//**************************************************************************

// Use classification map to assess accuracy using the validation fraction

// of the overall training set created above.

var test = classified.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

tileScale: 16,

scale: 10,

});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

// Printing of confusion matrix may time out. Alternatively, you can export it as CSV

print('Confusion Matrix', testConfusionMatrix);

print('Test Accuracy', testConfusionMatrix.accuracy());

// Alternate workflow

// Classify the sampled validation features directly, as is common in machine learning practice

var validation = composite.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

var test = validation.classify(classifier);

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

// Printing of confusion matrix may time out. Alternatively, you can export it as CSV

print('Confusion Matrix', testConfusionMatrix);

print('Test Accuracy', testConfusionMatrix.accuracy());

Exercise

// Load training samples

// This was created by exporting the merged 'gcps' collection

// using Export.table.toAsset()

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Add a random column and split the GCPs into training and validation set

var gcps = gcps.randomColumn();

// This being a simpler classification, we use 60% of the points

// for training and 40% for validation. The normally recommended ratio is

// 70% training, 30% validation

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

//**************************************************************************

// Accuracy Assessment

//**************************************************************************

// Use classification map to assess accuracy using the validation fraction

// of the overall training set created above.

var test = classified.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

tileScale: 16,

scale: 10,

});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

// Printing of confusion matrix may time out. Alternatively, you can export it as CSV

print('Confusion Matrix', testConfusionMatrix);

print('Test Accuracy', testConfusionMatrix.accuracy());

// Exercise

// Calculate and print the following assessment metrics

// 1. Producer's accuracy

// 2. Consumer's accuracy

// 3. F1-score

// Hint: Look at the ee.ConfusionMatrix module for appropriate methods

03. Feature Engineering

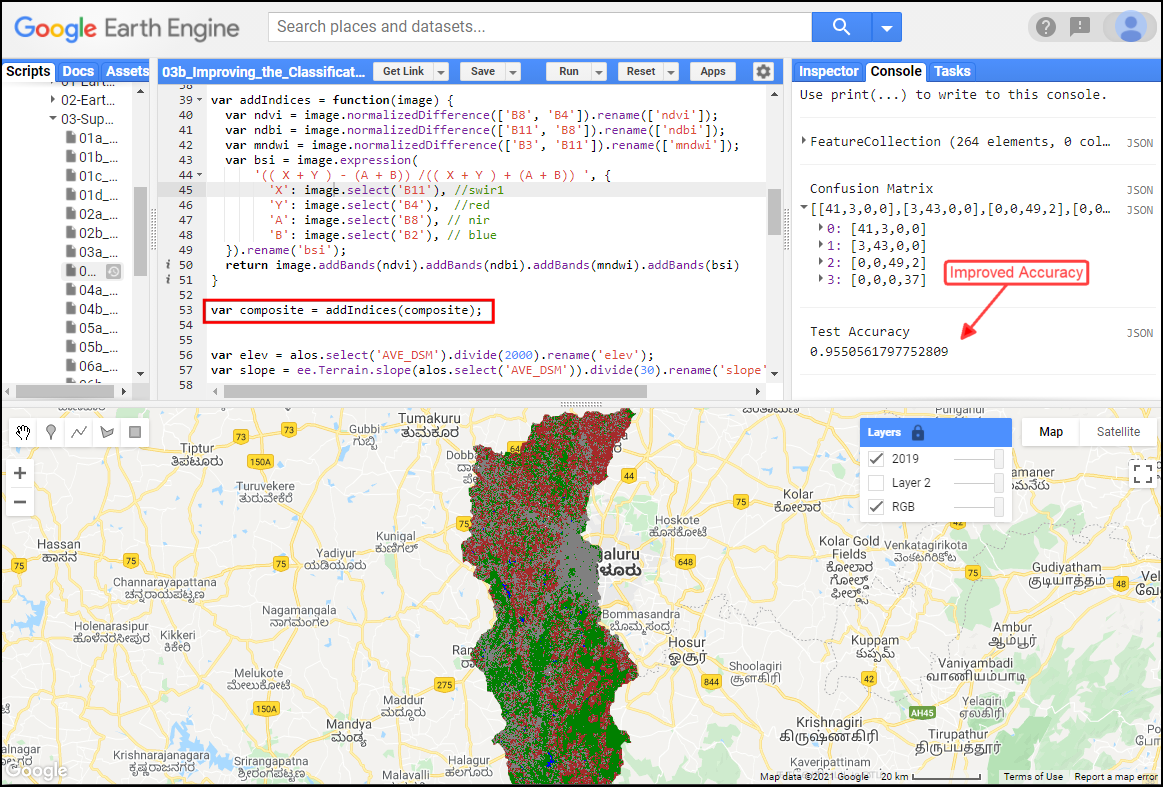

We can make a more effective machine learning model by adding more relevant inputs to the classification task. This process is known as Feature Engineering. Instead of using just the spectral bands as inputs to the model, we can add transformations (such as Spectral Indices) and other relevant inputs (such as Elevation and Slope). These new features help the classifier learn the patterns in the data more effectively.

Here we take the same example as before and augment it with the following new features:

- Spectral Indices: We add bands for different spectral indices such as NDVI, NDBI, MNDWI and BSI.

- Elevation and Slope: We also add slope and elevation bands from the ALOS DEM.
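Each index condenses a few bands into one number that separates the classes more directly: NDVI contrasts near-infrared and red, NDBI contrasts SWIR1 and near-infrared, MNDWI contrasts green and SWIR1, and BSI combines SWIR1, red, near-infrared and blue. The plain-JavaScript sketch below (not Earth Engine code) applies the same formulas as the addIndices() function in the script to two hypothetical pixels with made-up Sentinel-2 values.

```javascript
// Plain-JavaScript sketch of the spectral indices (not the Earth Engine API).
function normalizedDifference(a, b) {
  return (a - b) / (a + b);
}

// px holds Sentinel-2 band values for one pixel
function addIndices(px) {
  return {
    ndvi: normalizedDifference(px.B8, px.B4),   // vegetation: NIR vs red
    ndbi: normalizedDifference(px.B11, px.B8),  // built-up: SWIR1 vs NIR
    mndwi: normalizedDifference(px.B3, px.B11), // water: green vs SWIR1
    // bare soil: ((SWIR1 + red) - (NIR + blue)) / ((SWIR1 + red) + (NIR + blue))
    bsi: ((px.B11 + px.B4) - (px.B8 + px.B2)) /
         ((px.B11 + px.B4) + (px.B8 + px.B2)),
  };
}

// Hypothetical pixel values
const vegetationPixel = {B2: 300, B3: 600, B4: 400, B8: 3600, B11: 1600};
const waterPixel = {B2: 800, B3: 900, B4: 600, B8: 300, B11: 100};

console.log(addIndices(vegetationPixel)); // ndvi 0.8; ndbi, mndwi and bsi negative
console.log(addIndices(waterPixel));      // mndwi 0.8; ndvi negative
```

A single index value like MNDWI = 0.8 separates water from vegetation more cleanly than any one raw band, which is why these derived inputs tend to help the classifier.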

After adding bands with these new inputs, our training features have more input variables and the result is a much improved classification.
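The slope band comes from ee.Terrain.slope(), which derives slope in degrees from the local elevation gradient, so the result depends on knowing the pixel size in meters. A simplified plain-JavaScript sketch of that calculation follows. It uses central differences over the four neighboring pixels and is an illustration only; Earth Engine's exact implementation may differ in details such as edge handling. The elevations and the 30 m pixel size are hypothetical.

```javascript
// Plain-JavaScript sketch of slope from a DEM (not the Earth Engine API).
// Uses central differences over the 4 neighboring pixels of one pixel.
function slopeDegrees(north, south, east, west, pixelSize) {
  const dzdx = (east - west) / (2 * pixelSize);   // rise per meter, west to east
  const dzdy = (north - south) / (2 * pixelSize); // rise per meter, south to north
  const gradient = Math.sqrt(dzdx * dzdx + dzdy * dzdy);
  return Math.atan(gradient) * 180 / Math.PI;
}

// Hypothetical elevations (meters) around one pixel, with a 30 m pixel size
console.log(slopeDegrees(100, 100, 100, 100, 30)); // flat ground: 0
console.log(slopeDegrees(100, 100, 160, 100, 30)); // 60 m rise over 60 m: ~45 degrees
```

If the pixel size passed in were wrong (for example, a value in degrees instead of meters), the gradient and therefore the slope would be badly off. This is the reason the script sets a default projection on the ALOS mosaic before computing slope.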

Improved Classification Accuracy with use of Spectral Indices and Elevation Data

// Load training samples

// This was created by exporting the merged 'gcps' collection

// using Export.table.toAsset()

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Add New Features to the Model

// 1. Add Indices

var addIndices = function(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var ndbi = image.normalizedDifference(['B11', 'B8']).rename(['ndbi']);

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

var bsi = image.expression(

'(( X + Y ) - (A + B)) /(( X + Y ) + (A + B)) ', {

'X': image.select('B11'), //swir1

'Y': image.select('B4'), //red

'A': image.select('B8'), // nir

'B': image.select('B2'), // blue

}).rename('bsi');

return image.addBands(ndvi).addBands(ndbi).addBands(mndwi).addBands(bsi);

};

var composite = addIndices(composite);

// 2. Add Slope and Elevation

// Use ALOS World 3D

var alos = ee.ImageCollection('JAXA/ALOS/AW3D30/V4_1');

// This comes as a collection of images

// We mosaic it to create a single image

// Need to set the projection correctly on the mosaic

// for the slope computation

var proj = alos.first().projection();

var elevation = alos.select('DSM').mosaic()

.setDefaultProjection(proj)

.rename('elev');

var slope = ee.Terrain.slope(elevation)

.rename('slope');

var composite = composite.addBands(elevation).addBands(slope);

print('Composite Bands', composite);

// Train the model with new features

// Add a random column and split the GCPs into training and validation set

var gcps = gcps.randomColumn();

// This being a simpler classification, we use 60% of the points

// for training and 40% for validation. The normally recommended ratio is

// 70% training, 30% validation

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: trainingGcp,

properties: ['landcover'],

scale: 10,

tileScale: 16

});

print('Training Feature', training.first());

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50)

.train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

//**************************************************************************

// Accuracy Assessment

//**************************************************************************

// Use classification map to assess accuracy using the validation fraction

// of the overall training set created above.

var test = classified.sampleRegions({

collection: validationGcp,

properties: ['landcover'],

tileScale: 16,

scale: 10,

});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');

// Printing of confusion matrix may time out. Alternatively, you can export it as CSV

print('Confusion Matrix', testConfusionMatrix);

print('Test Accuracy', testConfusionMatrix.accuracy());

Exercise

// Exercise

// Improve your classification from Exercise 01c

// Add new features to your own classification using the code below

// Copy the block of code below to the end of your script

// ******** Start Copy/Paste ************

// Add New Features to the Model

var addIndices = function(image) {

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var ndbi = image.normalizedDifference(['B11', 'B8']).rename(['ndbi']);

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

var bsi = image.expression(

'(( X + Y ) - (A + B)) /(( X + Y ) + (A + B)) ', {

'X': image.select('B11'), //swir1

'Y': image.select('B4'), //red

'A': image.select('B8'), // nir

'B': image.select('B2'), // blue

}).rename('bsi');

return image.addBands(ndvi).addBands(ndbi).addBands(mndwi).addBands(bsi);

};

var composite = addIndices(composite);

// Calculate Slope and Elevation

// Use ALOS World 3D

var alos = ee.ImageCollection('JAXA/ALOS/AW3D30/V4_1');

// This comes as a collection of images

// We mosaic it to create a single image

// Need to set the projection correctly on the mosaic

// for the slope computation

var proj = alos.first().projection();

var elevation = alos.select('DSM').mosaic()

.setDefaultProjection(proj)

.rename('elev');

var slope = ee.Terrain.slope(elevation)

.rename('slope');

var composite = composite.addBands(elevation).addBands(slope);

// Overlay the point on the image to get training data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// // Classify the image.

var classified = composite.classify(classifier);

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification (S2 + Features)');

// ********** End Copy/Paste *************04. Using Satellite Embeddings

Satellite Embedding is a new global dataset produced by Google DeepMind’s AlphaEarth Foundations model. The model ingests time series from many different Earth observation datasets and produces a unified digital representation of each pixel, called an embedding. The embeddings are provided in Earth Engine as an analysis-ready dataset with yearly images from 2017 to the present. Using the embeddings as input features to a classification model can produce more accurate results.
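The script below trains `ee.Classifier.smileKNN()` (a k-nearest-neighbour classifier, k = 1 by default) on the embedding bands and then scores it with a confusion matrix. As a rough, non-authoritative illustration of those two steps, here is a plain-JavaScript sketch that runs outside Earth Engine. It assumes hypothetical 3-D toy vectors in place of the 64 embedding bands in the Earth Engine dataset, uses Euclidean distance, and orders the matrix with reference classes as rows and predicted classes as columns. The helper names (`classify1NN`, `errorMatrix`, `overallAccuracy`) are illustrative only and are not Earth Engine APIs.

```javascript
// Hypothetical toy data: 3-D vectors stand in for the 64 embedding bands.
// Class codes follow the course: 0 = urban, 1 = bare, 2 = water, 3 = vegetation.
const trainingSamples = [
  { embedding: [0.9, 0.1, 0.0], landcover: 0 },
  { embedding: [0.1, 0.9, 0.1], landcover: 1 },
  { embedding: [0.0, 0.1, 0.9], landcover: 2 },
  { embedding: [0.5, 0.5, 0.5], landcover: 3 },
];

const validationSamples = [
  { embedding: [0.8, 0.2, 0.1], landcover: 0 },
  { embedding: [0.2, 0.8, 0.0], landcover: 1 },
  { embedding: [0.1, 0.0, 0.8], landcover: 2 },
  { embedding: [0.4, 0.6, 0.5], landcover: 3 },
  { embedding: [0.7, 0.3, 0.1], landcover: 3 }, // lies closer to the urban sample
];

// Euclidean distance between two equal-length vectors.
function euclidean(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// 1-nearest-neighbour: return the label of the closest training sample.
function classify1NN(training, vector) {
  let bestLabel = null;
  let bestDist = Infinity;
  for (const sample of training) {
    const dist = euclidean(sample.embedding, vector);
    if (dist < bestDist) {
      bestDist = dist;
      bestLabel = sample.landcover;
    }
  }
  return bestLabel;
}

// Predict every validation sample, keeping its reference label.
const predictions = validationSamples.map((s) => ({
  landcover: s.landcover,
  classification: classify1NN(trainingSamples, s.embedding),
}));

// Confusion matrix: rows = reference class, columns = predicted class.
function errorMatrix(samples, numClasses) {
  const m = Array.from({ length: numClasses }, () => new Array(numClasses).fill(0));
  for (const s of samples) {
    m[s.landcover][s.classification] += 1;
  }
  return m;
}

// Overall accuracy = correctly classified samples (the diagonal) / all samples.
function overallAccuracy(m) {
  let correct = 0;
  let total = 0;
  for (let i = 0; i < m.length; i++) {
    for (let j = 0; j < m[i].length; j++) {
      total += m[i][j];
      if (i === j) correct += m[i][j];
    }
  }
  return correct / total;
}

const matrix = errorMatrix(predictions, 4);
console.log('Confusion Matrix', matrix);
console.log('Test Accuracy', overallAccuracy(matrix)); // Test Accuracy 0.8
```

Four of the five toy validation samples are labelled correctly; the fifth vegetation sample is closer to the urban training vector, so it lands in row 3, column 0 of the matrix. In Earth Engine the same bookkeeping is done for you by `errorMatrix()` and `accuracy()`.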

// Load training samples
// This was created by exporting the merged 'gcps' collection
// using Export.table.toAsset()
var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin
var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');
var geometry = boundary.geometry();
Map.centerObject(geometry);

var rgbVis = {
  min: 0.0,
  max: 3000,
  bands: ['B4', 'B3', 'B2'],
};

var year = 2019;
var startDate = ee.Date.fromYMD(year, 1, 1);
var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');
var filtered = s2
  .filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))
  .filter(ee.Filter.date(startDate, endDate))
  .filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection
var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');
var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score+ bands to each Sentinel-2
// image in the collection
// This is done using the linkCollection() function
var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.
function maskLowQA(image) {
  var qaBand = 'cs';
  var clearThreshold = 0.5;
  var mask = image.select(qaBand).gte(clearThreshold);
  return image.updateMask(mask);
}

var filteredMasked = filteredS2WithCs
  .map(maskLowQA)
  .select('B.*');

var composite = filteredMasked.median();

// Display the input composite.
Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Use Satellite Embeddings as Features
var embeddings = ee.ImageCollection('GOOGLE/SATELLITE_EMBEDDING/V1/ANNUAL');
var filteredEmbeddings = embeddings
  .filter(ee.Filter.date(startDate, endDate))
  .filter(ee.Filter.bounds(geometry));
var embeddingsImage = filteredEmbeddings.mosaic();
print('Satellite Embedding Image', embeddingsImage);

// Train a classifier.
// Add a random column and split the GCPs into training and validation sets
var gcps = gcps.randomColumn();

// This being a simpler classification, we use 60% of the points
// for training and 40% for validation. A commonly recommended
// ratio is 70% training, 30% validation
var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));
var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));

// Overlay the points on the image to get training data.
var training = embeddingsImage.sampleRegions({
  collection: trainingGcp,
  properties: ['landcover'],
  scale: 10,
  tileScale: 16
});
print('Training Feature', training.first());

var classifier = ee.Classifier.smileKNN()
  .train({
    features: training,
    classProperty: 'landcover',
    inputProperties: embeddingsImage.bandNames()
  });

// Classify the image.
var classified = embeddingsImage.classify(classifier);
var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];
Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

//**************************************************************************
// Accuracy Assessment
//**************************************************************************

// Use classification map to assess accuracy using the validation fraction
// of the overall training set created above.
var test = classified.sampleRegions({
  collection: validationGcp,
  properties: ['landcover'],
  tileScale: 16,
  scale: 10,
});

var testConfusionMatrix = test.errorMatrix('landcover', 'classification');
// Printing the confusion matrix may time out. Alternatively, you can export it as a CSV
print('Confusion Matrix', testConfusionMatrix);
print('Test Accuracy', testConfusionMatrix.accuracy());

Exercise

// Exercise
// Improve your classification from Exercise 01c
// Use satellite embeddings as features
// for your own classification
// Copy the block of code below to the end of your script

// ******** Start Copy/Paste ************
// Assumes startDate, endDate, geometry and the urban, bare, water and
// vegetation collections are already defined in your script

// Use Satellite Embeddings as Features
var embeddings = ee.ImageCollection('GOOGLE/SATELLITE_EMBEDDING/V1/ANNUAL');
var filteredEmbeddings = embeddings
  .filter(ee.Filter.date(startDate, endDate))
  .filter(ee.Filter.bounds(geometry));
var embeddingsImage = filteredEmbeddings.mosaic();
print('Satellite Embedding Image', embeddingsImage);

var gcps = urban.merge(bare).merge(water).merge(vegetation);

// Overlay the points on the image to get training data.
var training = embeddingsImage.sampleRegions({
  collection: gcps,
  properties: ['landcover'],
  scale: 10
});
print(training.first());

// Train a classifier.
var classifier = ee.Classifier.smileKNN().train({
  features: training,
  classProperty: 'landcover',
  inputProperties: embeddingsImage.bandNames()
});

// Classify the image.
var classified = embeddingsImage.classify(classifier);
Map.centerObject(geometry);

// Choose a 4-color palette
// Assign a color for each class in the following order
// Urban, Bare, Water, Vegetation
var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];
Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette},
    'Classification (Embeddings)');
// ******** End Copy/Paste ************

05. Exporting Classification Results

When working with complex classifiers over large regions, you may get a "User memory limit exceeded" or "Computation timed out" error in the Code Editor. This happens because code run in On-Demand Computation mode has a fixed time limit and a smaller memory allocation. For larger computations, you can use Batch mode with the Export functions.

Exports run in the background and can run longer than the 5-minute limit that applies to computations run from the Code Editor. This allows you to process very large and complex datasets.

See more tips and suggestions for scaling your workflows at Debugging Errors and Scaling Your Analysis.

// Load training samples

// This was created by exporting the merged 'gcps' collection

// using Export.table.toAsset()

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Use Satellite Embeddings as Features

var embeddings = ee.ImageCollection('GOOGLE/SATELLITE_EMBEDDING/V1/ANNUAL');

var filteredEmbeddings = embeddings

.filter(ee.Filter.date(startDate, endDate))

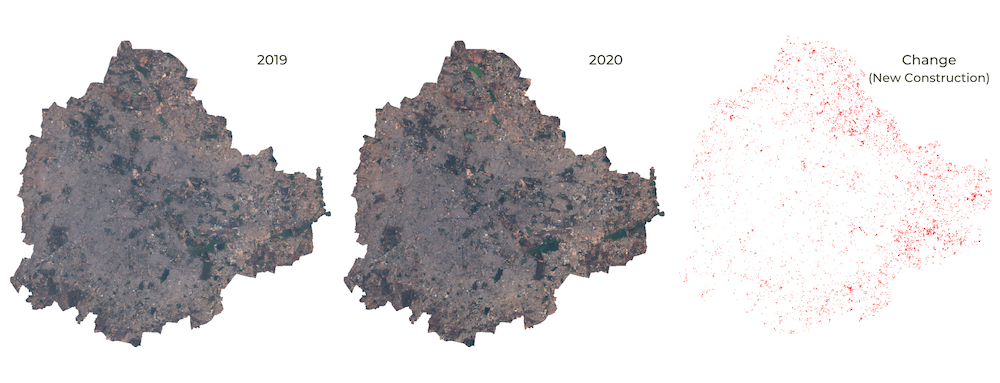

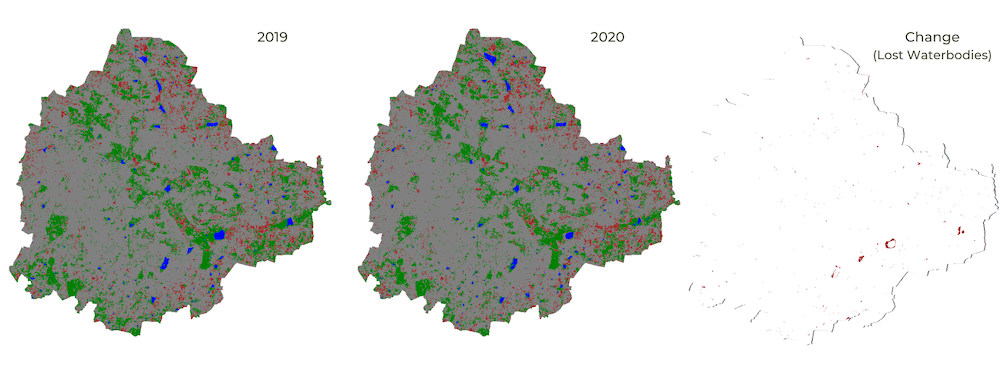

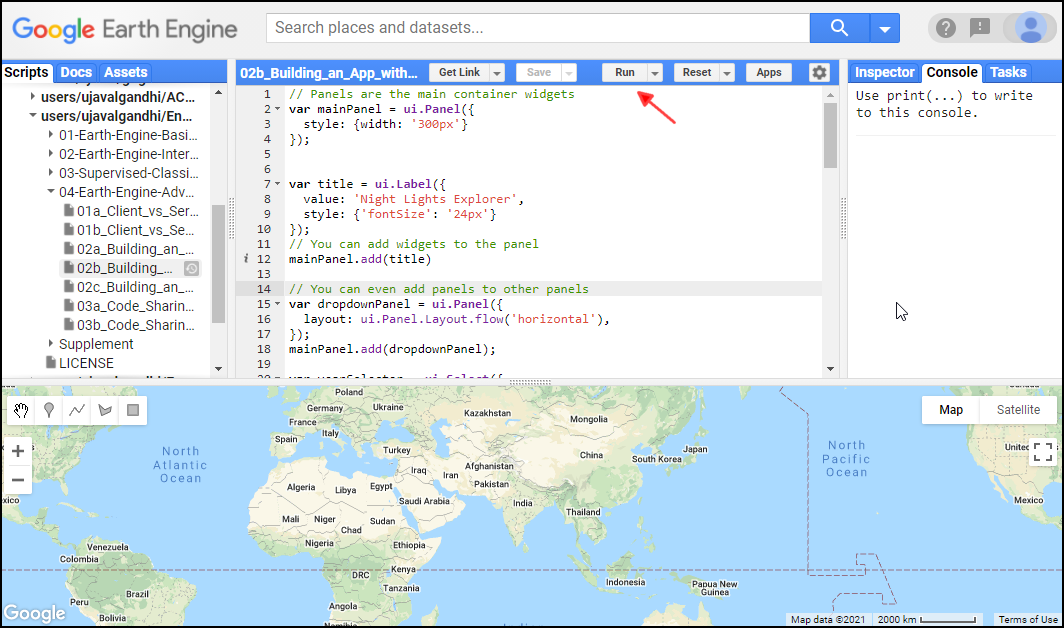

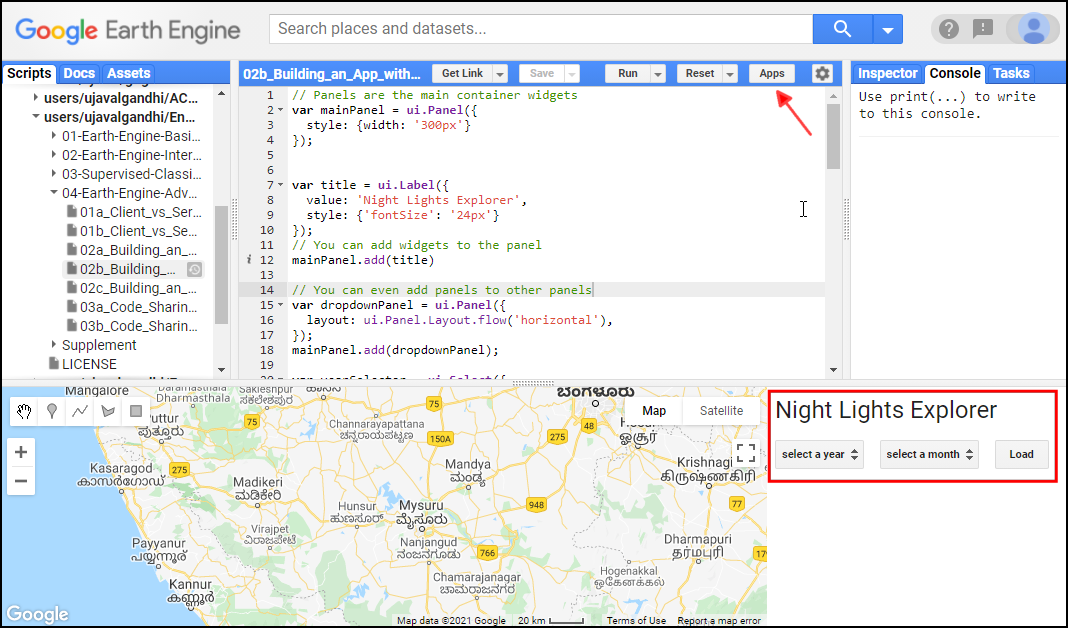

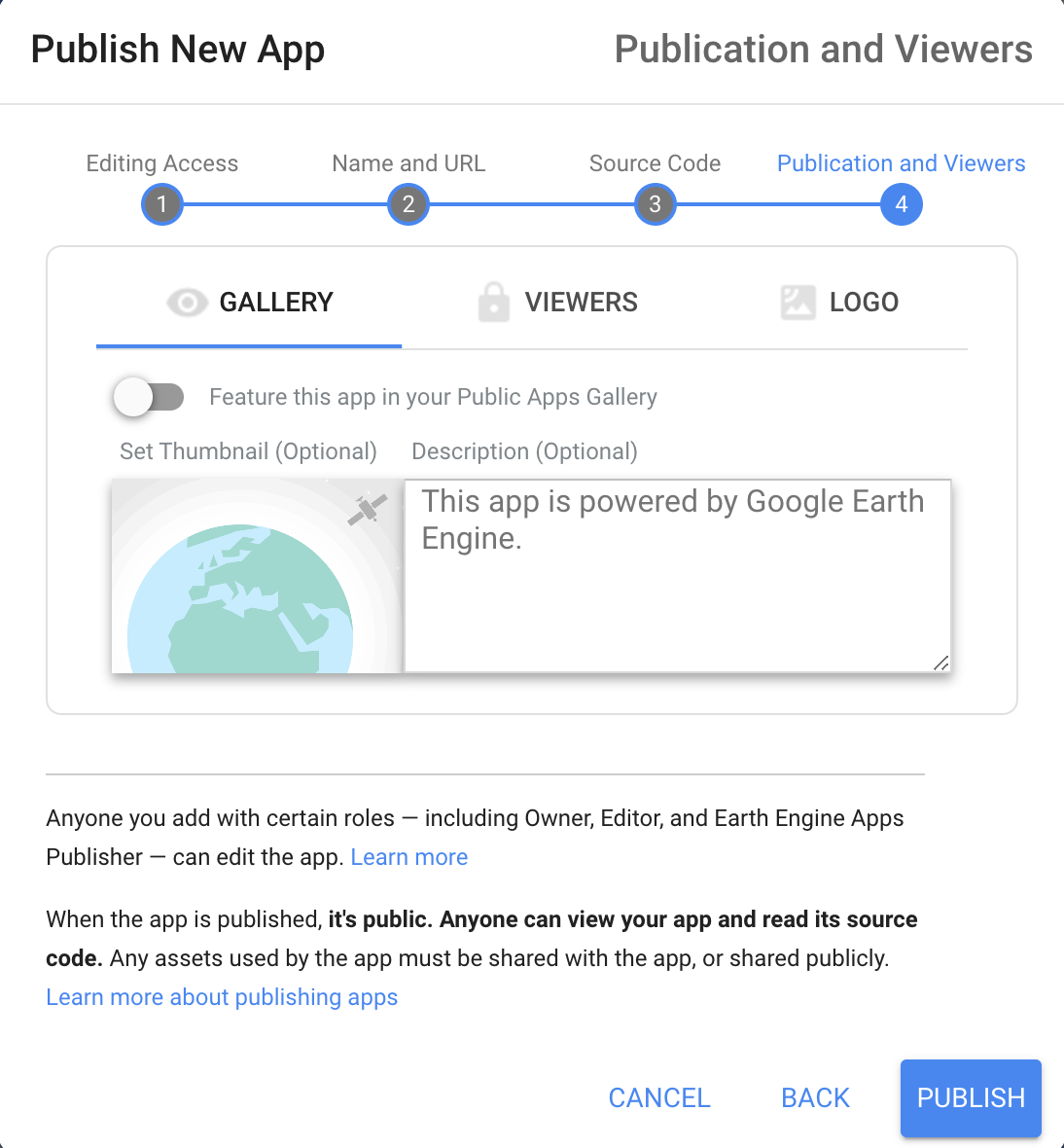

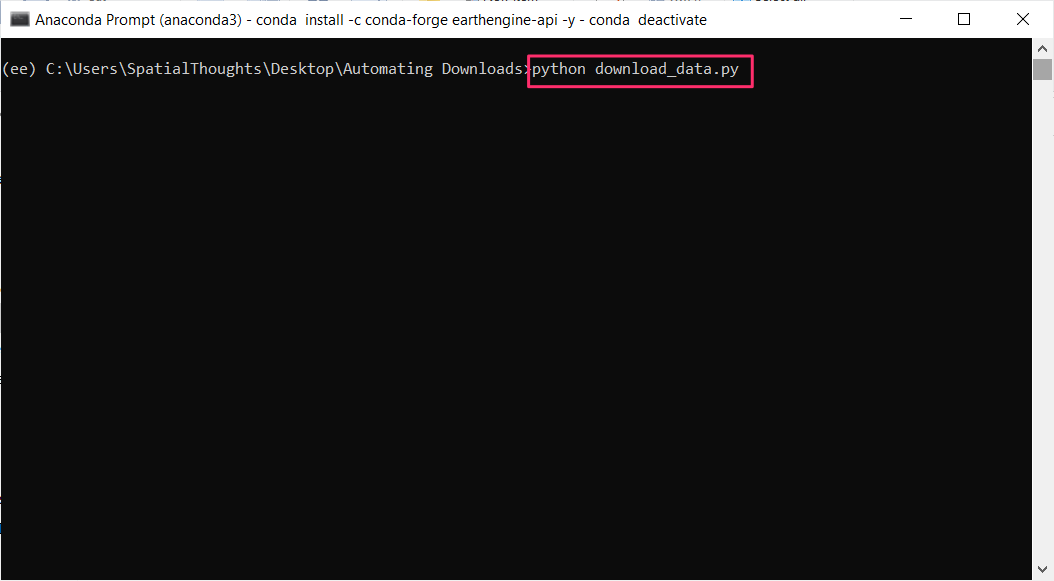

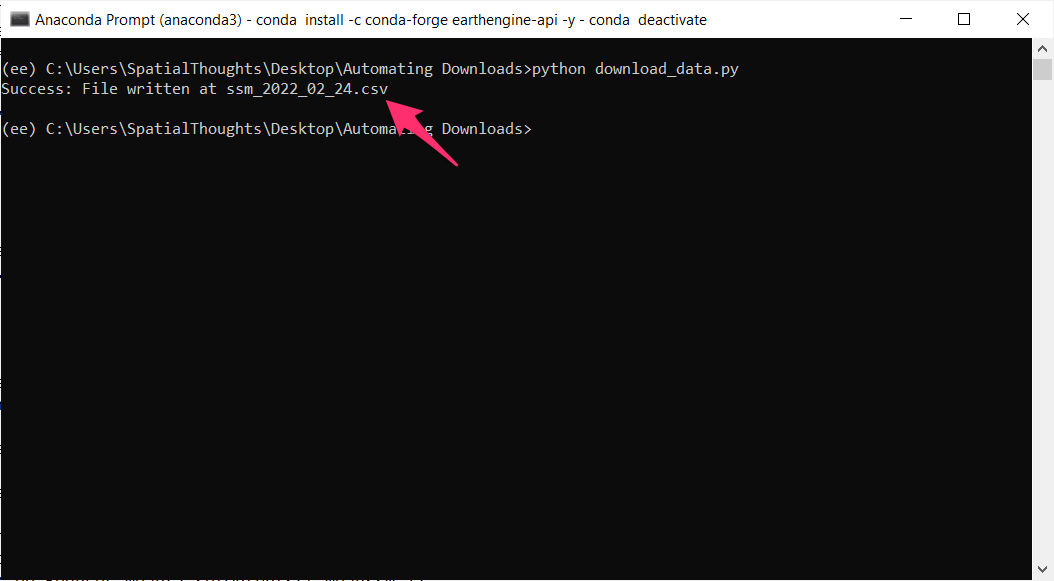

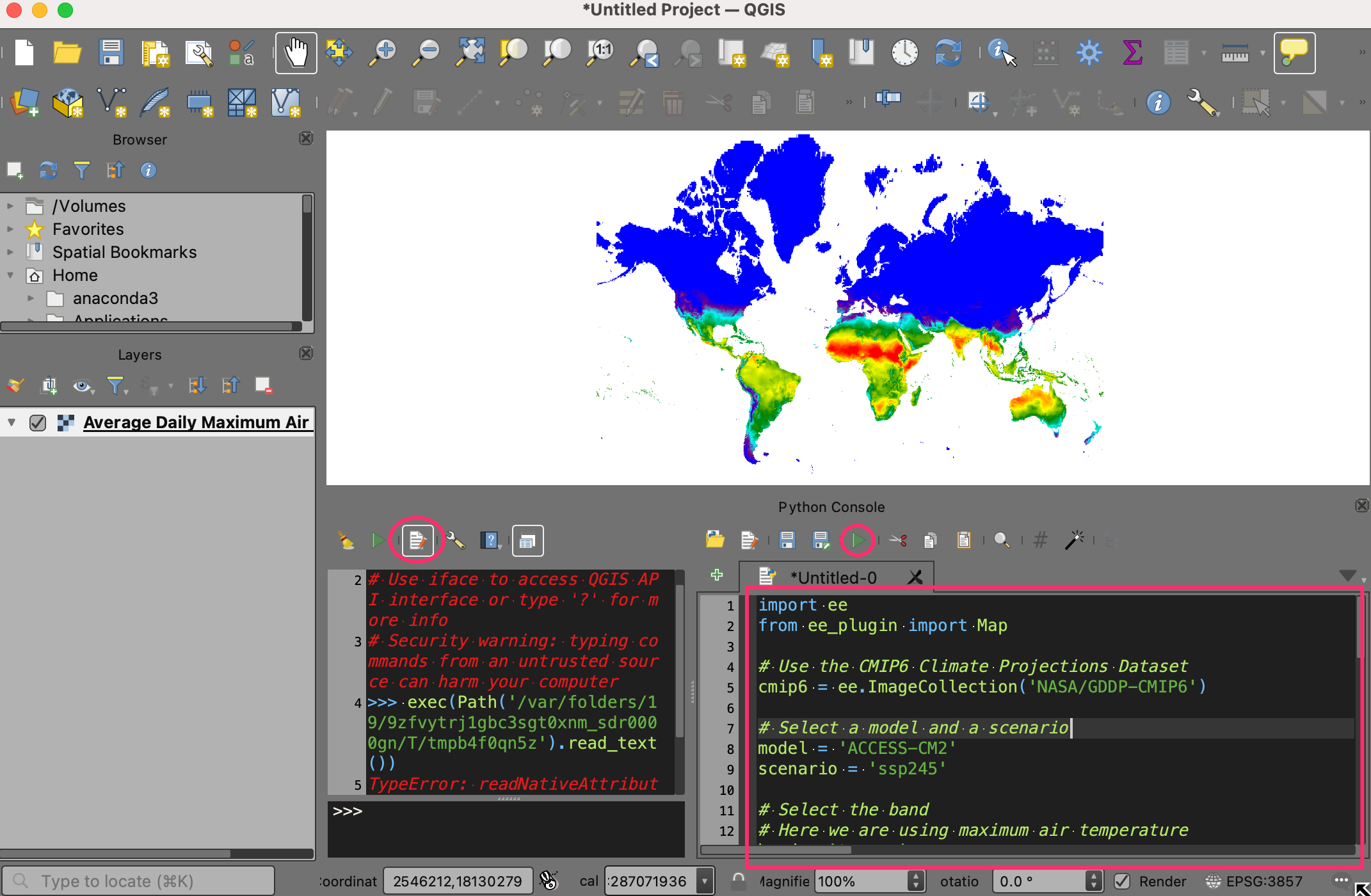

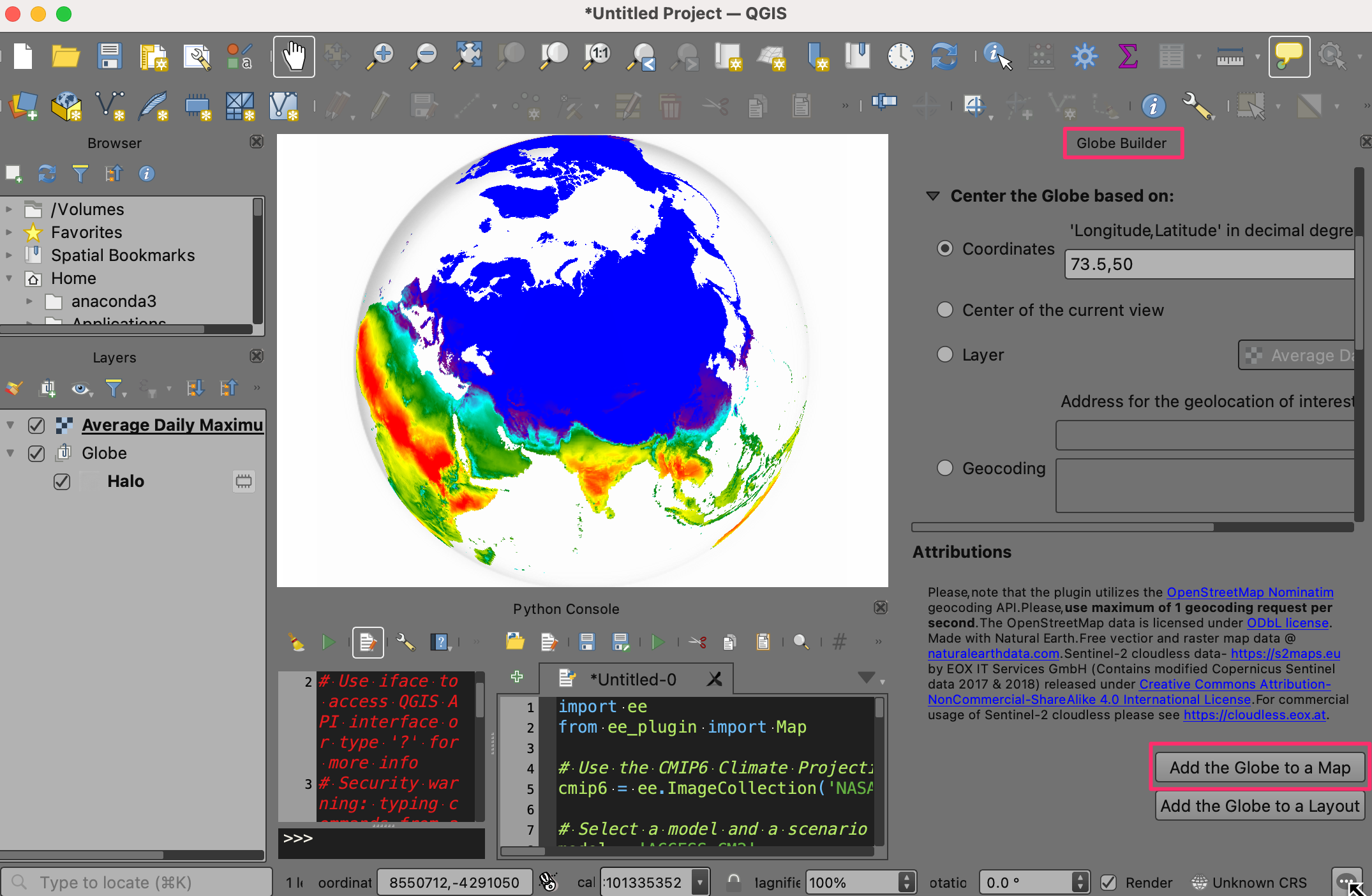

.filter(ee.Filter.bounds(geometry));