Google Earth Engine for Water Resources Management (Full Course)

Application-focused Introduction to Google Earth Engine.

Ujaval Gandhi

- Introduction

- Setting up the Environment

- Module 1: Google Earth Engine Fundamentals

- Module 2: Surface Water Mapping

- Module 3: Precipitation Time Series Analysis

- Module 4: Land Use Land Cover Classification

- Module 5: Flood Mapping

- Module 6: Drought Monitoring

- Module 7: Earth Engine Apps

- Supplement

- Data Credits

- License

- Citing and Referencing

Introduction

GIS and Remote Sensing play a critical role in the management of water resources. Many practitioners in this field are constrained by the availability of tools and computing resources to use these techniques effectively. Recent advances in cloud computing technology have given rise to platforms such as Google Earth Engine, which provide free access to a large pool of computational resources and datasets. This course is designed for researchers in the water sector, academicians, water managers, and stakeholders with basic knowledge of Remote Sensing. It will enable them to leverage this platform for water resource management applications.

You will learn Google Earth Engine programming by building the following 5 complete applications:

- Surface Water Mapping

- Precipitation Time Series Analysis

- Land Use Land Cover Classification

- Flood Mapping

- Drought Monitoring

Note: Certification and Support are only available for participants in our paid instructor-led classes.

Setting up the Environment

Sign-up for Google Earth Engine

If you already have a Google Earth Engine account, you can skip this step.

Visit our GEE Sign-Up Guide for step-by-step instructions.

Get the Course Materials

The course material and exercises are in the form of Earth Engine scripts shared via a code repository.

- Click this link to open Google Earth Engine code editor and add the repository to your account.

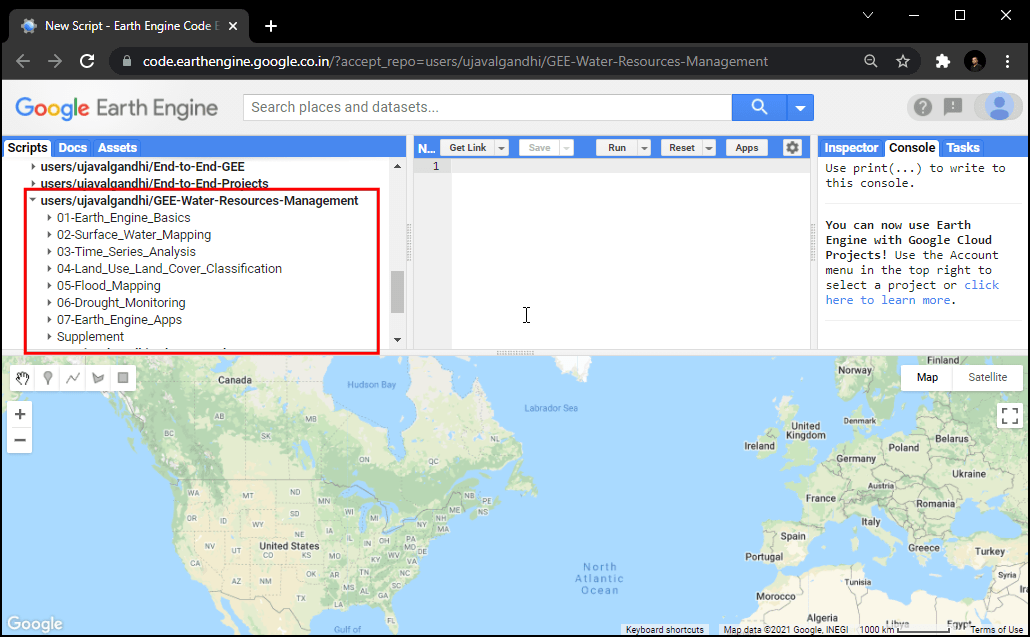

- If successful, you will have a new repository named users/ujavalgandhi/GEE-Water-Resources-Management in the Scripts tab in the Reader section.

- Verify that your code editor looks like below.

Code Editor After Adding the Class Repository

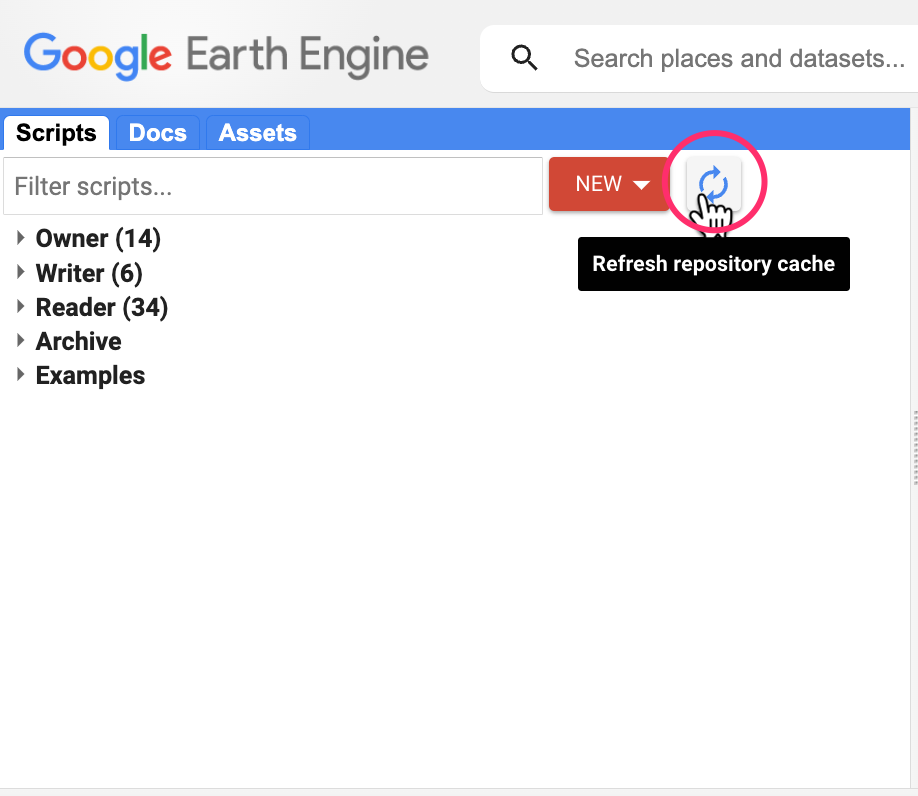

If you do not see the repository in the Reader section, click the Refresh repository cache button in your Scripts tab and it will show up.

Refresh repository cache

Get the Course Videos

The course is accompanied by a set of videos covering all the modules. These videos are recorded from our live instructor-led classes and are edited to make them easier to consume for self-study. We have 2 versions of the videos:

YouTube

We have created a YouTube Playlist with separate videos for each script and exercise to enable effective online-learning. Access the YouTube Playlist ↗

Vimeo

We are also making a combined full-length video for each module available on Vimeo. These videos can be downloaded for offline learning. Access the Vimeo Playlist ↗

Module 1: Google Earth Engine Fundamentals

In Module 1, you will gain the essential skills to find datasets, filter them to your region, clip them to your boundary, calculate remote sensing indices, extract water-bodies using thresholding techniques and export raster data.

01. Hello World

This script introduces the basic JavaScript syntax and the video covers the programming concepts you need to learn when using Earth Engine. To learn more, visit the Introduction to JavaScript for Earth Engine section of the Earth Engine User Guide.

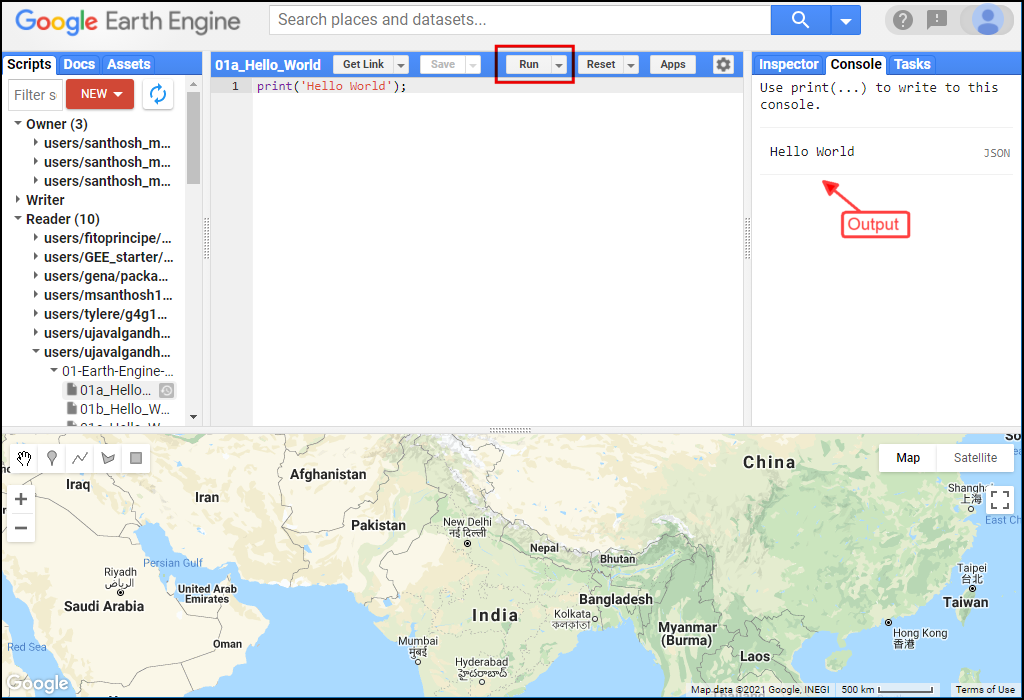

The Code Editor is an Integrated Development Environment (IDE) for the Earth Engine JavaScript API. It offers an easy way to type, debug, run and manage code. Type the code below and click Run to execute it and see the output in the Console tab.

Tip: You can use the keyboard shortcut Ctrl+Enter to run the code in the Code Editor

Hello World

print('Hello World');

// Variables

var city = 'Bengaluru';

var country = 'India';

print(city, country);

var population = 8400000;

print(population);

// List

var majorCities = ['Mumbai', 'Delhi', 'Chennai', 'Kolkata'];

print(majorCities);

// Dictionary

var cityData = {

'city': city,

'population': 8400000,

'elevation': 930

};

print(cityData);

// Function

var greet = function(name) {

return 'Hello ' + name;

};

print(greet('World'));

// This is a comment

Exercise

Saving Your Work

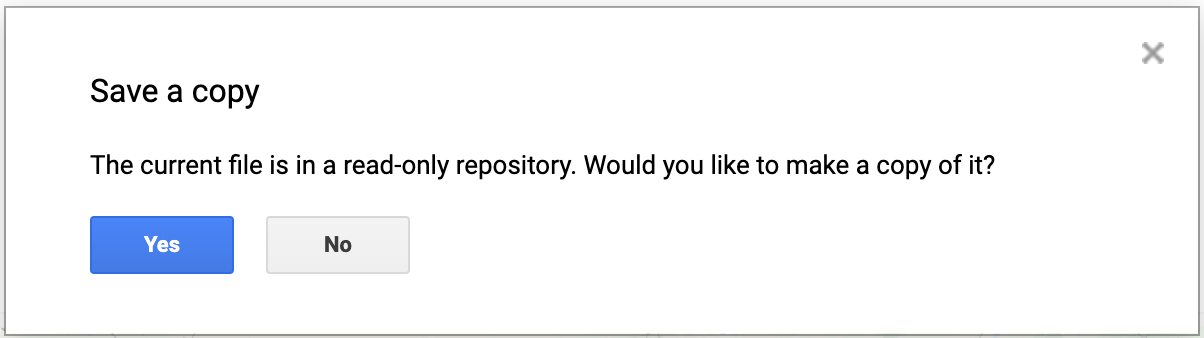

When you modify any script from the course repository, you may want to save a copy for yourself. If you try to click the Save button, you will get an error message like the one below.

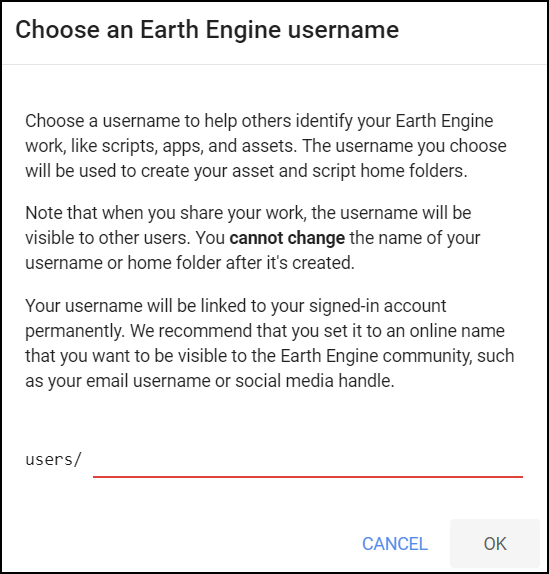

This is because the shared class repository is a read-only repository. You can click Yes to save a copy in your repository. If this is the first time you are using Earth Engine, you will be prompted to choose an Earth Engine username. Choose the name carefully, as it cannot be changed once created.

After entering your username, your home folder will be created. After that, you will be prompted to enter a new repository. A repository can help you organize and share code. Your account can have multiple repositories and each repository can have multiple scripts inside it. To get started, you can create a repository named default. Finally, you will be able to save the script.

02. Working with Image Collections

Most datasets in Earth Engine come as a ImageCollection.

An ImageCollection is a dataset that consists of images takes at

different time and locations - usually from the same satellite or data

provider. You can load a collection by searching the Earth Engine

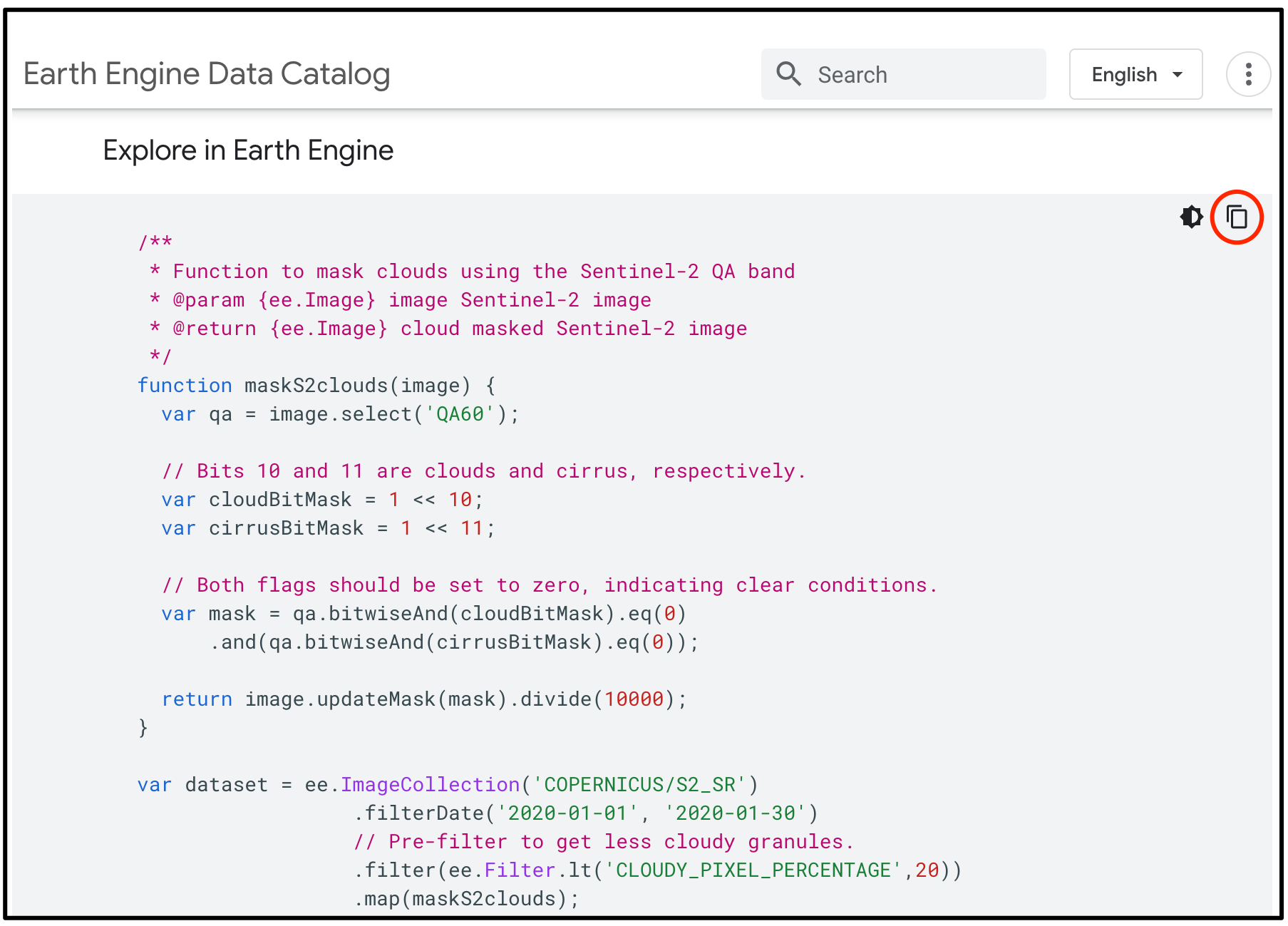

Data Catalog for the ImageCollection ID. Search for the

Sentinel-2 Level 2A dataset and you will find its id

COPERNICUS/S2_SR. Visit the Sentinel-2,

Level 2A page and see Explore in Earth Engine section to

find the code snippet to load and visualize the collection. This snippet

is a great starting point for your work with this dataset. Click the

Copy Code Sample button and paste the code into the

code editor. Click Run and you will see the image tiles load in

the map.

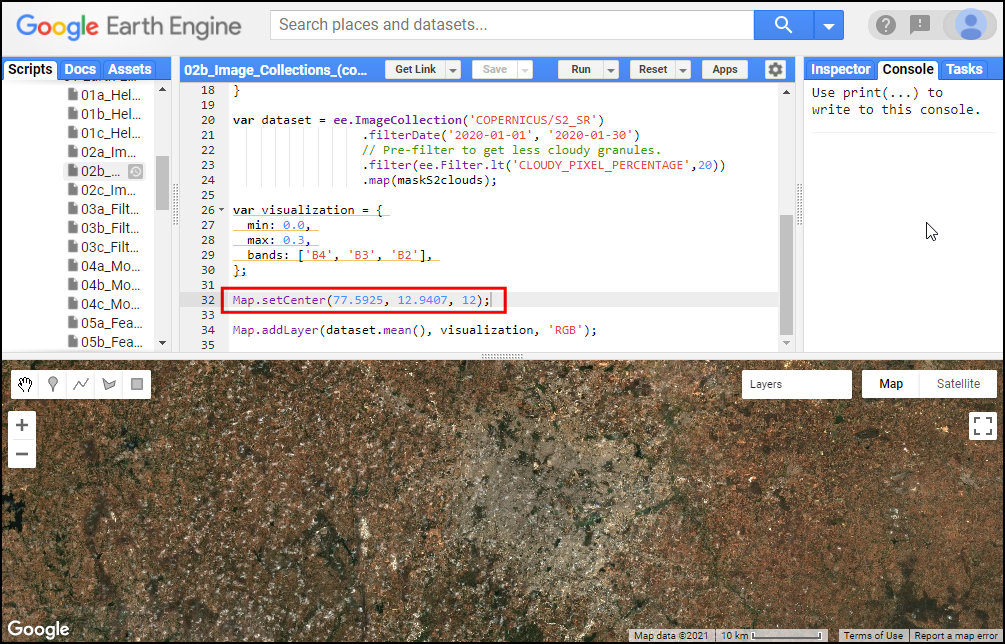

In the code snippet, you will see a function Map.setCenter(), which sets the viewport to a specific location and zoom level. The function takes the X coordinate (longitude), the Y coordinate (latitude) and a zoom level ranging from 1 (whole world) to 22 (pixel level). Replace the X and Y coordinates with the coordinates of your city and click Run to see the images of your city.
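The sample snippet below also masks clouds by testing individual bits of the QA60 band. As a minimal sketch of that bit test, here is the same logic in plain JavaScript on single numbers, with no Earth Engine API involved. The helper isClear and the sample values are hypothetical and are not part of the course scripts; the code is meant to run in Node.js or a browser console.

```javascript
// Plain JavaScript sketch (no Earth Engine API) of the QA60 bit test.
// isClear is a hypothetical helper used only for illustration.
var CLOUD_BIT_MASK = 1 << 10;   // 1024: bit 10 flags clouds
var CIRRUS_BIT_MASK = 1 << 11;  // 2048: bit 11 flags cirrus

function isClear(qa) {
  // A pixel is clear only when both flags are zero.
  return (qa & CLOUD_BIT_MASK) === 0 && (qa & CIRRUS_BIT_MASK) === 0;
}

// Hypothetical QA values: no flags, cloud only, cirrus only, both.
var samples = [0, 1024, 2048, 3072];
var flags = samples.map(isClear);
console.log(flags);
```

Each sample has a different combination of the two flags set, and only the value with both bits at zero is treated as clear.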

/**

* Function to mask clouds using the Sentinel-2 QA band

* @param {ee.Image} image Sentinel-2 image

* @return {ee.Image} cloud masked Sentinel-2 image

*/

function maskS2clouds(image) {

var qa = image.select('QA60');

// Bits 10 and 11 are clouds and cirrus, respectively.

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

// Both flags should be set to zero, indicating clear conditions.

var mask = qa.bitwiseAnd(cloudBitMask).eq(0)

.and(qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask).divide(10000);

}

// Map the function over one month of data and take the median.

// Load Sentinel-2 TOA reflectance data.

var dataset = ee.ImageCollection('COPERNICUS/S2_HARMONIZED')

.filterDate('2018-01-01', '2018-01-31')

// Pre-filter to get less cloudy granules.

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 20))

.map(maskS2clouds);

var rgbVis = {

min: 0.0,

max: 0.3,

bands: ['B4', 'B3', 'B2'],

};

Map.setCenter(77.61, 12.97, 10);

Map.addLayer(dataset.median(), rgbVis, 'RGB');

Exercise

/**

* Function to mask clouds using the Sentinel-2 QA band

* @param {ee.Image} image Sentinel-2 image

* @return {ee.Image} cloud masked Sentinel-2 image

*/

function maskS2clouds(image) {

var qa = image.select('QA60');

// Bits 10 and 11 are clouds and cirrus, respectively.

var cloudBitMask = 1 << 10;

var cirrusBitMask = 1 << 11;

// Both flags should be set to zero, indicating clear conditions.

var mask = qa.bitwiseAnd(cloudBitMask).eq(0)

.and(qa.bitwiseAnd(cirrusBitMask).eq(0));

return image.updateMask(mask).divide(10000);

}

// Map the function over one month of data and take the median.

// Load Sentinel-2 TOA reflectance data.

var dataset = ee.ImageCollection('COPERNICUS/S2_HARMONIZED')

.filterDate('2018-01-01', '2018-01-31')

// Pre-filter to get less cloudy granules.

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 20))

.map(maskS2clouds);

var rgbVis = {

min: 0.0,

max: 0.3,

bands: ['B4', 'B3', 'B2'],

};

Map.setCenter(77.61, 12.97, 10);

Map.addLayer(dataset.median(), rgbVis, 'RGB');

03. Filtering Image Collections

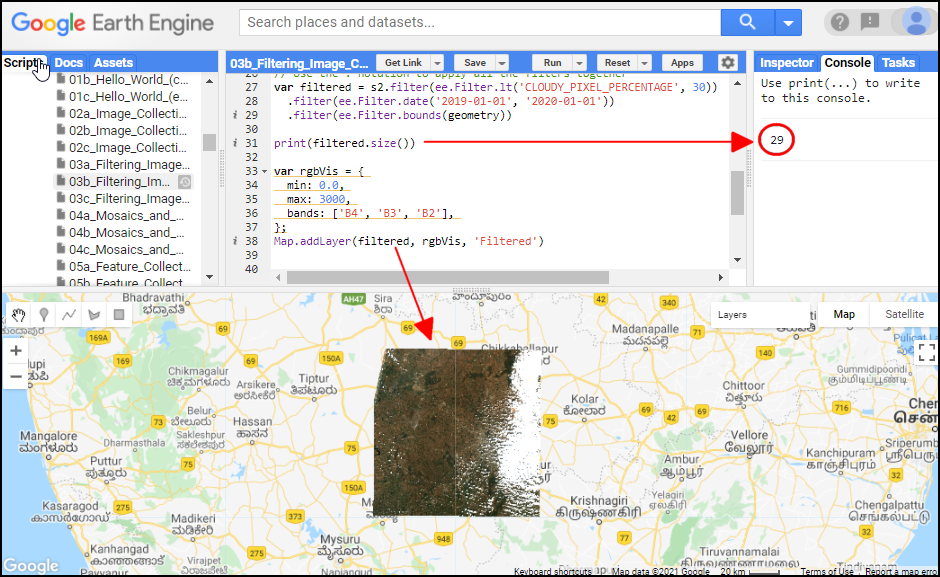

The collection contains all imagery ever collected by the sensor. An entire collection is rarely useful on its own, since most applications require only a subset of the images. We use filters to select the appropriate images. There are many types of filter functions; look at the ee.Filter... module to see all available filters. Select a filter and then run the filter() function with the filter parameters.

We will learn about 3 main types of filtering techniques:

- Filter by metadata: You can apply a filter on the image metadata using filters such as ee.Filter.eq(), ee.Filter.lt() etc. You can filter by PATH/ROW values, Orbit number, Cloud cover etc.

- Filter by date: You can select images in a particular date range using filters such as ee.Filter.date().

- Filter by location: You can select the subset of images with a bounding box, location or geometry using ee.Filter.bounds(). You can also use the drawing tools to draw a geometry for filtering.

After applying the filters, you can use the .size()

function to check how many images match the filter criteria.
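To make the idea of chained filters concrete, the following is a rough plain-JavaScript analogy that runs without Earth Engine. The records, the rectangular footprints and the helper names (cloudBelow, dateBetween, covers) are all hypothetical. Real image footprints are polygons and ee.Filter.bounds() works on geometries, so treat this only as a sketch of how each filter narrows the previous result.

```javascript
// Plain JavaScript analogy of metadata, date and location filters.
// All records and helper names below are hypothetical.
var bengaluruTile = {west: 77.0, south: 12.5, east: 78.0, north: 13.5};
var mumbaiTile = {west: 72.5, south: 18.5, east: 73.5, north: 19.5};

var images = [
  {id: 'A', cloud: 12, date: '2019-03-14', footprint: bengaluruTile},
  {id: 'B', cloud: 45, date: '2019-06-02', footprint: bengaluruTile},
  {id: 'C', cloud: 5, date: '2018-11-20', footprint: bengaluruTile},
  {id: 'D', cloud: 20, date: '2019-09-30', footprint: mumbaiTile},
  {id: 'E', cloud: 8, date: '2019-12-31', footprint: bengaluruTile}
];

var point = {lon: 77.604, lat: 12.953};

function cloudBelow(maxCloud) {
  return function(img) { return img.cloud < maxCloud; };
}

function dateBetween(start, end) {
  // Start is inclusive and end is exclusive.
  // ISO date strings compare correctly as plain strings.
  return function(img) { return img.date >= start && img.date < end; };
}

function covers(pt) {
  return function(img) {
    var f = img.footprint;
    return pt.lon >= f.west && pt.lon <= f.east &&
           pt.lat >= f.south && pt.lat <= f.north;
  };
}

// Chain the three filters, each one working on the previous result.
var filtered = images
  .filter(cloudBelow(30))
  .filter(dateBetween('2019-01-01', '2020-01-01'))
  .filter(covers(point));

var ids = filtered.map(function(img) { return img.id; });
console.log(ids, filtered.length);
```

Only records A and E pass all three conditions here: B is too cloudy, C falls outside the date range, and D does not cover the point.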

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241])

Map.centerObject(geometry, 10)

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

// Filter by metadata

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

// Filter by date

var filtered = s2.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

// Filter by location

var filtered = s2.filter(ee.Filter.bounds(geometry))

// Let's apply all the 3 filters together on the collection

// First apply metadata filter

var filtered1 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

// Apply date filter on the results

var filtered2 = filtered1.filter(

ee.Filter.date('2019-01-01', '2020-01-01'))

// Lastly apply the location filter

var filtered3 = filtered2.filter(ee.Filter.bounds(geometry))

// Instead of applying filters one after the other, we can 'chain' them

// Use the . notation to apply all the filters together

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

print(filtered.size());

Exercise

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241]);

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

print(filtered.size());

// Exercise

// Delete the 'geometry' import

// Add a point at your chosen location

// Change the filter to find images from January 2023

// Note: If you are in a very cloudy region,

// make sure to adjust the CLOUDY_PIXEL_PERCENTAGE

04. Mosaics and Composites

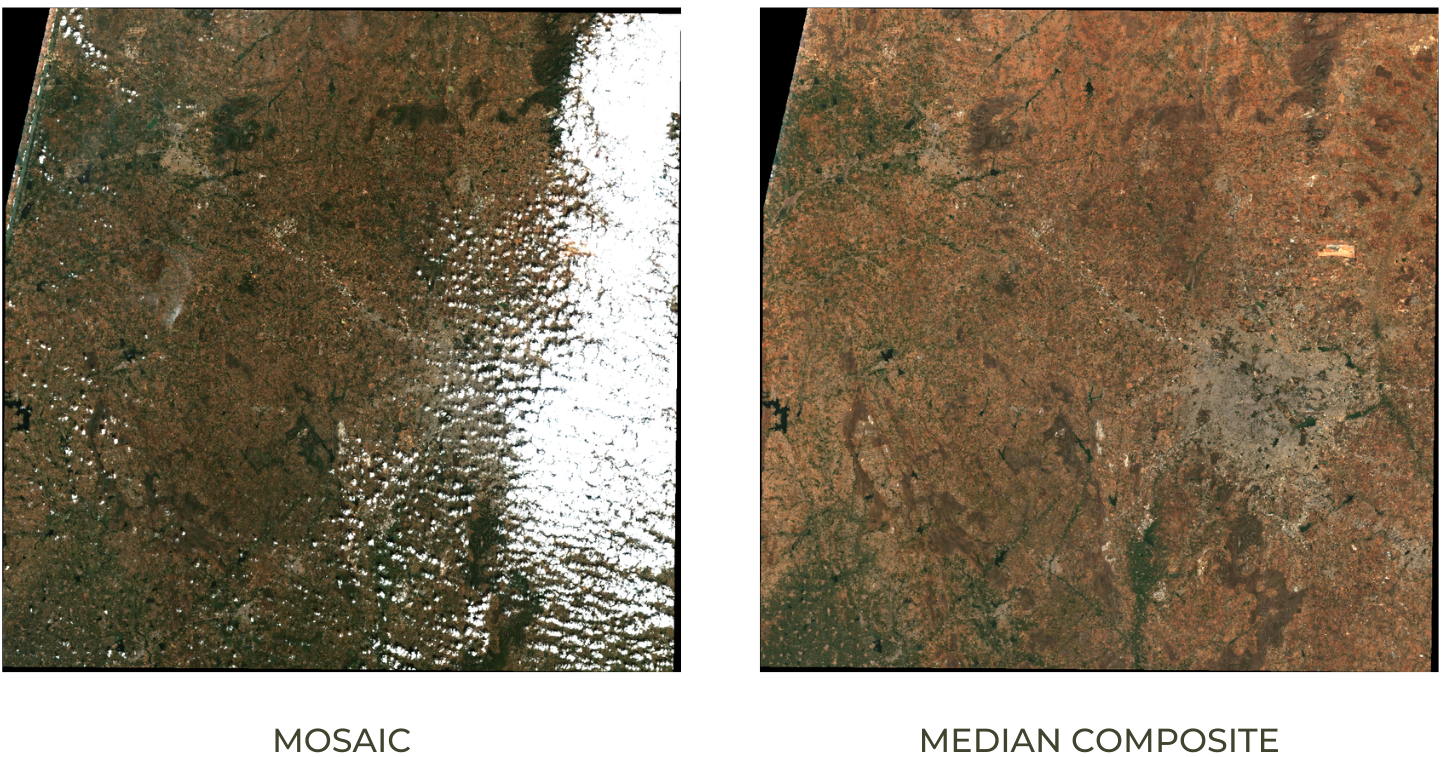

The default order of the collection is by date. So when you display the collection, it implicitly creates a mosaic with the latest pixels on top. You can call .mosaic() on an ImageCollection to create a mosaic image from the pixels at the top (i.e., from the image at the top of the stack).

We can also create a composite image by applying selection criteria to each pixel from all pixels in the stack. Here we use the .median() function to create a composite where each pixel value is the median of all values at that pixel location across the stack, computed separately for each matching band.

Tip: If you need to create a mosaic where the images are in a specific order, you can use the .sort() function to sort your collection by a property first.
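The difference between a mosaic and a median composite can be sketched for a single pixel in plain JavaScript, without the Earth Engine API. The values below are hypothetical, null stands in for a masked pixel, and averaging the two middle values for an even count is a convention of this sketch rather than a statement about how Earth Engine computes medians.

```javascript
// One pixel location across a date-sorted stack of images.
// null marks a masked pixel; the last value is a bright, cloudy pixel.
var stack = [900, 1000, null, 1100, 950, 3100];

function mosaicPixel(values) {
  // Take the topmost (last) unmasked value.
  for (var i = values.length - 1; i >= 0; i--) {
    if (values[i] !== null) {
      return values[i];
    }
  }
  return null;
}

function medianPixel(values) {
  var valid = values
    .filter(function(v) { return v !== null; })
    .sort(function(a, b) { return a - b; });
  if (valid.length === 0) {
    return null;
  }
  var mid = Math.floor(valid.length / 2);
  // Sketch convention: average the two middle values for an even count.
  return valid.length % 2 === 1 ? valid[mid] : (valid[mid - 1] + valid[mid]) / 2;
}

var mosaicValue = mosaicPixel(stack);
var medianValue = medianPixel(stack);
console.log(mosaicValue, medianValue);
```

In this example the mosaic keeps the cloudy value from the most recent image, while the median picks a typical value and is not affected by the single outlier.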

Mosaic vs. Median Composite

var geometry = ee.Geometry.Point([77.60412933051538, 12.952912912328241])

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

var mosaic = filtered.mosaic()

var medianComposite = filtered.median();

Map.centerObject(geometry, 10)

Map.addLayer(filtered, rgbVis, 'Filtered Collection');

Map.addLayer(mosaic, rgbVis, 'Mosaic');

Map.addLayer(medianComposite, rgbVis, 'Median Composite')

Exercise

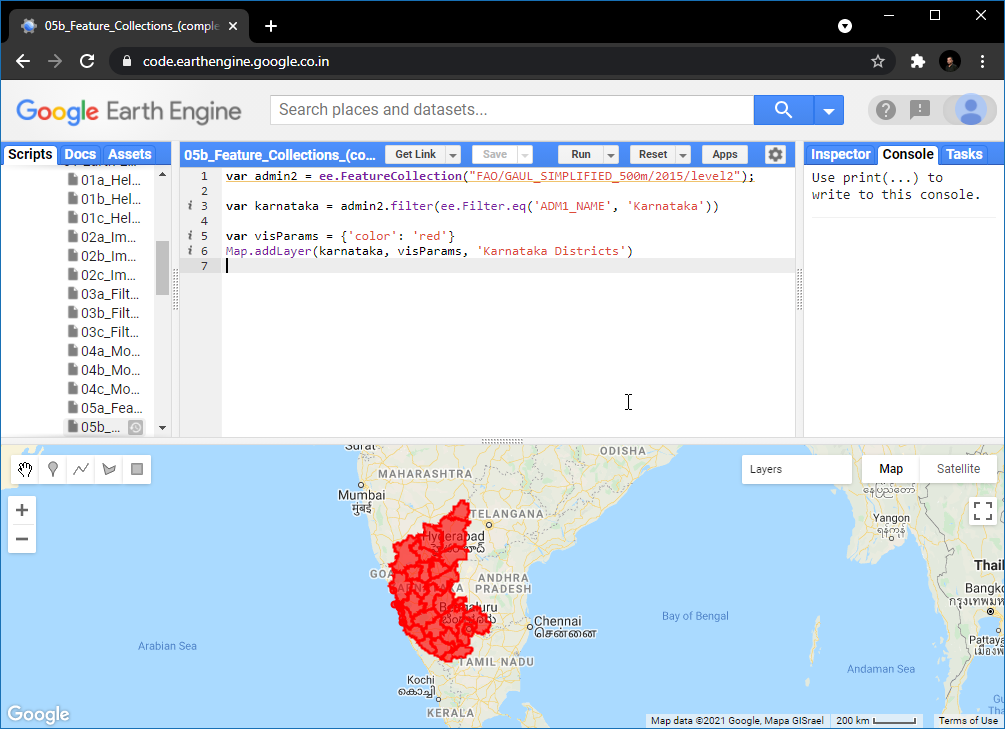

05. Feature Collections

Feature Collections are similar to Image Collections, but they contain Features, not Images. They are equivalent to vector layers in a GIS. We can load, filter and display Feature Collections using techniques similar to those we have learned so far.

Search for GAUL Second Level Administrative Boundaries and

load the collection. This is a global collection that contains all

Admin2 boundaries. We can apply a filter using the

ADM1_NAME property to get all Admin2 boundaries

(i.e. Districts) from a state.

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var filtered = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

Map.centerObject(filtered);

Map.addLayer(filtered, {'color': 'red'}, 'Selected Admin2');

Exercise

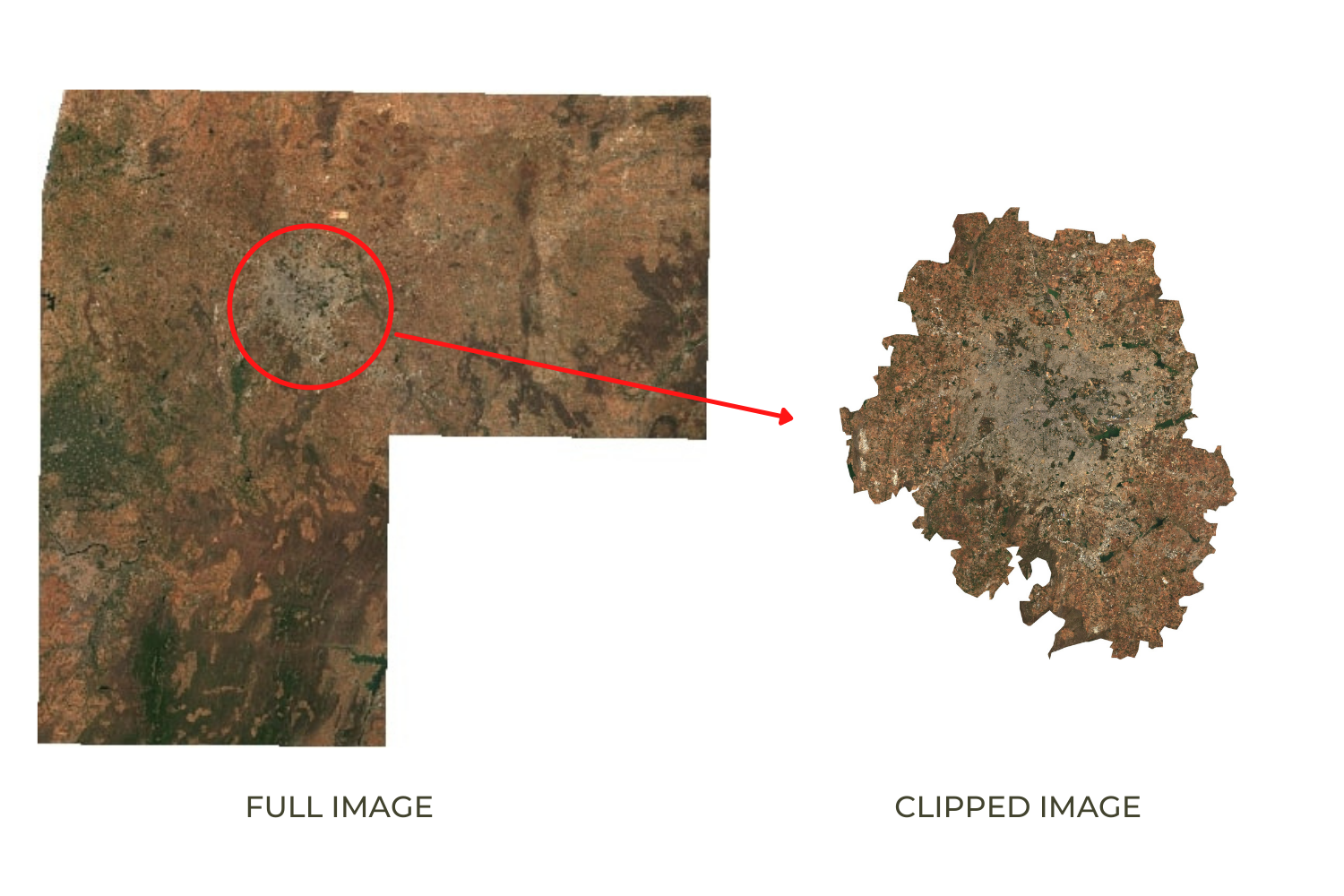

06. Clipping

It is often desirable to clip the images to your area of interest. You can use the clip() function to mask an image using a geometry.

In desktop software, clipping is used to remove the unnecessary portion of a large image to save computation time. In Earth Engine, however, clipping can increase the computation time. As described in the Earth Engine Coding Best Practices guide, avoid clipping or do it at the very end of your process.

Full Image vs. Clipped Image

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

var geometry = filteredAdmin2.geometry();

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

var clipped = image.clip(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.centerObject(geometry);

Map.addLayer(clipped, rgbVis, 'Clipped Composite');

Exercise

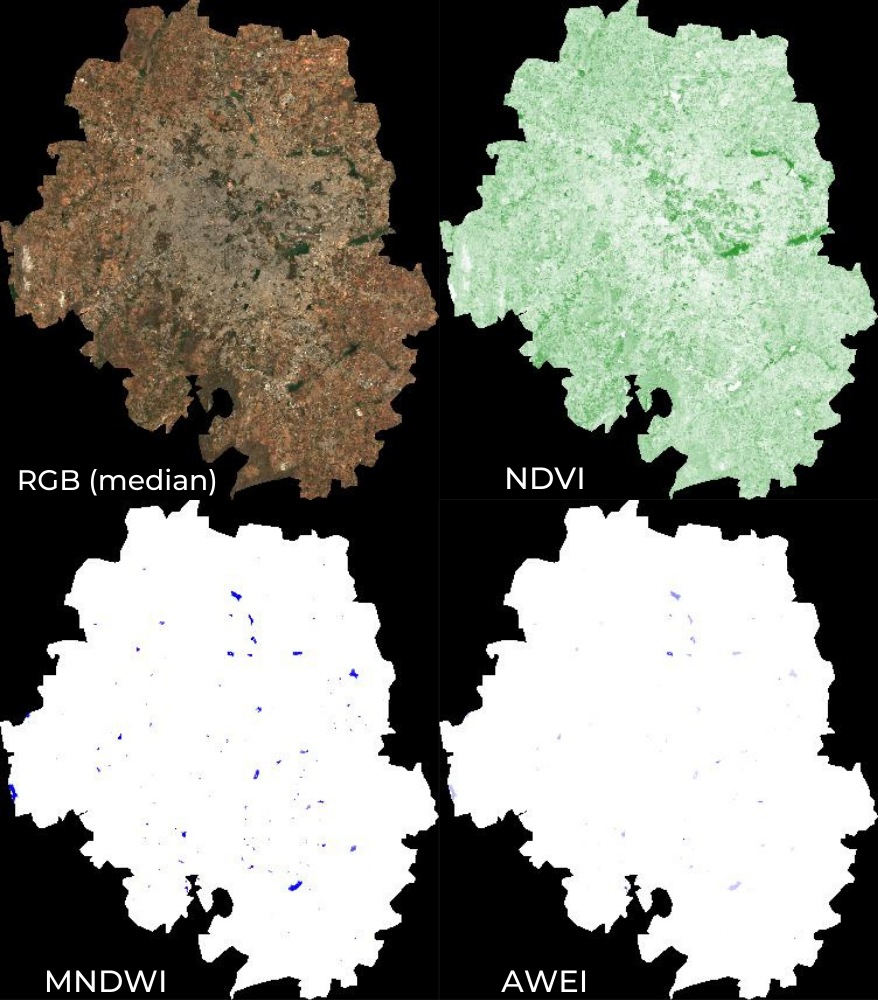

07. Calculating Indices

Spectral indices are central to many aspects of remote sensing. For almost all research or applied work, you will need to compute at least one spectral index. The most common formula for calculating an index is the Normalized Difference between 2 bands. Earth Engine provides a helper function normalizedDifference() to help calculate normalized indices, such as the Normalized Difference Vegetation Index (NDVI) or the Modified Normalized Difference Water Index (MNDWI).

Some indices, such as the Automated Water Extraction Index (AWEI), require calculation using a more complex formula. In such cases, you can use the expression() function, which allows you to construct a formula with common arithmetic operators. The script below shows how to implement common water-related indices in Earth Engine.

Note that the AWEI formula expects the pixel values to be reflectances, so we need to apply a scaling factor of 0.0001 to convert the Sentinel-2 band values to reflectances.
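Before looking at the Earth Engine script, it may help to see the arithmetic on single pixels. The sketch below is plain JavaScript with no Earth Engine API. The two pixels and the helper names are hypothetical, and the AWEI function uses the same expression that the script passes to expression().

```javascript
// Plain JavaScript sketch of the index arithmetic for single pixels.
// Band values are hypothetical Sentinel-2 digital numbers.
var SCALE = 0.0001;  // converts Sentinel-2 band values to reflectance

function normalizedDifference(a, b) {
  return (a - b) / (a + b);
}

function awei(green, nir, swir1, swir2) {
  // Inputs are expected to be reflectances.
  return 4 * (green - swir1) - (0.25 * nir + 2.75 * swir2);
}

function computeIndices(px) {
  return {
    ndvi: normalizedDifference(px.B8, px.B4),
    mndwi: normalizedDifference(px.B3, px.B11),
    awei: awei(px.B3 * SCALE, px.B8 * SCALE, px.B11 * SCALE, px.B12 * SCALE)
  };
}

var waterPixel = {B3: 500, B4: 300, B8: 200, B11: 100, B12: 50};
var landPixel = {B3: 800, B4: 1000, B8: 3000, B11: 2500, B12: 1500};

var water = computeIndices(waterPixel);
var land = computeIndices(landPixel);
console.log(water, land);
```

A normalized difference is a ratio, so it gives the same result whether or not the bands are scaled first. AWEI mixes bands with fixed coefficients, so its magnitude depends on the scale of the inputs, and the formula is defined on reflectances. With these made-up values, the water pixel has positive MNDWI and AWEI while the land pixel has negative values for both.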

RGB, MNDWI, NDVI and AWEI images

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

var geometry = filteredAdmin2.geometry();

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

// Calculate Normalized Difference Vegetation Index (NDVI)

// 'NIR' (B8) and 'RED' (B4)

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

// Calculate Modified Normalized Difference Water Index (MNDWI)

// 'GREEN' (B3) and 'SWIR1' (B11)

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

// Calculate Automated Water Extraction Index (AWEI)

// AWEI is a Multi-band Index that uses the following bands

// 'BLUE' (B2), 'GREEN' (B3), 'NIR' (B8), 'SWIR1' (B11) and 'SWIR2' (B12)

// Formula for AWEI is as follows

// AWEI = 4 * (GREEN - SWIR1) - (0.25 * NIR + 2.75 * SWIR2)

// For more complex indices, you can use the expression() function

// Note:

// For the AWEI formula, the pixel values need to be converted to reflectances

// Multiplying the pixel values by 'scale' gives us the reflectance value

// The scale value is 0.0001 for Sentinel-2 dataset

var awei = image.expression(

'4*(GREEN - SWIR1) - (0.25*NIR + 2.75*SWIR2)', {

'GREEN': image.select('B3').multiply(0.0001),

'NIR': image.select('B8').multiply(0.0001),

'SWIR1': image.select('B11').multiply(0.0001),

'SWIR2': image.select('B12').multiply(0.0001),

}).rename('awei');

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

var ndviVis = {min:0, max:1, palette: ['white', 'green']}

var ndwiVis = {min:0, max:0.5, palette: ['white', 'blue']}

Map.centerObject(geometry, 10)

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

Map.addLayer(ndvi.clip(geometry), ndviVis, 'ndvi')

Map.addLayer(mndwi.clip(geometry), ndwiVis, 'mndwi')

Map.addLayer(awei.clip(geometry), ndwiVis, 'awei')

Exercise

There is another version of AWEI called AWEIsh. This index is useful where there are mountain or building shadows. It works best where there are shadows but no bright reflective surfaces (snow, roofs). The expression for AWEI (sh) is as follows:

AWEI (sh) = BLUE + 2.5*GREEN - 1.5*(NIR + SWIR1) - 0.25*SWIR2

We have implemented both versions of AWEI in the script. Add them to the map and visualize both of them.

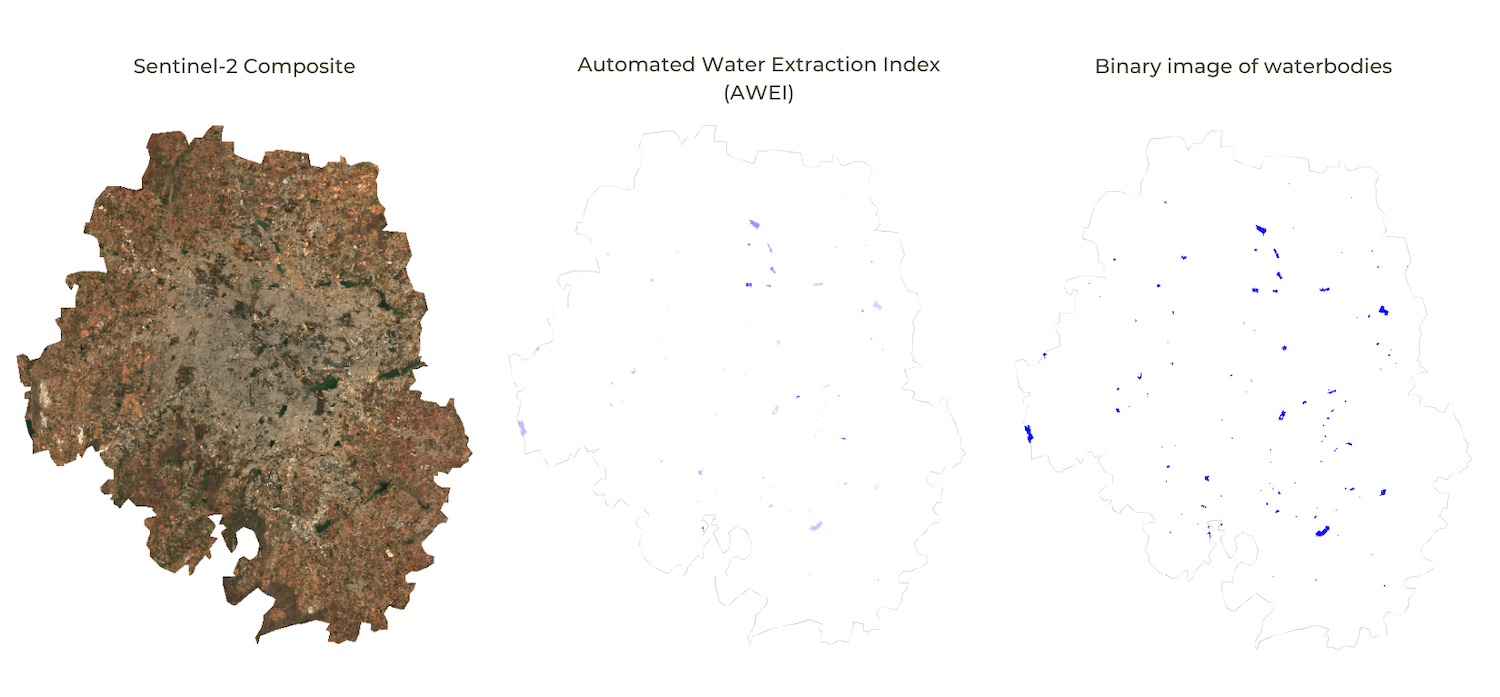

08. Computation on Images

Inspecting the MNDWI or AWEI index images, you will see that water pixels have a higher value than other pixels. If we want to extract those pixels, we can apply a threshold value to select all pixels above a certain value. The result will be a binary image with pixel values 1 or 0. For all the pixels that match the condition, the resulting value will be 1, and for other pixels it will be 0. The conditions can be written using conditional operators such as greater than .gt(), less than .lt(), equal to .eq(), greater than or equal to .gte(), etc.

We can also combine multiple layers to create a condition using boolean operators such as the AND .and() and OR .or() functions. The script below shows how to implement static thresholding in Earth Engine.
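The per-pixel logic of thresholding can be sketched in plain JavaScript on small arrays, without the Earth Engine API. The index values are hypothetical, and the helpers gt, lt and and are local stand-ins that only mimic what the image operators of the same name do for each pixel.

```javascript
// Plain JavaScript sketch of per-pixel thresholding.
// Each array position represents one pixel; values are hypothetical.
var mndwi = [0.45, -0.30, 0.05, 0.20, -0.10];
var ndvi = [-0.20, 0.60, 0.10, -0.05, 0.30];

function gt(values, threshold) {
  return values.map(function(v) { return v > threshold ? 1 : 0; });
}

function lt(values, threshold) {
  return values.map(function(v) { return v < threshold ? 1 : 0; });
}

function and(a, b) {
  // 1 only where both inputs are non-zero.
  return a.map(function(v, i) { return (v && b[i]) ? 1 : 0; });
}

// Simple threshold: water where MNDWI is above 0.
var waterMndwi = gt(mndwi, 0);

// Combined condition: NDVI below 0 AND MNDWI above 0.
var waterMultiple = and(lt(ndvi, 0), gt(mndwi, 0));

console.log(waterMndwi, waterMultiple);
```

The third pixel passes the simple MNDWI threshold but is dropped by the combined condition because its NDVI is positive, which shows how a second condition can make the result more conservative.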

Extracting Waterbodies using a Simple Threshold

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

var geometry = filteredAdmin2.geometry();

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

var awei = image.expression(

'4*(GREEN - SWIR1) - (0.25*NIR + 2.75*SWIR2)', {

'GREEN': image.select('B3').multiply(0.0001),

'NIR': image.select('B8').multiply(0.0001),

'SWIR1': image.select('B11').multiply(0.0001),

'SWIR2': image.select('B12').multiply(0.0001),

}).rename('awei');

// Simple Thresholding

var waterMndwi = mndwi.gt(0);

var waterAwei = awei.gt(0);

// Combining Multiple Conditions

var waterMultiple = ndvi.lt(0).and(mndwi.gt(0));

var rgbVis = {min: 0, max: 3000, bands: ['B4', 'B3', 'B2']};

var waterVis = {min:0, max:1, palette: ['white', 'blue']};

Map.centerObject(geometry, 10);

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

Map.addLayer(waterMndwi.clip(geometry), waterVis, 'MNDWI - Simple threshold');

Map.addLayer(waterAwei.clip(geometry), waterVis, 'AWEI - Simple threshold');

Map.addLayer(waterMultiple.clip(geometry), waterVis, 'MNDWI and NDVI Threshold');

Exercise

Replace the selected region with the region of your choice and adjust the MNDWI thresholds to extract the waterbodies.

Try different thresholds and add images to the map to compare

- Hint: Try negative thresholds -0.1 and -0.2

- Hint: Try positive thresholds 0.1 and 0.2

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

var geometry = filteredAdmin2.geometry();

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

// Simple Thresholding

var waterMndwi = mndwi.gt(0);

// Exercise

// Replace the selected region with your choice

// And adjust the MNDWI thresholds to extract the waterbodies

// Try different thresholds and add images to the map to compare

// Hint: Try negative thresholds -0.1 and -0.2

// Hint: Try positive thresholds 0.1 and 0.2

var rgbVis = {min: 0, max: 3000, bands: ['B4', 'B3', 'B2']};

var waterVis = {min:0, max:1, palette: ['black', 'white']};

Map.centerObject(geometry, 10);

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

Map.addLayer(waterMndwi.clip(geometry), waterVis, 'MNDWI (Threshold 0)');

09. Export

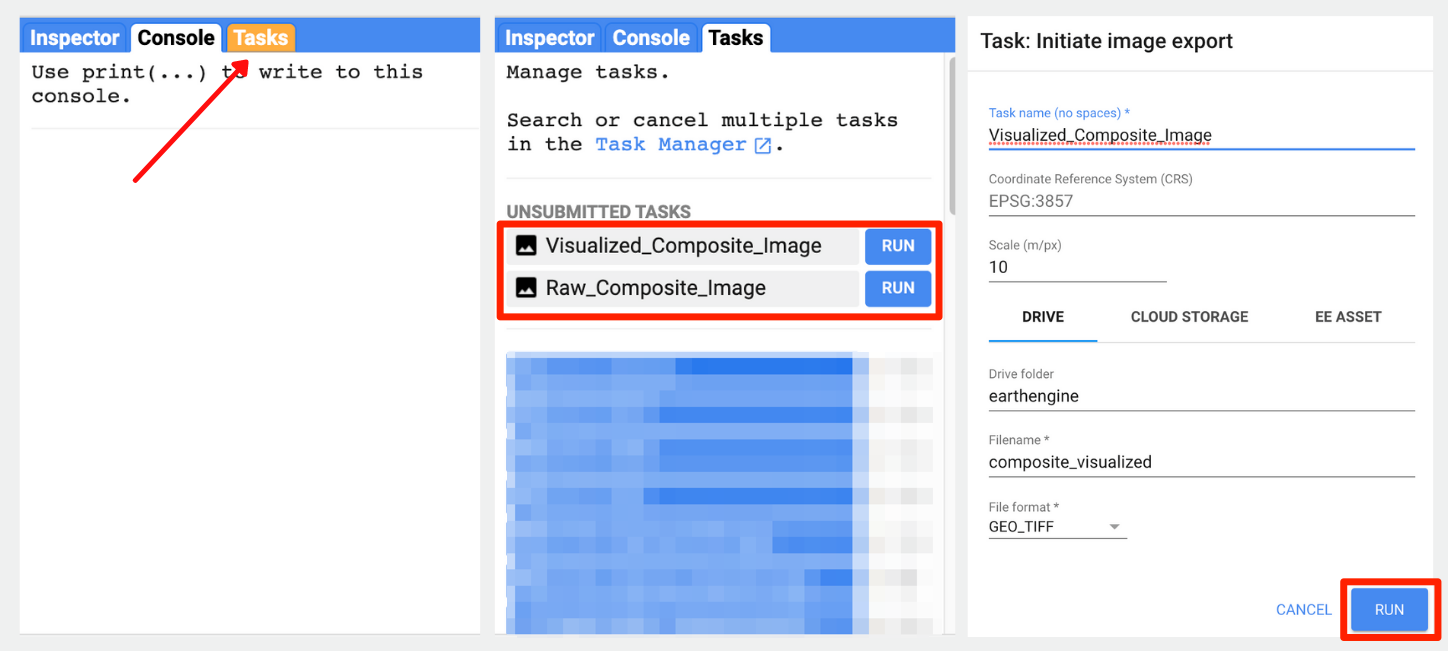

Earth Engine allows for exporting both vector and raster data to be

used in an external program. Vector data can be exported as a

CSV or a Shapefile, while Rasters can be

exported as GeoTIFF files. We will now export the

Sentinel-2 Composite as a GeoTIFF file.

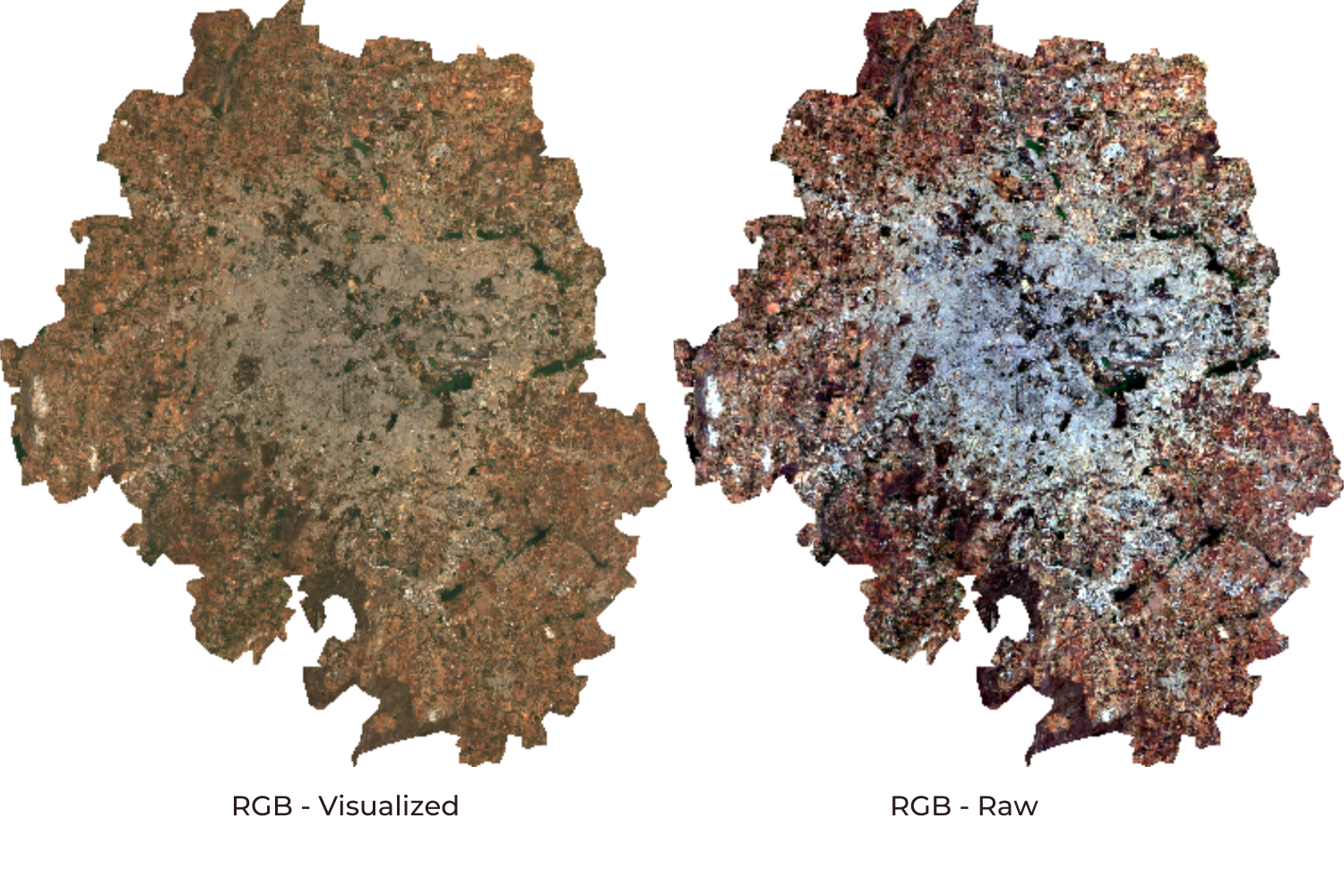

During the export, we can export either a raw image or a visualized image. The raw image retains the original pixel values and is ideal if the image needs to be used for further analysis. The visualized image is an RGB rendering of the image. It will look exactly as you see it in Earth Engine with the visualization parameters applied, but the original pixel values will be lost. The visualized image should not be used for analysis purposes.
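Why the visualized image loses the original values can be sketched with a simple linear stretch in plain JavaScript. This is an illustration under assumptions and not a description of Earth Engine's internals: the helper toByte is hypothetical, the min and max mirror the rgbVis parameters used in the script, and the rounding behaviour is chosen for this sketch only.

```javascript
// Plain JavaScript sketch of an 8-bit linear stretch.
// toByte is a hypothetical helper; rounding is an assumption of this sketch.
function toByte(value, min, max) {
  var stretched = (value - min) / (max - min) * 255;
  // Clamp to the displayable range 0-255.
  return Math.max(0, Math.min(255, Math.round(stretched)));
}

// Hypothetical raw band values, stretched with min 0 and max 3000.
var raw = [0, 1500, 3000, 4500];
var bytes = raw.map(function(v) { return toByte(v, 0, 3000); });
console.log(bytes);
```

The raw values 3000 and 4500 end up as the same display value, so the original numbers cannot be recovered from the visualized image.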

Tip: The Code Editor supports autocompletion of API functions using the combination Ctrl+Space. Type a few characters of a function and press Ctrl+Space to see autocomplete suggestions. You can also use the same key combination to fill all parameters of the function automatically.

var admin2 = ee.FeatureCollection("FAO/GAUL_SIMPLIFIED_500m/2015/level2");

var s2 = ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED");

var filteredAdmin2 = admin2

.filter(ee.Filter.eq('ADM1_NAME', 'Karnataka'))

.filter(ee.Filter.eq('ADM2_NAME', 'Bangalore Urban'));

var geometry = filteredAdmin2.geometry();

var filteredS2 = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry));

var image = filteredS2.median();

var ndvi = image.normalizedDifference(['B8', 'B4']).rename(['ndvi']);

var mndwi = image.normalizedDifference(['B3', 'B11']).rename(['mndwi']);

var waterMndwi = mndwi.gt(0);

var rgbVis = {min: 0, max: 3000, bands: ['B4', 'B3', 'B2']};

var waterVis = {min:0, max:1, palette: ['black', 'white']};

Map.centerObject(geometry, 10);

Map.addLayer(image.clip(geometry), rgbVis, 'Image');

Map.addLayer(waterMndwi.clip(geometry), waterVis, 'MNDWI');

var exportImage = image.select(['B4', 'B3', 'B2']);

Export.image.toDrive({

image: exportImage.clip(geometry),

description: 'Raw_Composite_Image',

folder: 'earthengine',

fileNamePrefix: 'composite',

region: geometry,

scale: 10,

maxPixels: 1e10});

var visualizedImage = image.visualize(rgbVis);

Export.image.toDrive({

image: visualizedImage.clip(geometry),

description: 'Visualized_Composite_Image',

folder: 'earthengine',

fileNamePrefix: 'composite_visualized',

region: geometry,

scale: 10,

maxPixels: 1e10

});

Once you run this script, the Tasks tab will be highlighted. Switch to the tab and you will see the tasks waiting. Click Run next to each task to start the process. On clicking the Run button, a confirmation dialog will appear. Verify the settings and click Run to start the export.

Console, Tasks and Confirmation.

Once the export finishes, a GeoTIFF file for each export task will be added to your Google Drive in the specified folder. You can download them and use them in any program that reads GeoTIFF files.

Visualized vs Raw

Module 2: Surface Water Mapping

In this module, we will learn how to extract surface water polygons from a chosen watershed. We will use the HydroSHEDS and JRC Global Surface Water datasets. We will map the surface water for a chosen year, mask non-water pixels, apply morphological filters to fill holes and convert the raster image into polygons.

01. Load Global Surface Water Data

Let’s load the JRC Global Surface Water Mapping Layers. This data contains the spatio-temporal distribution of surface water. It is a single image with 7 bands, where each band contains unique information. Let’s use the occurrence band, which contains the frequency with which water was present from 1984 to 2020. The pixel value ranges from 0 to 100, where 0 represents no trace of water in any year and 100 represents water present throughout the period.

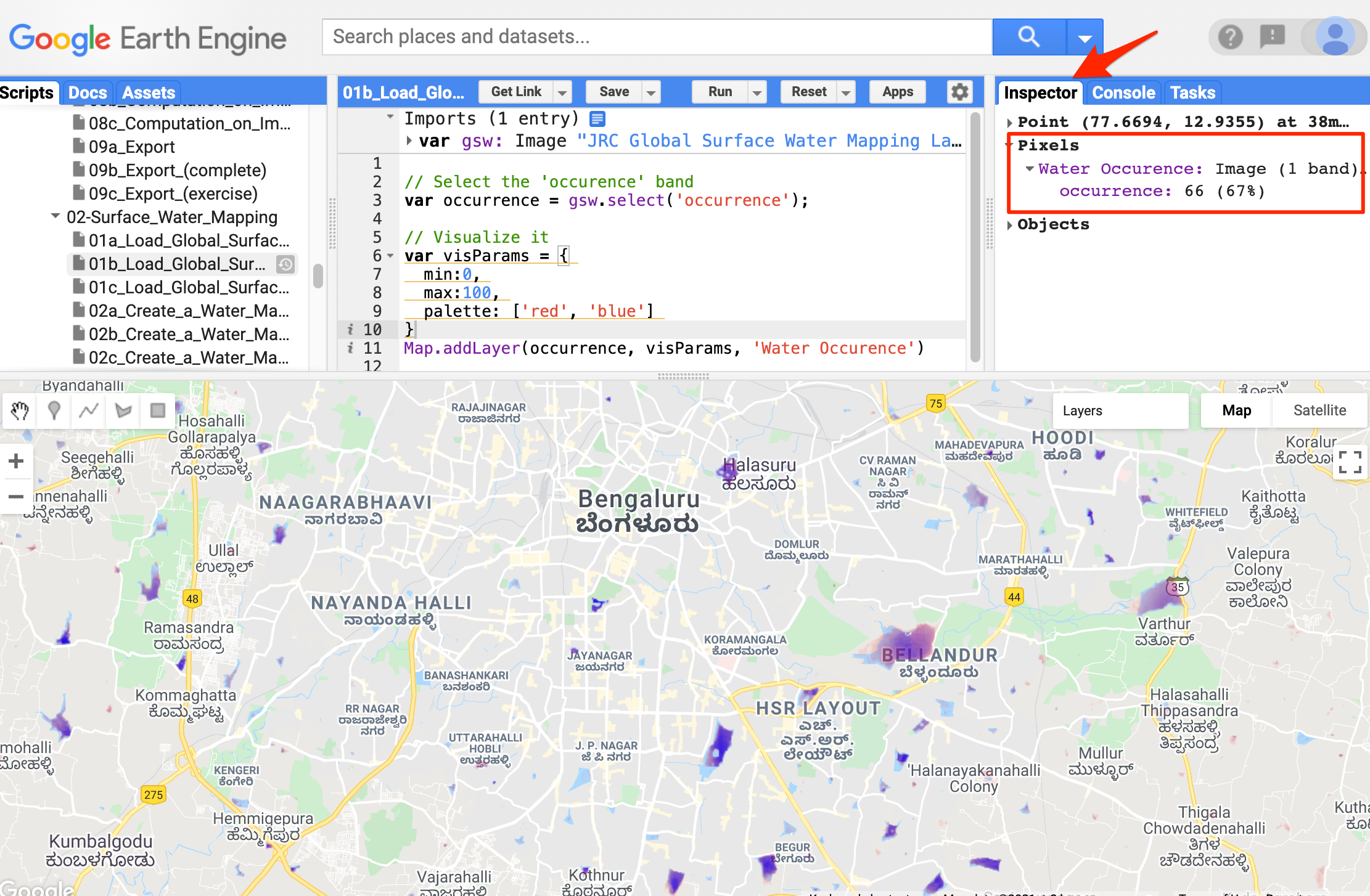

Use the Inspector to find the frequency of water present in the location.


Frequency of water present over Bellandur lake

Exercise

The max_extent band gives information on whether a pixel ever contained water. A value of 0 means no water was detected in the pixel in any month, and 1 means the pixel contained water for at least 1 month.

Load the max_extent band and visualize it with the correct parameters.

02. Create a Water Mask

Earth Engine uses masks to represent the No Data values in an Image. Any masked pixel of an image will not be used for analysis or visualization. There are two masking functions: selfMask and updateMask.

The selfMask function removes all pixels with a value of zero. In the max_extent band visualization, we saw that the pixel value is either 0 or 1. Pixels with the value 0 contain no information, while pixels with the value 1 represent water occurrence. So to remove the No Data pixels from the image, we can use the selfMask function, which masks all pixels with the value 0.

The updateMask function masks a primary image using a mask image. A mask image is an image with pixel values of 0 or 1. The primary image pixels that overlap 0-value pixels in the mask image will be set as No Data and masked out.
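The two masking functions can be sketched on small arrays in plain JavaScript, without the Earth Engine API. Here null stands in for a masked (No Data) pixel, the pixel values are hypothetical, and the local functions updateMask and selfMask only mimic the per-pixel behaviour described above.

```javascript
// Plain JavaScript sketch of masking; null represents a masked pixel.
var MASKED = null;

function updateMask(image, mask) {
  // Keep a pixel only if it is not already masked and the mask is non-zero.
  return image.map(function(value, i) {
    return (value === MASKED || !mask[i]) ? MASKED : value;
  });
}

function selfMask(image) {
  // Mask the image with itself, removing its zero-valued pixels.
  return updateMask(image, image);
}

// Hypothetical pixels from the max_extent and occurrence bands.
var maxExtent = [0, 1, 1, 0, 1];
var occurrence = [0, 80, 15, 3, 100];

var waterOnly = selfMask(maxExtent);
var occurrenceInWater = updateMask(occurrence, maxExtent);
console.log(waterOnly, occurrenceInWater);
```

Note that the fourth occurrence pixel has a non-zero value but is still removed, because updateMask follows the mask image and not the values of the primary image.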

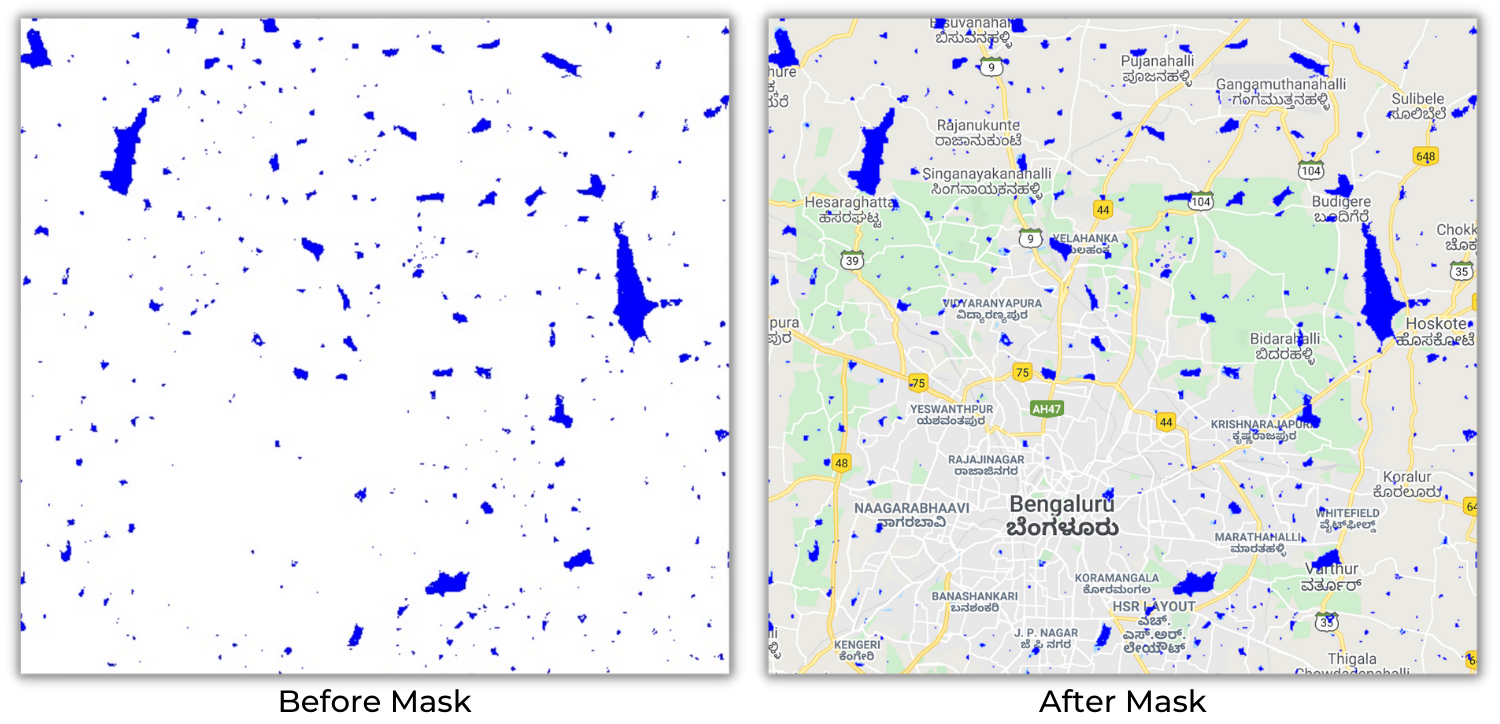

Before applying Mask vs After applying Mask

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

// Select the 'max_extent' band

var water = gsw.select('max_extent');

// max_extent band has values 0 or 1

// We can remove the 0 values by masking the layer

// Mask needs to have 0 or 1 values

// 0 - remove the pixel

// 1 - keep the pixel

var masked = water.updateMask(water)

// There is a handy short-hand for masking out 0 values for a layer

// You can call selfMask() which will mask the image with itself

// Effectively removing 0 values and retaining non-zero values

var masked = water.selfMask()

Map.addLayer(masked, {}, 'Water');

Exercise

The seasonality band gives information on the number of months water was present in a pixel. The pixel value ranges from 1 to 12, where 1 represents water present for 1 month and 12 represents water present for 12 months in a year.

Select all pixels in which water is present for more than 5 months in a year.

03. Find Lost Waterbodies

The transition band records water class changes between 1986 and 2020. It has 10 classes representing different changes, in which classes 3 and 6 represent permanent and seasonal water loss. We can calculate the total area of surface water lost by selecting both classes.

The binary operator eq is used to select a particular class and create a binary image representing the presence of the class. The boolean operator or is used to create a binary image where either class value is present.
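The class selection and a rough area estimate can be sketched in plain JavaScript, without the Earth Engine API. The class values below are hypothetical, and the area uses a flat 30 m x 30 m pixel as a simplifying assumption. The true ground area of a pixel varies with location and projection, so in Earth Engine a function such as ee.Image.pixelArea() would be the better basis for a real calculation.

```javascript
// Plain JavaScript sketch: select classes 3 and 6, then estimate area.
// Hypothetical transition class values, one per pixel.
var transition = [0, 3, 6, 1, 3, 10, 6, 2];

// 1 where the class is 3 or 6, 0 elsewhere (eq combined with or).
var lostWater = transition.map(function(c) {
  return (c === 3 || c === 6) ? 1 : 0;
});

// Assumption: every pixel covers a nominal 30 m x 30 m on the ground.
var PIXEL_AREA_SQM = 30 * 30;

var lostPixels = lostWater.reduce(function(sum, v) { return sum + v; }, 0);
var lostAreaSqKm = lostPixels * PIXEL_AREA_SQM / 1e6;

console.log(lostWater, lostPixels, lostAreaSqKm);
```

Summing a binary image is equivalent to counting the selected pixels, which is why the binary result is a convenient starting point for area statistics.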

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

// Select the 'transition' band

var water = gsw.select('transition');

var lostWater = water.eq(3).or(water.eq(6));

var visParams = {

min:0,

max:1,

palette: ['white','red']

};

// Mask '0' value pixels

var lostWater = lostWater.selfMask();

Map.addLayer(lostWater, visParams, 'Lost Water');

Exercise

Use the transition band and find the total surface water

gain.

04. Get Yearly Water History

The JRC Yearly Water Classification History image collection contains the spatio-temporal map of surface water from 1984 to 2020. It contains 37 images, one for each year.

Let us filter this collection to visualize the surface water map of the year 2020. Each image contains 4 classes, where class values 2 and 3 indicate water presence.

After filtering an Image Collection, the result is still a collection, even if it contains just 1 image. We can use the function .first() to extract that image.

var gswYearly = ee.ImageCollection("JRC/GSW1_4/YearlyHistory");

// Filter using the 'year' property

var filtered = gswYearly.filter(ee.Filter.eq('year', 2020));

var gsw2020 = ee.Image(filtered.first());

// Select permanent or seasonal water

var water2020 = gsw2020.eq(2).or(gsw2020.eq(3));

// Mask '0' value pixels

var water2020 = water2020.selfMask();

var visParams = {

min:0,

max:1,

palette: ['white','blue']

};

Map.addLayer(water2020, visParams, '2020 Water');

Exercise

Use the JRC Yearly Water Classification History dataset

and visualize the surface water map for the year 2010.

05. Locate a SubBasin

HydroSHEDS is a series of data products primarily developed by the World Wildlife Fund. It contains global geo-referenced stream networks, watershed boundaries, and drainage directions. We will use hydroBASINS, a feature collection containing drainage basin boundaries. This data is created using SRTM DEM data. There are multiple levels of hydroBASINS, ranging from 1 to 12. At level 1, the basin boundaries are delineated as very large groups. At each higher level, these boundaries are sub-divided into smaller basins.

We will use the Level 7 hydroBASINS feature collection to filter and locate a sub-basin called Arkavathy. The hydroBASINS collection doesn’t contain basin names, so a basin must be located visually and then selected by filtering on its HYBAS_ID property.

var hydrobasins = ee.FeatureCollection("WWF/HydroSHEDS/v1/Basins/hybas_7");

var basin = hydrobasins

.filter(ee.Filter.eq('HYBAS_ID', 4071139640));

Map.centerObject(basin);

Map.addLayer(basin, {color: 'grey'}, 'Arkavathy Sub Basin');

Exercise

Using the Level 7 hydroBASINS, locate and filter a watershed basin in your region of interest.

06. Create a Surface Water Map

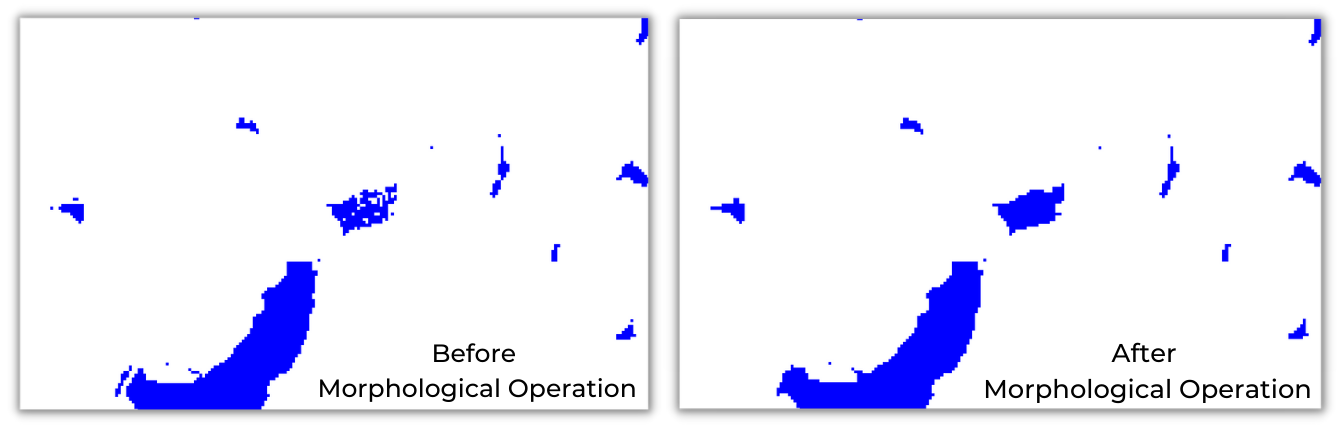

A raster image output can be post-processed using morphological operations to create a clean-looking surface map. There are different types of operations like Morphological Opening, Morphological Closing, and Majority Filtering.

Warning: We are processing the image here to clean it up to create a mapping product, but this changes the structure of the image and alters the statistics. Skip this step if your goal is to detect water pixels or compute the surface water area.

- The opening operation can reduce the salt and pepper noise in the image.

- The closing operation can fill holes and create a complete class.

- A majority filter will smooth the image. This operation should be used for mapping purposes only and not used while calculating statistics, as it will significantly alter the result.

Earth Engine has many functions to perform morphological operations under ee.Image. Let’s use the max_extent band from Global Surface Water and fill holes to create a surface water map. A closing operation is a Dilation followed by an Erosion, which translates to the focalMax function chained with focalMin in Earth Engine. Different kernelType values are available. Let’s use the square kernel with a radius of 30 meters, as the spatial resolution of the JRC Global Surface Water dataset is 30m. We can also define a custom kernel if the required kernel is not available as a kernelType.
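To build intuition for what a closing does, here is a minimal sketch in plain JavaScript. It runs outside Earth Engine (for example in Node.js) and is not how Earth Engine implements focalMax and focalMin. The 7x7 grid, the 3x3 window (a radius of one pixel) and the helper names focal and closing are all assumptions made for illustration.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Apply a 3x3 square neighborhood operation (Math.max or Math.min)
// to every cell of a grid. Neighbors outside the grid are skipped.
function focal(grid, op) {
  return grid.map(function(row, r) {
    return row.map(function(value, c) {
      var result = value;
      for (var dr = -1; dr <= 1; dr++) {
        for (var dc = -1; dc <= 1; dc++) {
          var rr = r + dr;
          var cc = c + dc;
          if (rr >= 0 && rr < grid.length && cc >= 0 && cc < row.length) {
            result = op(result, grid[rr][cc]);
          }
        }
      }
      return result;
    });
  });
}

// Closing = dilation (max) followed by erosion (min)
function closing(grid) {
  return focal(focal(grid, Math.max), Math.min);
}

// A hypothetical waterbody (1 = water) with a one-pixel hole in the middle
var water = [
  [0, 0, 0, 0, 0, 0, 0],
  [0, 0, 0, 0, 0, 0, 0],
  [0, 0, 1, 1, 1, 0, 0],
  [0, 0, 1, 0, 1, 0, 0],
  [0, 0, 1, 1, 1, 0, 0],
  [0, 0, 0, 0, 0, 0, 0],
  [0, 0, 0, 0, 0, 0, 0]
];

var closed = closing(water);
console.log(closed.map(function(row) { return row.join(' '); }).join('\n'));
```

In this example the hole at the center is filled while the outer extent of the waterbody stays the same, which is the behavior we want when cleaning up a water map.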

Before Operation vs After Operation

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

var hydrobasins = ee.FeatureCollection("WWF/HydroSHEDS/v1/Basins/hybas_7");

var basin = hydrobasins

.filter(ee.Filter.eq('HYBAS_ID', 4071139640))

var geometry = basin.geometry()

Map.centerObject(geometry, 10)

var water = gsw.select('max_extent');

var clipped = water.clip(geometry);

var visParams = {min:0, max:1, palette: ['white','blue']};

Map.addLayer(clipped, visParams, 'Surface Water') ;

// Do unmask() to replace masked pixels with 0

// This avoids extra pixels along the edges

var clipped = clipped.unmask(0);

// Perform a morphological closing to fill holes in waterbodies

var waterProcessed = clipped

.focalMax({

'radius':30,

'units': 'meters',

'kernelType': 'square'})

.focalMin({

'radius':30,

'units': 'meters',

'kernelType': 'square'});

Map.addLayer(waterProcessed, visParams, 'Surface Water (Processed)');

Exercise

By default, the iterations parameter is 1. We can increase it as required, so that the kernel passes over the image as many times as needed.

Set the iterations parameter to 2 and execute the morphological closing operation.

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

var hydrobasins = ee.FeatureCollection("WWF/HydroSHEDS/v1/Basins/hybas_7");

var basin = hydrobasins

.filter(ee.Filter.eq('HYBAS_ID', 4071139640));

var geometry = basin.geometry();

Map.centerObject(geometry, 10);

var water = gsw.select('max_extent');

var clipped = water.clip(geometry);

var visParams = {min:0, max:1, palette: ['white','blue']};

Map.addLayer(clipped, visParams, 'Surface Water') ;

// Do unmask() to replace masked pixels with 0

// This avoids extra pixels along the edges

var clipped = clipped.unmask(0);

// Perform a morphological closing to fill holes in waterbodies

var waterProcessed = clipped

.focalMax({

'radius':30,

'units': 'meters',

'kernelType': 'square'})

.focalMin({

'radius':30,

'units': 'meters',

'kernelType': 'square'});

Map.addLayer(waterProcessed, visParams, 'Surface Water (Processed)');

// Exercise

// Replace the HYBAS_ID with the id of your selected basin

// Add an 'iterations' parameter with value 2 to

// focalMax and focalMin functions to further process the data

07. Convert Raster to Vector

After creating a final raster map, we can vectorize it. The reduceToVectors function is used with the countEvery reducer to convert a raster to a vector. It connects all linked pixels of the same class into a single feature and returns a feature collection. The eightConnected parameter controls which pixels count as linked. When it is false, as in the script below, only pixels that share an edge (cardinal directions) are connected. When it is true, pixels that touch diagonally are connected as well.
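The effect of the connectedness setting can be illustrated with a small flood-fill sketch in plain JavaScript. This runs outside Earth Engine and is only a conceptual stand-in for what reduceToVectors does when grouping pixels. The countComponents helper and the sample raster are hypothetical.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Count connected groups of 1-valued cells using a flood fill.
function countComponents(grid, eightConnected) {
  var rows = grid.length;
  var cols = grid[0].length;
  var seen = grid.map(function(row) {
    return row.map(function() { return false; });
  });
  // Cardinal neighbors, plus diagonal neighbors when eightConnected is true
  var steps = [[-1, 0], [1, 0], [0, -1], [0, 1]];
  if (eightConnected) {
    steps = steps.concat([[-1, -1], [-1, 1], [1, -1], [1, 1]]);
  }
  var count = 0;
  for (var r = 0; r < rows; r++) {
    for (var c = 0; c < cols; c++) {
      if (grid[r][c] !== 1 || seen[r][c]) continue;
      count++;
      var stack = [[r, c]];
      seen[r][c] = true;
      while (stack.length > 0) {
        var cell = stack.pop();
        steps.forEach(function(step) {
          var rr = cell[0] + step[0];
          var cc = cell[1] + step[1];
          if (rr >= 0 && rr < rows && cc >= 0 && cc < cols &&
              grid[rr][cc] === 1 && !seen[rr][cc]) {
            seen[rr][cc] = true;
            stack.push([rr, cc]);
          }
        });
      }
    }
  }
  return count;
}

// Two 2x2 water patches that touch only at a corner
var raster = [
  [1, 1, 0, 0],
  [1, 1, 0, 0],
  [0, 0, 1, 1],
  [0, 0, 1, 1]
];

var fourCount = countComponents(raster, false);
var eightCount = countComponents(raster, true);
console.log(fourCount, eightCount); // 2 1
```

With cardinal connectivity the two patches become separate polygons, while with eight-connectivity they merge into one. This is worth keeping in mind when counting waterbodies or filtering them by area.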

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

var hydrobasins = ee.FeatureCollection("WWF/HydroSHEDS/v1/Basins/hybas_7");

var basin = hydrobasins

.filter(ee.Filter.eq('HYBAS_ID', 4071139640));

var geometry = basin.geometry();

Map.centerObject(geometry, 10);

var water = gsw.select('max_extent');

var clipped = water.clip(geometry);

var visParams = {min:0, max:1, palette: ['white','blue']};

Map.addLayer(clipped, visParams, 'Surface Water', false) ;

// Do unmask() to replace masked pixels with 0

// This avoids extra pixels along the edges

var clipped = clipped.unmask(0);

// Perform a morphological closing to fill holes in waterbodies

var waterProcessed = clipped

.focal_max({

'radius':30,

'units': 'meters',

'kernelType': 'square'})

.focal_min({

'radius':30,

'units': 'meters',

'kernelType': 'square'});

var vector = waterProcessed.selfMask().reduceToVectors({

reducer: ee.Reducer.countEvery(),

geometry: geometry,

scale: 30,

maxPixels: 1e10,

eightConnected: false

});

var visParams = {color: 'blue'};

Map.addLayer(vector, visParams, 'Surface Water Polygons');

Exercise

The final feature collection will contain the class value from the pixel as the label property. Since the raster is a binary image with values 0 and 1, features with a label value of 1 represent the water bodies.

Use an eq filter to select only the water bodies.

var gsw = ee.Image("JRC/GSW1_4/GlobalSurfaceWater");

var hydrobasins = ee.FeatureCollection("WWF/HydroSHEDS/v1/Basins/hybas_7");

var basin = hydrobasins.filter(ee.Filter.eq('HYBAS_ID', 4071139640))

var geometry = basin.geometry()

Map.centerObject(geometry, 10)

var water = gsw.select('max_extent');

var clipped = water.clip(geometry)

var visParams = {min:0, max:1, palette: ['white','blue']}

Map.addLayer(clipped, visParams, 'Surface Water', false)

// Do unmask() to replace masked pixels with 0

// This avoids extra pixels along the edges

var clipped = clipped.unmask(0);

// Perform a morphological closing to fill holes in waterbodies

var waterProcessed = clipped

.focal_max({

'radius':30,

'units': 'meters',

'kernelType': 'square'})

.focal_min({

'radius':30,

'units': 'meters',

'kernelType': 'square'});

var vector = waterProcessed.selfMask().reduceToVectors({

reducer: ee.Reducer.countEvery(),

geometry: geometry,

scale: 30,

maxPixels: 1e10,

eightConnected: false

});

Map.addLayer(vector, {color: 'cyan'}, 'All Surface Water Polygons');

// Exercise

// 'vector' is a FeatureCollection of water polygons

// The following block adds a property 'area'

// to each polygon with its area in sq.meters.

var vectorArea = vector.map(function(x){

var a = ee.Feature(x).area({maxError: 1});

return x.set({'area':a});

});

// Apply a filter on the 'vectorArea' FeatureCollection

// to select water polygons larger than 1 Acre

// 1 Acre = 4046.86 sq. meters.

// Display the results on the map

Module 3: Precipitation Time Series Analysis

The main advantage of gridded precipitation datasets is that they offer a consistent long-term time-series. We will cover the basics of gridded rainfall datasets, how to aggregate them to the required temporal resolution, prepare a time-series and perform pixel-wise trend analysis.

But to fully leverage the computation power of Earth Engine for our analysis, we must first learn about techniques for writing efficient parallel computing code. The Earth Engine API uses the Map/Reduce paradigm for parallel computing.
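As a rough analogy, the two halves of the Map/Reduce paradigm can be sketched with plain JavaScript arrays. This runs outside Earth Engine (for example in Node.js) and uses ordinary client-side arrays. The Earth Engine equivalents operate on server-side objects and are introduced in the sections that follow.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
var numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10];

// Map: apply the same function to each element independently.
// Because no element depends on another, the work can be parallelized.
var squares = numbers.map(function(n) {
  return n * n;
});

// Reduce: combine all elements into a single summary value
var total = squares.reduce(function(sum, n) {
  return sum + n;
}, 0);
var mean = total / squares.length;

console.log(squares);
console.log(total, mean); // 385 38.5
```

The key idea is that the mapped function must not rely on the order in which elements are processed, which is what allows the work to be distributed across many machines.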

01. Mapping a Function

This script introduces the basics of the Earth Engine API. Here we map a function over a list of objects to perform a task on every object. A function must return its result after the computation so that the result can be stored in a variable. To learn more, visit the Earth Engine Objects and Methods section of the Earth Engine User Guide.

Apart from regular strings and numbers, Earth Engine also understands dates. The ee.Date module has many functions for defining dates or converting them from one format to another.

// Let's see how to take a list of numbers and add 1 to each element

var myList = ee.List.sequence(1, 10);

// Define a function that takes a number and adds 1 to it

var myFunction = function(number) {

return number + 1;

};

print(myFunction(1));

//Re-Define a function using Earth Engine API

var myFunction = function(number) {

return ee.Number(number).add(1);

};

// Map the function over the list

var newList = myList.map(myFunction);

print(newList);

// Let's write another function to square a number

var squareNumber = function(number) {

return ee.Number(number).pow(2);

};

var squares = myList.map(squareNumber);

print(squares);

// Extracting value from a list

var value = newList.get(0);

print(value);

// Casting

// Let's try to do some computation on the extracted value

//var newValue = value.add(1)

//print(newValue)

// You get an error because Earth Engine doesn't know the type of 'value'

// We need to cast it to appropriate type first

var value = ee.Number(value);

var newValue = value.add(1);

print(newValue);

// Dates

// For any date computation, you should use ee.Date module

var date = ee.Date.fromYMD(2019, 1, 1);

var futureDate = date.advance(1, 'year');

print(futureDate);

Exercise

Define the function createDate and map it over the weeks list. The function should return a date advanced by the given number of weeks.

02. Reducers

A Reduce operation allows you to compute statistics on a large number of inputs. In Earth Engine, you need to run a reduction operation when creating composites, calculating statistics, doing regression analysis etc. The Earth Engine API comes with a large number of built-in reducer functions (such as ee.Reducer.sum(), ee.Reducer.histogram(), ee.Reducer.linearFit() etc.) that can perform a variety of statistical operations on input data. You can run reducers using the reduce() function.

Earth Engine supports running reducers on all data structures that can hold multiple values, such as Images (reducers run on different bands), ImageCollection, FeatureCollection, List, Dictionary etc. The script below introduces basic concepts related to reducers.

// Computing stats on a list

var myList = ee.List.sequence(1, 10);

print(myList);

// Use a reducer to compute the mean of the list

var mean = myList.reduce(ee.Reducer.mean());

print(mean);

var geometry = ee.Geometry.Polygon([[

[82.60642647743225, 27.16350437805251],

[82.60984897613525, 27.1618529901377],

[82.61088967323303, 27.163695288375266],

[82.60757446289062, 27.16517483230927]

]]);

var s2 = ee.ImageCollection("COPERNICUS/S2_HARMONIZED");

Map.centerObject(geometry);

// Apply a reducer on an image collection

var filtered = s2.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date('2019-01-01', '2020-01-01'))

.filter(ee.Filter.bounds(geometry))

.select('B.*');

print(filtered.size());

var collMean = filtered.reduce(ee.Reducer.mean());

print('Reducer on Collection', collMean);

var image = ee.Image('COPERNICUS/S2_HARMONIZED/20190223T050811_20190223T051829_T44RPR');

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(image, rgbVis, 'Image');

Map.addLayer(geometry, {color: 'red'}, 'Farm');

// If we want to compute the average value in each band,

// we can use reduceRegion instead

var stats = image.reduceRegion({

reducer: ee.Reducer.mean(),

geometry: geometry,

scale: 100,

maxPixels: 1e10

});

print(stats);

// Result of reduceRegion is a dictionary.

// We can extract the values using .get() function

print('Average value in B4', stats.get('B4'));

Exercise

// Below is a list of pixel values in an image

var pixelValues = ee.List(

[1200, 2800, 1800, 1300, 3400, 2100, 1600]

);

// Exercise

// a. Divide each number by 10000 to get reflectances.

// Hint: map() a function on the list

// b. Calculate the mean and standard deviation of computed reflectances.

// Hint: reduce() the results

03. Calculating Total Rainfall

CHIRPS Pentad is a 5-day composite of rainfall at ~6 km spatial resolution. It is computed from both satellite data and ground station values. To read more about the methodology, visit nature.com

Using this image collection, let’s filter all the images for 2017. To get the yearly rainfall, we can reduce the collection using the reduce function with a sum reducer. This returns a single global image where each pixel represents the total annual rainfall in mm at that location. To get the average annual rainfall over a particular region of interest, we can use reduceRegion with a mean reducer over the region. This reduces the image over the region and returns a dictionary. The dictionary contains the average value of each band in the image, which can be extracted using the get function.
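The two reductions described here act along different axes: first over time at each pixel, then over space within a region. A minimal sketch with tiny 2x2 grids in plain JavaScript may make the distinction concrete. It runs outside Earth Engine; the rainfall values and the helper names sumOverTime and regionMean are made up for illustration.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Each inner grid is one time step (e.g. one pentad) of rainfall in mm.
var pentads = [
  [[10, 0], [5, 5]],
  [[20, 10], [0, 5]],
  [[0, 30], [15, 10]]
];

// Step 1: reduce over time. Sum the values at each pixel position,
// producing a single "total" grid.
function sumOverTime(images) {
  return images[0].map(function(row, r) {
    return row.map(function(_, c) {
      return images.reduce(function(sum, image) {
        return sum + image[r][c];
      }, 0);
    });
  });
}

// Step 2: reduce over space. Average all pixels in the grid,
// producing a single number for the region.
function regionMean(image) {
  var values = image.reduce(function(all, row) {
    return all.concat(row);
  }, []);
  var sum = values.reduce(function(s, v) { return s + v; }, 0);
  return sum / values.length;
}

var total = sumOverTime(pentads);
var average = regionMean(total);
console.log(total);   // [ [ 30, 40 ], [ 20, 20 ] ]
console.log(average); // 27.5
```

The first step keeps the spatial detail and collapses time, while the second collapses space into a single number.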

var geometry = ee.Geometry.Polygon([[

[77.43833, 13.0497],

[77.43833, 12.8141],

[77.72123, 12.8141],

[77.72123, 13.0497]

]]);

Map.addLayer(geometry, {}, 'ROI');

var chirps = ee.ImageCollection("UCSB-CHG/CHIRPS/PENTAD");

var startDate = ee.Date.fromYMD(2017, 1,1);

var endDate = startDate.advance(1, 'year');

var filtered = chirps

.filter(ee.Filter.date(startDate, endDate));

// Calculate yearly rainfall

var total = filtered.reduce(ee.Reducer.sum());

var palette = ['#ffffcc','#a1dab4','#41b6c4','#2c7fb8','#253494'];

var visParams = {

min:0,

max: 2000,

palette: palette

};

Map.addLayer(total, visParams, 'Total Precipitation');

Map.centerObject(geometry, 10);

// Calculate average rainfall across a region

var stats = total.reduceRegion({

reducer: ee.Reducer.mean(),

geometry: geometry,

scale: 5566,

});

print(stats);

print('Average rainfall across ROI :', stats.getNumber('precipitation_sum'));

Output

Average rainfall across ROI :

1345.867775034789

Exercise

Update the date range to cover the monsoon season (1 June - 30 September) in India.

04. Aggregating Time Series

In the previous section, we computed the average annual rainfall across a region. Let’s now compute a time series representing the total rainfall of each month in 2019.

Earth Engine has a function called ee.Date.fromYMD to create a date from a year, month, and day. Using this function and .advance(), we can create the start and end dates needed to filter the image collection to a particular month. Then, using the reduce function with a sum reducer, the images for that month are aggregated into a single monthly total image. This newly created image won’t have any properties, so we use the .set() function to assign the year, month, system:time_start, and system:time_end properties. Putting these steps together, we can create a function that takes a month number and returns the monthly total precipitation image.

We can create the list of months using ee.List.sequence and map the function over it. Since the mapped object is a List, the result is also a List, containing 12 images. This list of images can be converted to an Image Collection using ee.ImageCollection.fromImages. When we print the collection, it will contain 12 images, each representing a month’s total precipitation.

The system:time_start property is a special property that Earth Engine natively understands. It holds a Unix timestamp and is used to create time series charts.
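The same filter-then-sum pattern, along with the idea of a Unix timestamp in milliseconds, can be sketched in plain JavaScript. This runs outside Earth Engine. The records array, the createMonthlyTotal helper and the timeStart/timeEnd field names are hypothetical stand-ins for the image collection and its properties.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Hypothetical records: one per time step, with a UTC date and rainfall (mm)
var records = [
  {date: '2019-01-01', rain: 2},
  {date: '2019-01-06', rain: 3},
  {date: '2019-02-01', rain: 10},
  {date: '2019-02-11', rain: 5}
];

// Takes a month number (1-12) and returns the monthly total
// together with the start and end timestamps of the month.
function createMonthlyTotal(year, month) {
  // Date.UTC uses 0-based months; passing 12 rolls over into the next year
  var start = Date.UTC(year, month - 1, 1);
  var end = Date.UTC(year, month, 1);
  var total = records
    .filter(function(rec) {
      var t = Date.parse(rec.date + 'T00:00:00Z');
      // Start is inclusive and end is exclusive
      return t >= start && t < end;
    })
    .reduce(function(sum, rec) { return sum + rec.rain; }, 0);
  return {year: year, month: month, timeStart: start, timeEnd: end, total: total};
}

var monthList = [1, 2, 3];
var monthly = monthList.map(function(m) {
  return createMonthlyTotal(2019, m);
});
console.log(monthly);
```

Here timeStart for January 2019 is 1546300800000, the number of milliseconds since 1 January 1970 UTC. A month with no matching records gets a total of 0 in this sketch.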

var chirps = ee.ImageCollection("UCSB-CHG/CHIRPS/PENTAD");

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var yearFiltered = chirps

.filter(ee.Filter.date(startDate, endDate));

// CHIRPS collection has 1 image for every pentad (5-days)

// The collection is filtered for 1 year and the time-series

// now has 72 images

print(yearFiltered);

// We need to aggregate this time series to compute

// monthly images

// Create a list of months

var months = ee.List.sequence(1, 12);

// Write a function that takes a month number

// and returns a monthly image

var createMonthlyImage = function(month) {

var startDate = ee.Date.fromYMD(year, month, 1);

var endDate = startDate.advance(1, 'month');

var monthFiltered = yearFiltered

.filter(ee.Filter.date(startDate, endDate));

// Calculate total precipitation

var total = monthFiltered.reduce(ee.Reducer.sum());

return total.set({

'system:time_start': startDate.millis(),

'system:time_end': endDate.millis(),

'year': year,

'month': month});

};

// map() the function on the list of months

// This creates a list with images for each month in the list

var monthlyImages = months.map(createMonthlyImage);

// Create an imagecollection

var monthlyCollection = ee.ImageCollection.fromImages(monthlyImages);

print('Monthly Collection', monthlyCollection);

Exercise

Filter the monthlyCollection for the months June, July, August and September.

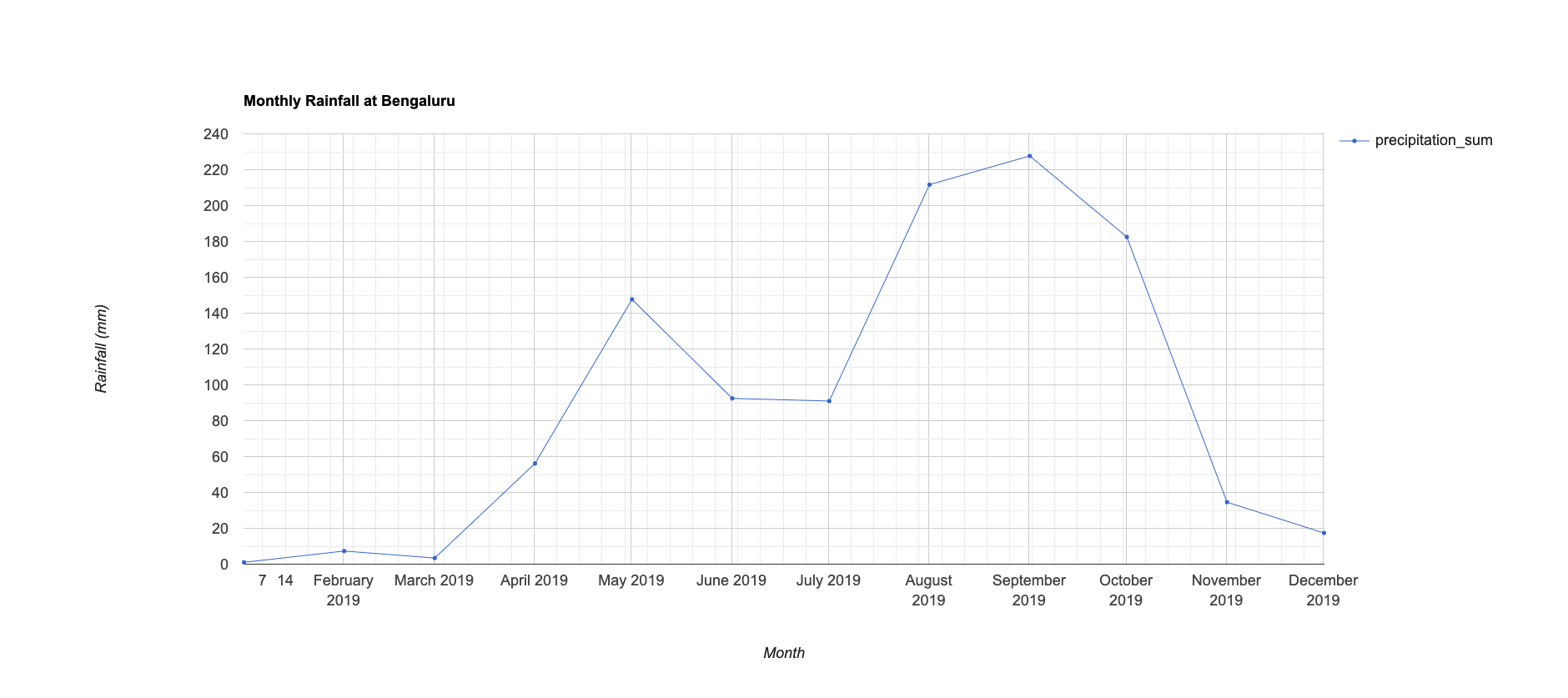

05. Charting Monthly Rainfall

Now we can put together all the skills we have learned so far (filter, map, and reduce) to create a chart of monthly total rainfall in the year 2019 over a region. The Earth Engine API supports charting functions based on the Google Charts API. Of the various chart types available, we use the ui.Chart.image.series() function to create a time-series chart.

The image series chart accepts an image collection, a geometry, a scale, and a reducer. The geometry is the region of interest. The reducer is the reduction operation used to aggregate the pixel values within the region of interest when the geometry is a polygon. The scale is the resolution at which the image should be reduced. Using .setOptions(), we can customize the chart to our needs.

Charting is a vast topic, and most of the functions are self-explanatory. The Google Earth Engine documentation provides examples for all types of charts.

var geometry = ee.Geometry.Point([77.58253382230242, 12.942038375108362]);

Map.addLayer(geometry, {}, 'POI')

var chirps = ee.ImageCollection("UCSB-CHG/CHIRPS/PENTAD")

var year = 2019

var startDate = ee.Date.fromYMD(year, 1, 1)

var endDate = startDate.advance(1, 'year')

var yearFiltered = chirps

.filter(ee.Filter.date(startDate, endDate))

// CHIRPS collection has 1 image for every pentad (5-days)

// The collection is filtered for 1 year and the time-series

// now has 72 images

print(yearFiltered)

// We need to aggregate this time series to compute

// monthly images

// Create a list of months

var months = ee.List.sequence(1, 12)

// Write a function that takes a month number

// and returns a monthly image

var createMonthlyImage = function(month) {

var startDate = ee.Date.fromYMD(year, month, 1);

var endDate = startDate.advance(1, 'month');

var monthFiltered = yearFiltered

.filter(ee.Filter.date(startDate, endDate));

// Calculate total precipitation

var total = monthFiltered.reduce(ee.Reducer.sum());

return total.set({

'system:time_start': startDate.millis(),

'system:time_end': endDate.millis(),

'year': year,

'month': month});

};

// map() the function on the list of months

// This creates a list with images for each month in the list

var monthlyImages = months.map(createMonthlyImage);

// Create an imagecollection

var monthlyCollection = ee.ImageCollection.fromImages(monthlyImages);

print(monthlyCollection);

// Create a chart of monthly rainfall for a location

var chart = ui.Chart.image.series({

imageCollection: monthlyCollection,

region: geometry,

reducer: ee.Reducer.mean(),

scale: 5566

}).setOptions({

lineWidth: 1,

pointSize: 3,

title: 'Monthly Rainfall at Bengaluru',

vAxis: {title: 'Rainfall (mm)'},

hAxis: {title: 'Month', gridlines: {count: 12}}

});

print(chart);

2019 - Monthly rainfall

Exercise

Change the geometry to your location and compute the monthly total rainfall. Download the generated time-series chart.

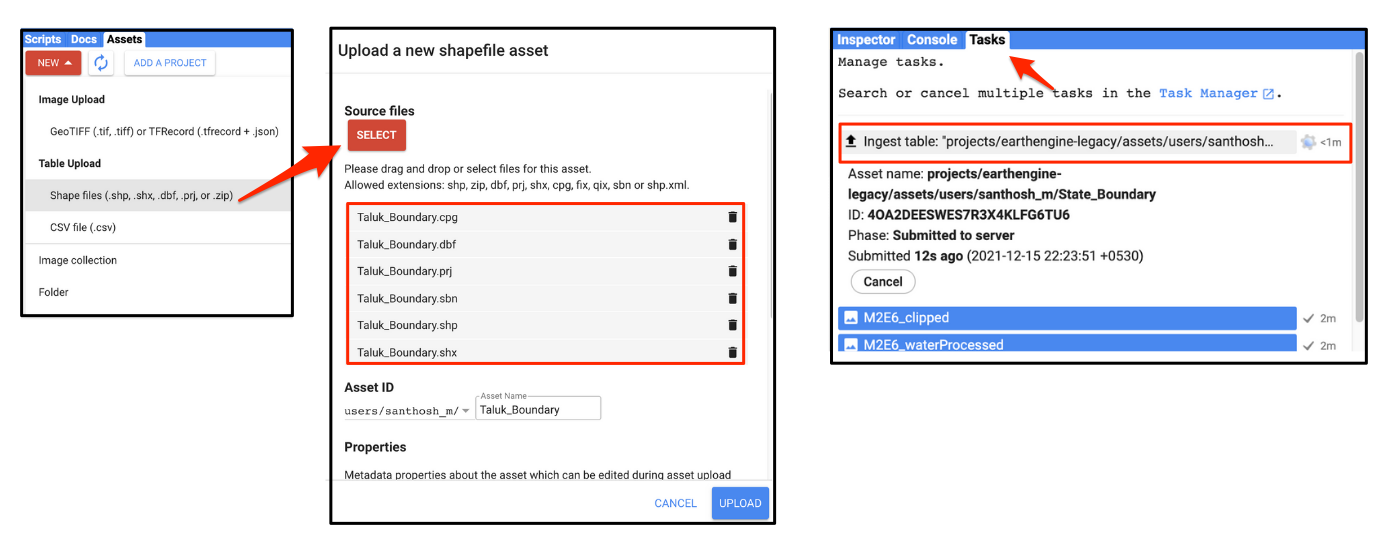

06. Import

You can import vector or raster data into Earth Engine. We will now import a shapefile of Taluks for a state in India. Unzip the Taluk.rar into a folder on your computer. In the Code Editor, go to Assets → New → Table Upload → Shape Files. Select the .cpg, .sbn, .shp, .shx, .dbf and .prj files. Enter Taluk_Boundary as the Asset Name and click Upload. In the Tasks tab, you can see the progress of the upload. Once the ingestion is complete, you will have a new asset in the Assets tab. The shapefile will be imported as a Feature Collection in Earth Engine. Select the Taluk_Boundary asset and click Import. You can then visualize the imported data.

Importing a Shapefile

// Import the collection

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

// Visualize the collection

Map.centerObject(taluks)

Map.addLayer(taluks, {color: 'gray'}, 'Taluks')

// Function to add a new property 'area_sqkm'

// Function takes a feature and returns the feature

// with a new property

var addArea = function(f) {

var area = ee.Number(f.get('SHAPE_STAr')).divide(1e6)

return f.set('area_sqkm', area)

}

var talukWithArea = taluks.map(addArea)

print(talukWithArea)

Exercise

Explore the uploaded Feature Collection. The SHAPE_STAr property contains the area in square meters. The addArea function converts it to square kilometers and stores it as area_sqkm. Using this property, filter all regions with an area greater than 1000 sq. km.

// Let's import some data to Earth Engine

// Download the 'Taluk' shapefiles from K-GIS

// https://kgis.ksrsac.in/kgis/downloads.aspx

// Uncompress and upload the 'Taluk_Boundary.shp' shapefile

// Import the collection

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

// Function to add a new property 'area_sqkm'

// Function takes a feature and returns the feature

// with a new property

var addArea = function(f) {

var area = ee.Number(f.get('SHAPE_STAr')).divide(1e6)

return f.set('area_sqkm', area)

}

var talukWithArea = taluks.map(addArea)

print(talukWithArea)

// Exercise

// Apply a filter to select all polygons with area > 1000 sq. km.

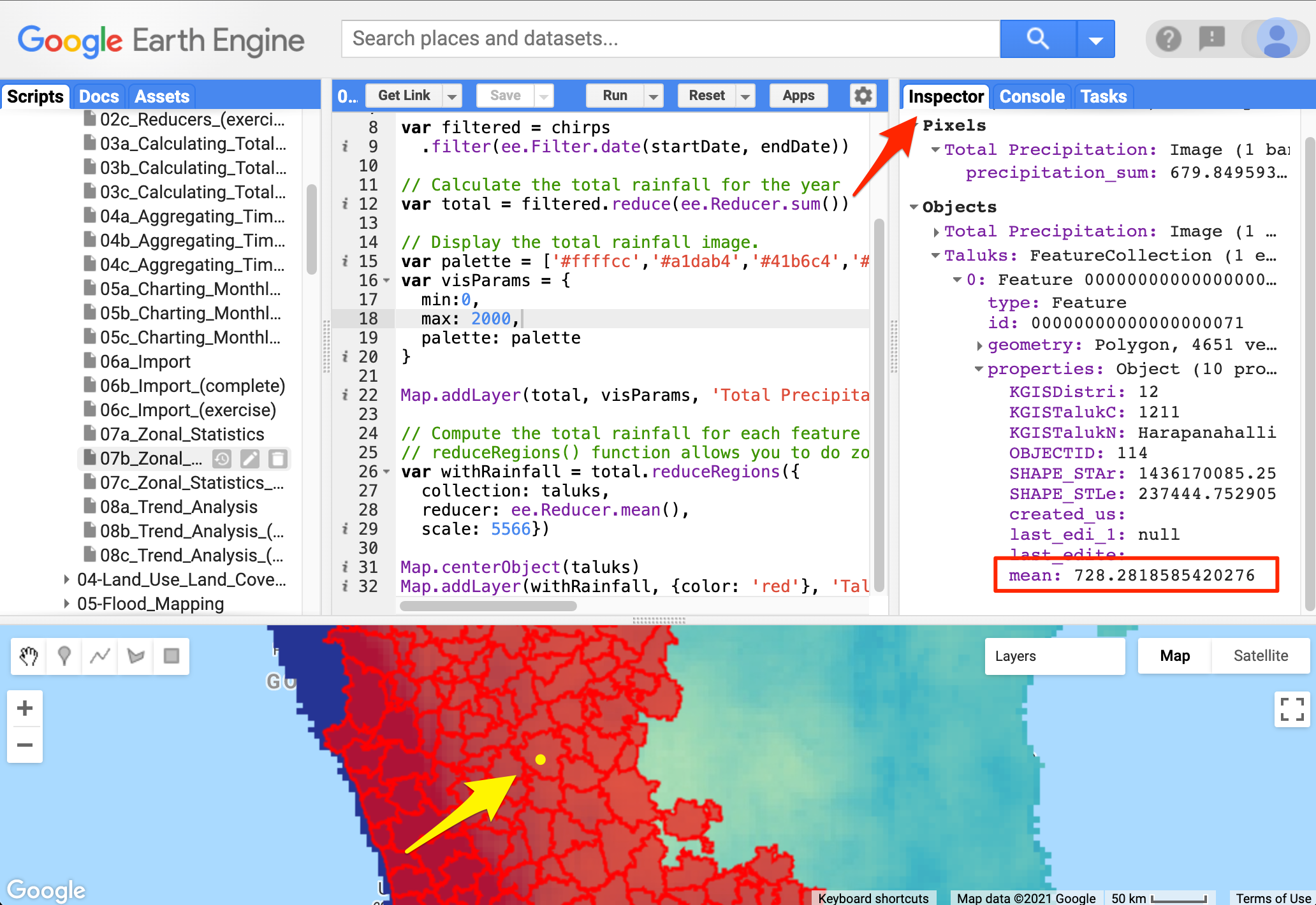

// Add the results to the map07. Zonal Statistics

Now that we have uploaded a shapefile with many Taluk boundaries, let us calculate the mean rainfall for each Taluk. Earlier, with reduceRegion, we learned to reduce a single image over a single geometry. By using reduceRegions, we can reduce the image over each geometry in a feature collection. This function returns a Feature Collection that contains all the input features, each with an additional property holding the reduced value.
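Conceptually, zonal statistics group pixel values by the zone they fall in and then reduce each group separately. The sketch below shows this in plain JavaScript, outside Earth Engine. The rainfall grid, the zone labels 'A' and 'B', and the zonalMean helper are all made up for illustration; real zones are polygons rather than a label grid.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Hypothetical annual rainfall (mm) for a 3x3 grid of pixels
var rainfall = [
  [100, 120, 300],
  [110, 130, 310],
  [ 90, 320, 330]
];

// Zone label for each pixel, standing in for polygon boundaries
var zones = [
  ['A', 'A', 'B'],
  ['A', 'A', 'B'],
  ['A', 'B', 'B']
];

// Compute the mean pixel value within each zone
function zonalMean(values, zoneIds) {
  var sums = {};
  var counts = {};
  values.forEach(function(row, r) {
    row.forEach(function(value, c) {
      var id = zoneIds[r][c];
      sums[id] = (sums[id] || 0) + value;
      counts[id] = (counts[id] || 0) + 1;
    });
  });
  var means = {};
  Object.keys(sums).forEach(function(id) {
    means[id] = sums[id] / counts[id];
  });
  return means;
}

var zoneMeans = zonalMean(rainfall, zones);
console.log(zoneMeans); // { A: 110, B: 315 }
```

The result has one value per zone, which mirrors how each feature in the output collection carries its own reduced value.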

Zonal Statistics

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var filtered = chirps

.filter(ee.Filter.date(startDate, endDate));

// Calculate the total rainfall for the year

var total = filtered.reduce(ee.Reducer.sum());

// Display the total rainfall image.

var palette = ['#ffffcc','#a1dab4','#41b6c4','#2c7fb8','#253494'];

var visParams = {

min:0,

max: 2000,

palette: palette

};

Map.addLayer(total, visParams, 'Total Precipitation');

// Compute the average total rainfall for each feature

// reduceRegions() function allows you to do zonal stats

var withRainfall = total.reduceRegions({

collection: taluks,

reducer: ee.Reducer.mean(),

scale: 5566});

Map.centerObject(taluks);

Map.addLayer(withRainfall, {color: 'red'}, 'Taluks');

Exercise

Write an export function to export the Feature Collection as a CSV file.

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year')

var filtered = chirps

.filter(ee.Filter.date(startDate, endDate));

// Calculate the total rainfall for the year

var total = filtered.reduce(ee.Reducer.sum());

// Display the total rainfall image.

var palette = ['#ffffcc','#a1dab4','#41b6c4','#2c7fb8','#253494'];

var visParams = {

min:0,

max: 2000,

palette: palette

};

Map.addLayer(total, visParams, 'Total Precipitation');

// Compute the average total rainfall for each feature

// reduceRegions() function allows you to do zonal stats

var withRainfall = total.reduceRegions({

collection: taluks,

reducer: ee.Reducer.mean(),

scale: 5566});

Map.centerObject(taluks);

Map.addLayer(withRainfall, {color: 'blue'}, 'Taluks');

// Exercise

// Export the resulting FeatureCollection with average rainfall as a CSV

// Use the Export.table.toDrive() function

// You can use the .select() function to select only columns you need

// You can also rename the columns

var exportCol = withRainfall

.select(['KGISTalukN', 'mean'], ['taluk', 'average_rainfall']);

print(exportCol);

// Export the exportCol as a CSV

// Hint: Even though you have only 2 properties for each feature,

// the CSV will include internal properties system:index and .geo (the geometry)

// To only get the data columns in your CSV, specify the list of properties

// using the 'selectors' argument in Export.table.toDrive() function

08. Trend Analysis

Trend Analysis is a technique used to find a pattern of change in data over time. Let us do a non-parametric trend analysis using the Sen's Slope technique to find the trend of rainfall over time.

The Sen's Slope is a reduction operation that expects two bands, X and Y. X is a constant band with the year as its value, and Y is the total precipitation of that pixel in that year. After creating an image collection in this format, we can use ee.Reducer.sensSlope() on the collection to compute the slope at each pixel.

This reducer returns an image where a positive pixel value represents an increase in precipitation over time and a negative value represents a decrease.

An advantage of doing trend analysis in Earth Engine is that it performs this per-pixel calculation efficiently, which would otherwise be a computationally intensive task.
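For a single pixel, the Sen's slope estimator is commonly defined as the median of the slopes between all pairs of observations. The sketch below computes it in plain JavaScript for one made-up rainfall series. It runs outside Earth Engine and only illustrates the estimator. The helper names median and senSlope and the sample values are assumptions, and the internals of ee.Reducer.sensSlope() are not shown here.

```javascript
// Plain JavaScript illustration; this is not Earth Engine code.
// Median of a list of numbers
function median(values) {
  var sorted = values.slice().sort(function(a, b) { return a - b; });
  var mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Sen's slope: median of the slopes between every pair of points
function senSlope(x, y) {
  var slopes = [];
  for (var i = 0; i < x.length; i++) {
    for (var j = i + 1; j < x.length; j++) {
      if (x[j] !== x[i]) {
        slopes.push((y[j] - y[i]) / (x[j] - x[i]));
      }
    }
  }
  return median(slopes);
}

// Hypothetical seasonal rainfall (mm) for one pixel.
// The series rises by 10 mm per year, except for an outlier in 2003.
var sampleYears = [2001, 2002, 2003, 2004, 2005];
var sampleRain = [500, 510, 900, 530, 540];

var slope = senSlope(sampleYears, sampleRain);
console.log(slope); // 10
```

Because the estimator takes a median, the single extreme year does not change the result. This robustness to outliers is a common reason for choosing a non-parametric method for rainfall trends.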

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

// We will compute the trend of total seasonal rainfall

// Rainy season is June - September

var createSeasonalImage = function(year) {

var startDate = ee.Date.fromYMD(year, 6, 1);

var endDate = ee.Date.fromYMD(year, 10, 1);

var seasonFiltered = chirps

.filter(ee.Filter.date(startDate, endDate));

// Calculate total precipitation

var total = seasonFiltered.reduce(ee.Reducer.sum());

return total.set({

'system:time_start': startDate.millis(),

'system:time_end': endDate.millis(),

'year': year,

});

};

// Aggregate Precipitation Data to Yearly Time-Series

var years = ee.List.sequence(1981, 2013);

var yearlyImages = years.map(createSeasonalImage);

print(yearlyImages);

var yearlyCol = ee.ImageCollection.fromImages(yearlyImages);

// Prepare data for Sen's Slope Estimation

// We need an image with 2 bands for X and Y variables

// X = year

// Y = total precipitation

var processImage = function(image) {

var year = image.get('year');

var yearImage = ee.Image.constant(ee.Number(year)).toShort();

return ee.Image.cat(yearImage, image).rename(['year', 'prcp']).set('year', year);

};

var processedCol = yearlyCol.map(processImage);

print(processedCol);

// Calculate time series slope using sensSlope().

var sens = processedCol.reduce(ee.Reducer.sensSlope());

// The resulting image has 2 bands: slope and offset

// We select the 'slope' band

var slope = sens.select('slope');

// Set visualisation parameters

var visParams = {min: -10, max: 10, palette: ['brown', 'white', 'blue']};

Map.addLayer(slope, visParams, 'Trend');

Exercise

The trend analysis is done for a particular season. Change the filter dates to compute the annual precipitation trend.

var taluks = ee.FeatureCollection("users/ujavalgandhi/gee-water-resources/kgis_taluks");

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

// We will compute the trend of total seasonal rainfall

// Rainy season is June - September

var createSeasonalImage = function(year) {

var startDate = ee.Date.fromYMD(year, 6, 1);

var endDate = ee.Date.fromYMD(year, 10, 1);

var seasonFiltered = chirps

.filter(ee.Filter.date(startDate, endDate));

// Calculate total precipitation

var total = seasonFiltered.reduce(ee.Reducer.sum());

return total.set({

'system:time_start': startDate.millis(),

'system:time_end': endDate.millis(),

'year': year,

});

};

// Aggregate Precipitation Data

var years = ee.List.sequence(1981, 2013);

var yearlyImages = years.map(createSeasonalImage);

print(yearlyImages);

var yearlyCol = ee.ImageCollection.fromImages(yearlyImages);

// Prepare data for Sen's Slope Estimation

// We need an image with 2 bands for X and Y variables

// X = year

// Y = total precipitation

var processImage = function(image) {

var year = image.get('year');

var yearImage = ee.Image.constant(ee.Number(year)).toShort();

return ee.Image.cat(yearImage, image).rename(['year', 'prcp']).set('year', year);

};

var processedCol = yearlyCol.map(processImage);

print(processedCol);

// Calculate time series slope using sensSlope().

var sens = processedCol.reduce(ee.Reducer.sensSlope());

// The resulting image has 2 bands: slope and offset

// We select the 'slope' band

var slope = sens.select('slope');

// Set visualisation parameters

var visParams = {min: -10, max: 10, palette: ['brown', 'white', 'blue']};

Map.addLayer(slope, visParams, 'Trend');

// Exercise

// Change the code above to compute annual precipitation trend

Module 4: Land Use Land Cover Classification

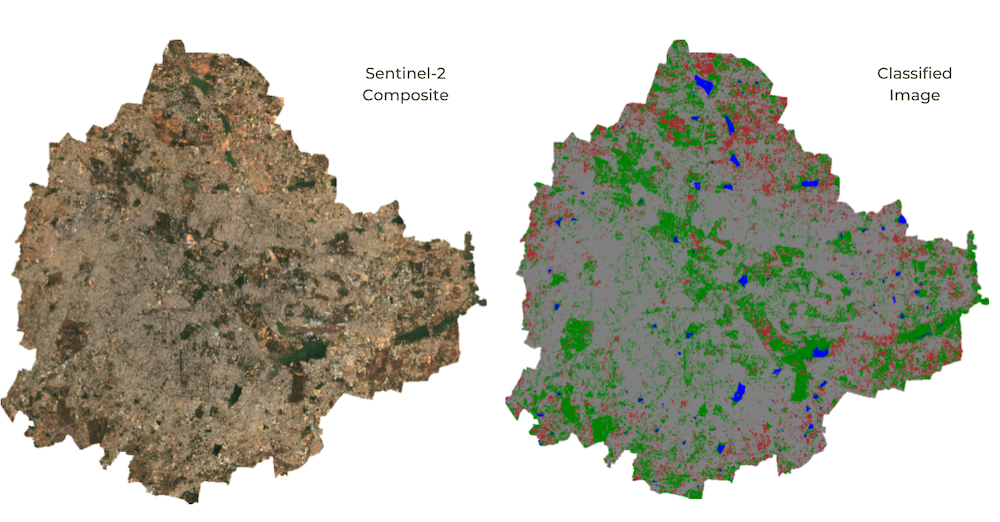

Supervised classification is one of the most popular machine learning techniques used in remote sensing. Google Earth Engine is uniquely suited for supervised classification at a large scale and offers a flexible data model that allows you to easily incorporate variables from multiple data sources. The interactive nature of Earth Engine development allows for iterative development of supervised classification workflows by combining many different datasets into the model. This module covers the basic supervised classification workflow, accuracy assessment, and area calculation.

01. Basic Supervised Classification

We will learn how to do a basic land cover classification using training points collected in the Code Editor with the help of the high-resolution basemap imagery provided by Google Maps. Even if no field data is available for training the model, this method is very effective in generating high-quality classification samples anywhere in the world. The goal is to classify each Sentinel-2 pixel into one of the following classes: urban, bare, water, or vegetation.

Using the drawing tools in the Code Editor, we create 4 new feature collections with points representing pixels of each class. Each feature collection is created with a landcover property whose value is 0, 1, 2, or 3, representing urban, bare, water, or vegetation, respectively. We use these collected samples to train a Random Forest classifier and apply it to all the image pixels to create a land cover image with 4 classes.

Fun fact: The classifiers in the Earth Engine API have names starting with smile, such as ee.Classifier.smileRandomForest(). The smile part refers to the Statistical Machine Intelligence and Learning Engine (SMILE) Java library, which is used by Google Earth Engine to implement these algorithms.

var city = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/bangalore_boundary');

var geometry = city.geometry();

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

// The following collections were created using the

// Drawing Tools in the code editor

var urban = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/urban_gcps');

var bare = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/bare_gcps');

var water = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/water_gcps');

var vegetation = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/vegetation_gcps');

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

var gcps = urban.merge(bare).merge(water).merge(vegetation);

// Overlay the points on the image to get training data.

var training = composite.sampleRegions({

collection: gcps,

properties: ['landcover'],

scale: 10

});

// Train a classifier.

var classifier = ee.Classifier.smileRandomForest(50).train({

features: training,

classProperty: 'landcover',

inputProperties: composite.bandNames()

});

// Classify the image.

var classified = composite.classify(classifier);

// Choose a 4-color palette

// Assign a color for each class in the following order

// Urban, Bare, Water, Vegetation

var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

// Select the Water class

var waterClass = classified.eq(2);

var waterVis = {min:0, max:1, palette: ['white', 'blue']};

Map.addLayer(waterClass.clip(geometry), waterVis, 'Water', false);

// Display the GCPs

// We use the style() function to style the GCPs

var palette = ee.List(palette);

var landcover = ee.List([0, 1, 2, 3]);

var gcpsStyled = ee.FeatureCollection(

landcover.map(function(lc){

var color = palette.get(landcover.indexOf(lc));

var markerStyle = { color: 'white', pointShape: 'diamond',

pointSize: 4, width: 1, fillColor: color};

return gcps.filter(ee.Filter.eq('landcover', lc))

.map(function(point){

return point.set('style', markerStyle);

});

})).flatten();

Map.addLayer(gcpsStyled.style({styleProperty:"style"}), {}, 'GCPs');

Map.centerObject(gcpsStyled);

Supervised Classification Output
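To make the compositing step in the script more concrete: for every pixel, observations whose Cloud Score+ `cs` value falls below the threshold are masked, and the median of the remaining values is taken band by band. The plain JavaScript sketch below mimics this for a single pixel and a single band. The observation values are invented, and `maskLowScores` and `median` are hypothetical helpers written for this illustration, not Earth Engine functions.

```javascript
// Hypothetical time series for one pixel: B4 value and its cs score.
var observations = [
  {B4: 900, cs: 0.92},
  {B4: 4200, cs: 0.15},  // cloudy
  {B4: 1000, cs: 0.71},
  {B4: 4500, cs: 0.20},  // cloudy
  {B4: 3900, cs: 0.30}   // hazy
];

// Keep only observations whose score is at or above the threshold,
// mirroring image.select('cs').gte(clearThreshold) in the script.
function maskLowScores(series, clearThreshold) {
  return series.filter(function(obs) {
    return obs.cs >= clearThreshold;
  });
}

// Median of a list of numbers. Returns null when nothing is left,
// which plays the role of a masked pixel in this sketch.
function median(values) {
  if (values.length === 0) {
    return null;
  }
  var sorted = values.slice().sort(function(a, b) { return a - b; });
  var mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

var clearObservations = maskLowScores(observations, 0.5);
var compositeValue = median(clearObservations.map(function(obs) {
  return obs.B4;
}));
var unmaskedValue = median(observations.map(function(obs) {
  return obs.B4;
}));
```

In this made-up series the masked median is 950, while the median of all five values would be 3900, because most of the observations are cloudy. Raising the threshold masks more aggressively at the cost of leaving fewer observations per pixel.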

Exercise

Select a city of your choice from the urbanAreas data. Create a feature collection with the property landcover for each of the four classes (urban, bare, water, and vegetation) and collect training points for it. Then use the code to classify the image and create a land cover map of the city.

// Perform supervised classification for your city

// Delete the geometry below and draw a polygon

// over your chosen city

var geometry = ee.Geometry.Polygon([[

[77.4149, 13.1203],

[77.4149, 12.7308],

[77.8090, 12.7308],

[77.8090, 13.1203]

]]);

Map.centerObject(geometry);

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

var rgbVis = {min: 0.0, max: 3000, bands: ['B4', 'B3', 'B2']};

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Exercise

// Add training points for 4 classes

// Assign the 'landcover' property as follows

// urban: 0

// bare: 1

// water: 2

// vegetation: 3

// After adding points, uncomment the lines below

// var gcps = urban.merge(bare).merge(water).merge(vegetation);

// // Overlay the points on the image to get training data.

// var training = composite.sampleRegions({

// collection: gcps,

// properties: ['landcover'],

// scale: 10,

// tileScale: 16

// });

// print(training);

// // Train a classifier.

// var classifier = ee.Classifier.smileRandomForest(50).train({

// features: training,

// classProperty: 'landcover',

// inputProperties: composite.bandNames()

// });

// // Classify the image.

// var classified = composite.classify(classifier);

// // Choose a 4-color palette

// // Assign a color for each class in the following order

// // Urban, Bare, Water, Vegetation

// var palette = ['#cc6d8f', '#ffc107', '#1e88e5', '#004d40' ];

// Map.addLayer(classified.clip(geometry), {min: 0, max: 3, palette: palette}, 'Classification');

// // Select the Water class

// var waterClass = classified.eq(2);

// var waterVis = {min:0, max:1, palette: ['white', 'blue']};

// Map.addLayer(waterClass.clip(geometry), waterVis, 'Water', false);

02. Accuracy Assessment

It is important to get a quantitative estimate of the accuracy of the classification. To do this, a common strategy is to divide your training samples into 2 random fractions: one used for training the model and the other for validation of the predictions. Once a classifier is trained, it can be used to classify the entire image. We can then compare the classified values with the ones in the validation fraction. We can use the ee.FeatureCollection.errorMatrix() method on the classified validation samples to calculate a confusion matrix representing expected accuracy. The related ee.Classifier.confusionMatrix() method instead reports accuracy on the training data.
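The random split can be sketched in plain JavaScript, assuming each sample receives a uniform random number between 0 and 1 and a threshold decides which fraction it belongs to. The `makeRandom` and `splitSamples` helpers and the sample list are hypothetical. The small seeded generator is included only to make the example repeatable.

```javascript
// Small deterministic pseudo-random generator (a linear congruential
// generator), used here only so that the split is repeatable.
function makeRandom(seed) {
  var state = seed % 2147483647;
  if (state <= 0) {
    state += 2147483646;
  }
  return function() {
    state = (state * 16807) % 2147483647;
    return (state - 1) / 2147483646;  // value in [0, 1)
  };
}

// Attach a random value to every sample, then split on a threshold.
function splitSamples(samples, trainingFraction, seed) {
  var random = makeRandom(seed);
  var training = [];
  var validation = [];
  samples.forEach(function(sample) {
    var withRandom = {
      id: sample.id,
      landcover: sample.landcover,
      random: random()
    };
    if (withRandom.random < trainingFraction) {
      training.push(withRandom);
    } else {
      validation.push(withRandom);
    }
  });
  return {training: training, validation: validation};
}

// 100 hypothetical samples spread over the 4 classes.
var allSamples = [];
for (var i = 0; i < 100; i++) {
  allSamples.push({id: i, landcover: i % 4});
}

var split = splitSamples(allSamples, 0.6, 42);
```

The two parts are disjoint and together cover every sample. Because the assignment is random, their sizes are only approximately 60% and 40% of the total.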

Don’t get carried away tweaking your model to give you the highest validation accuracy. You must use both qualitative measures (such as visual inspection of results) along with quantitative measures to assess the results.
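To show what a confusion matrix contains, here is a minimal plain JavaScript sketch that builds one from pairs of actual and predicted class codes and derives the overall accuracy from it. The validation pairs are invented for illustration, and `buildConfusionMatrix` and `overallAccuracy` are hypothetical helper names. In this sketch, rows are actual classes and columns are predicted classes.

```javascript
// Build a numClasses x numClasses matrix of counts where
// rows are actual classes and columns are predicted classes.
function buildConfusionMatrix(pairs, numClasses) {
  var matrix = [];
  for (var r = 0; r < numClasses; r++) {
    var row = [];
    for (var c = 0; c < numClasses; c++) {
      row.push(0);
    }
    matrix.push(row);
  }
  pairs.forEach(function(p) {
    matrix[p.actual][p.predicted] += 1;
  });
  return matrix;
}

// Overall accuracy = correctly classified samples / all samples.
function overallAccuracy(matrix) {
  var correct = 0;
  var total = 0;
  matrix.forEach(function(row, r) {
    row.forEach(function(count, c) {
      total += count;
      if (r === c) {
        correct += count;
      }
    });
  });
  return total === 0 ? 0 : correct / total;
}

// Hypothetical validation results
// (0: urban, 1: bare, 2: water, 3: vegetation).
var validationPairs = [
  {actual: 0, predicted: 0}, {actual: 0, predicted: 0},
  {actual: 0, predicted: 1},
  {actual: 1, predicted: 1}, {actual: 1, predicted: 0},
  {actual: 2, predicted: 2}, {actual: 2, predicted: 2},
  {actual: 3, predicted: 3}, {actual: 3, predicted: 3},
  {actual: 3, predicted: 3}
];

var confusion = buildConfusionMatrix(validationPairs, 4);
var accuracy = overallAccuracy(confusion);
```

In this example 8 of the 10 samples fall on the diagonal, so the overall accuracy is 0.8. The off-diagonal cells show that the errors come from urban and bare being confused with each other, which is the kind of detail a single accuracy number hides.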

// Load training samples

// This was created by exporting the merged 'gcps' collection

// using Export.table.toAsset()

var gcps = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/exported_gcps');

// Load the region boundary of a basin

var boundary = ee.FeatureCollection('projects/spatialthoughts/assets/e2e/basin_boundary');

var geometry = boundary.geometry();

Map.centerObject(geometry);

var rgbVis = {

min: 0.0,

max: 3000,

bands: ['B4', 'B3', 'B2'],

};

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

var endDate = startDate.advance(1, 'year');

var s2 = ee.ImageCollection('COPERNICUS/S2_SR_HARMONIZED');

var filtered = s2

.filter(ee.Filter.lt('CLOUDY_PIXEL_PERCENTAGE', 30))

.filter(ee.Filter.date(startDate, endDate))

.filter(ee.Filter.bounds(geometry));

// Load the Cloud Score+ collection

var csPlus = ee.ImageCollection('GOOGLE/CLOUD_SCORE_PLUS/V1/S2_HARMONIZED');

var csPlusBands = csPlus.first().bandNames();

// We need to add Cloud Score + bands to each Sentinel-2

// image in the collection

// This is done using the linkCollection() function

var filteredS2WithCs = filtered.linkCollection(csPlus, csPlusBands);

// Function to mask pixels with low CS+ QA scores.

function maskLowQA(image) {

var qaBand = 'cs';

var clearThreshold = 0.5;

var mask = image.select(qaBand).gte(clearThreshold);

return image.updateMask(mask);

}

var filteredMasked = filteredS2WithCs

.map(maskLowQA)

.select('B.*');

var composite = filteredMasked.median();

// Display the input composite.

Map.addLayer(composite.clip(geometry), rgbVis, 'image');

// Add a random column and split the GCPs into training and validation set

var gcps = gcps.randomColumn();

// This being a simpler classification, we take 60% of the points

// for training and 40% for validation. The normally recommended

// ratio is 70% training, 30% validation

var trainingGcp = gcps.filter(ee.Filter.lt('random', 0.6));

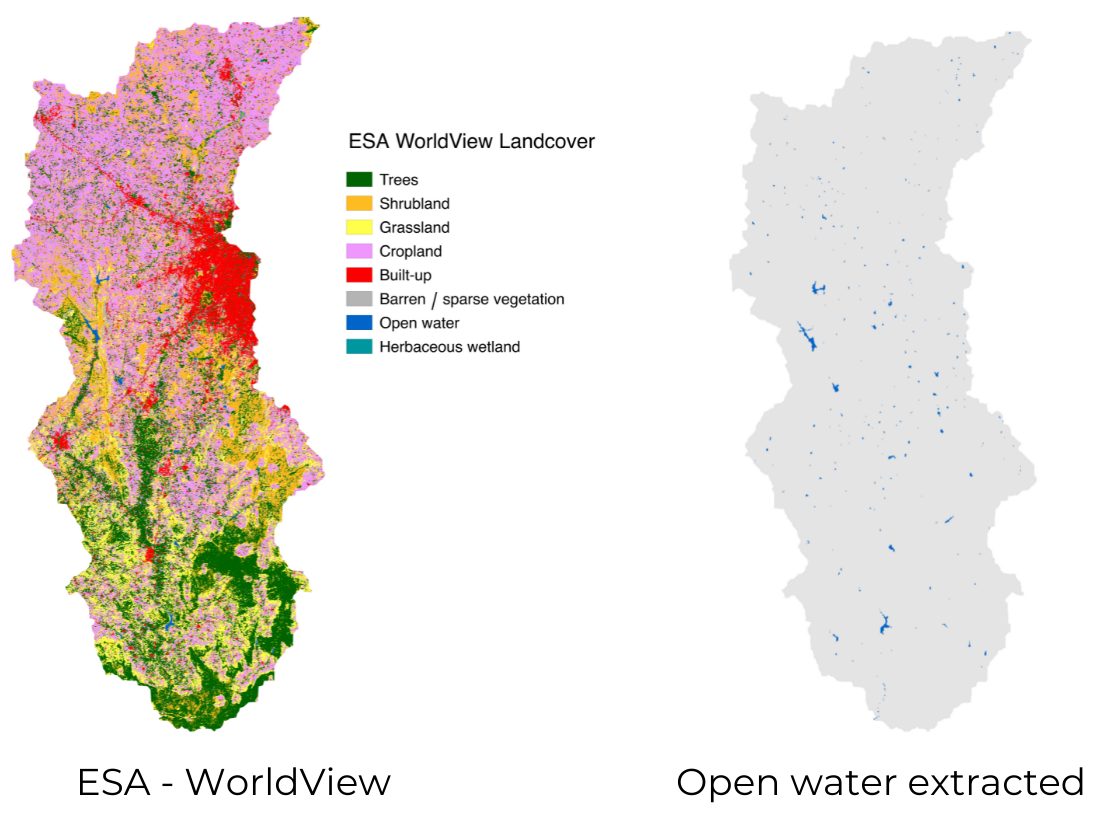

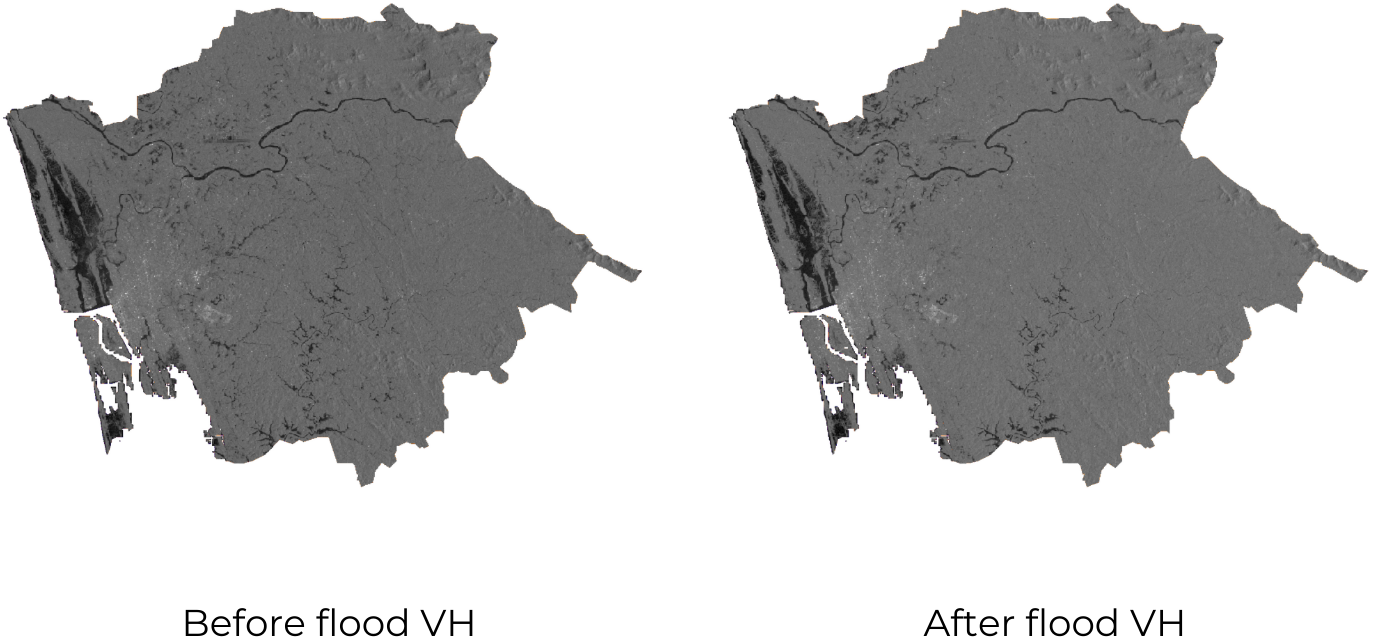

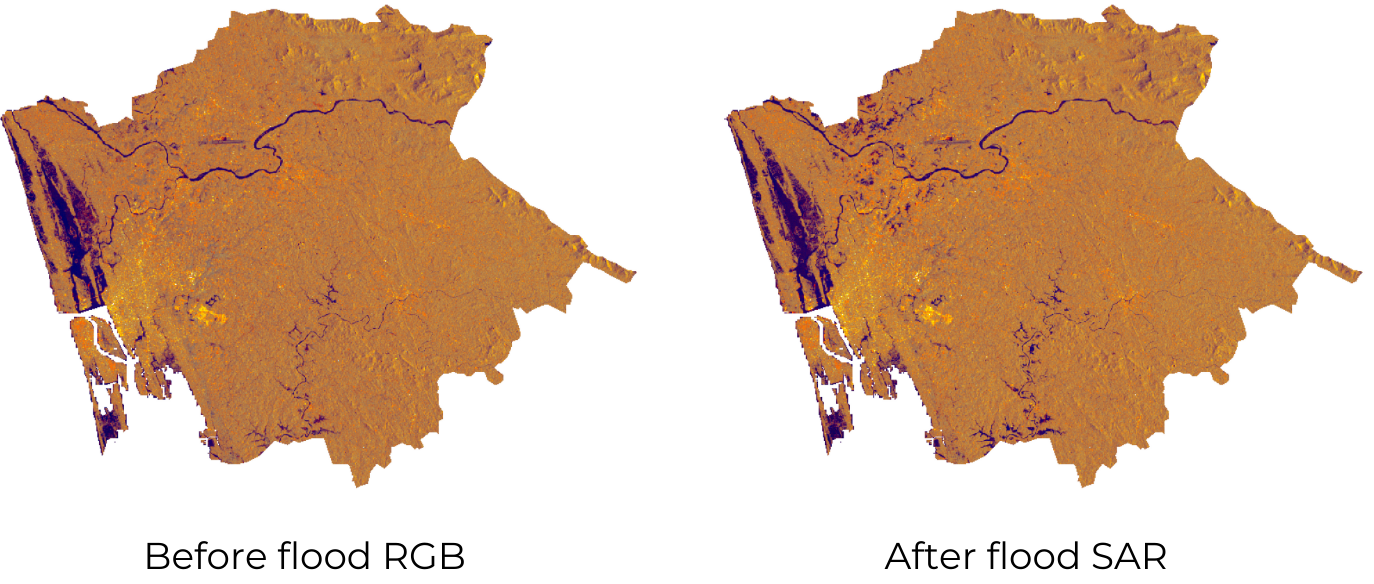

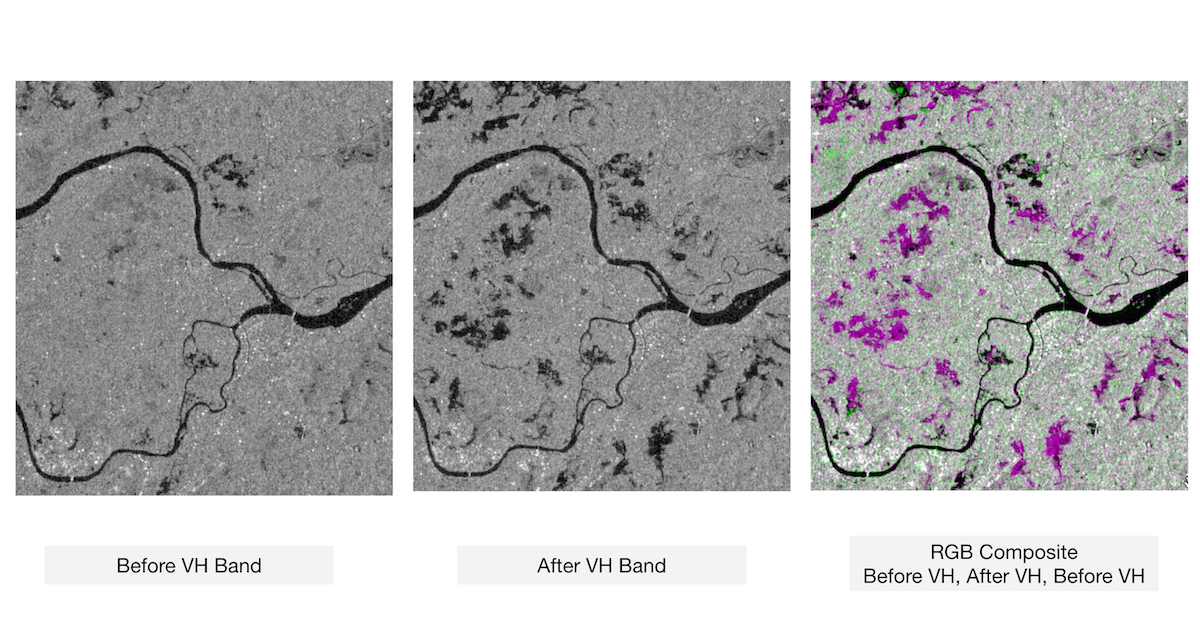

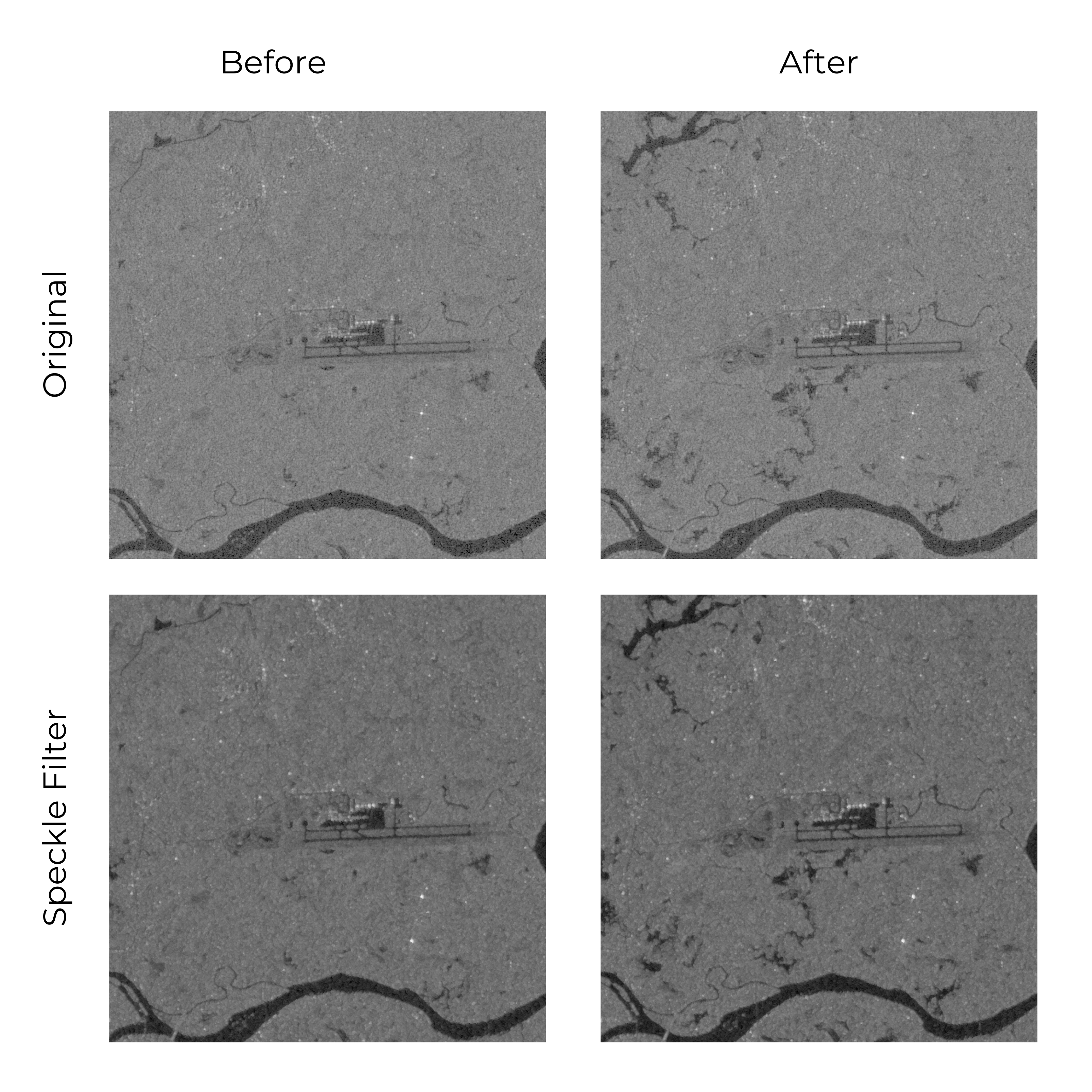

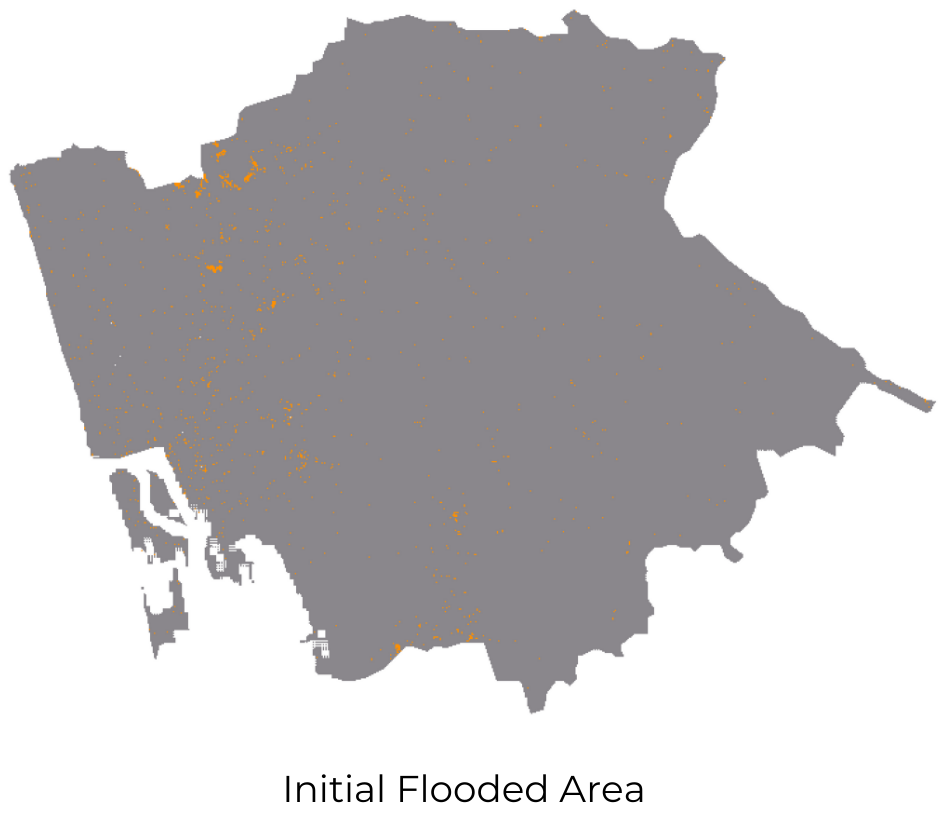

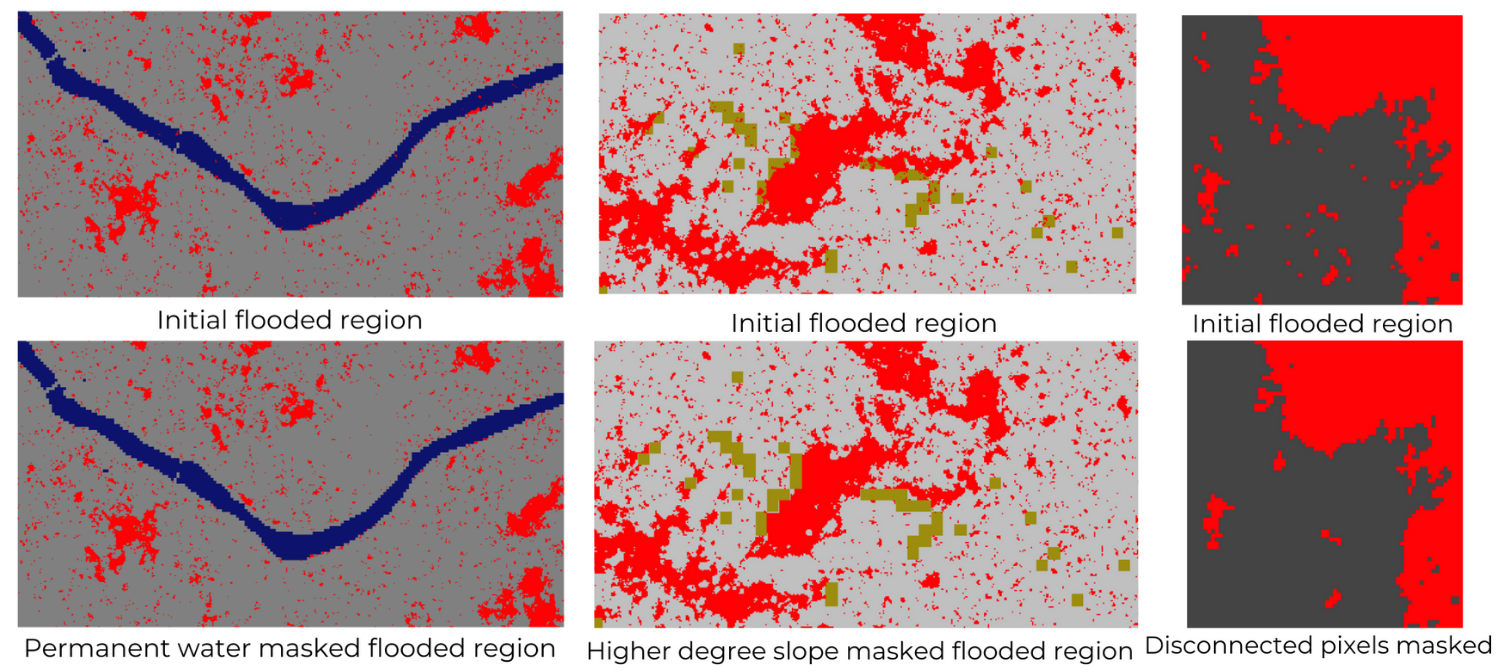

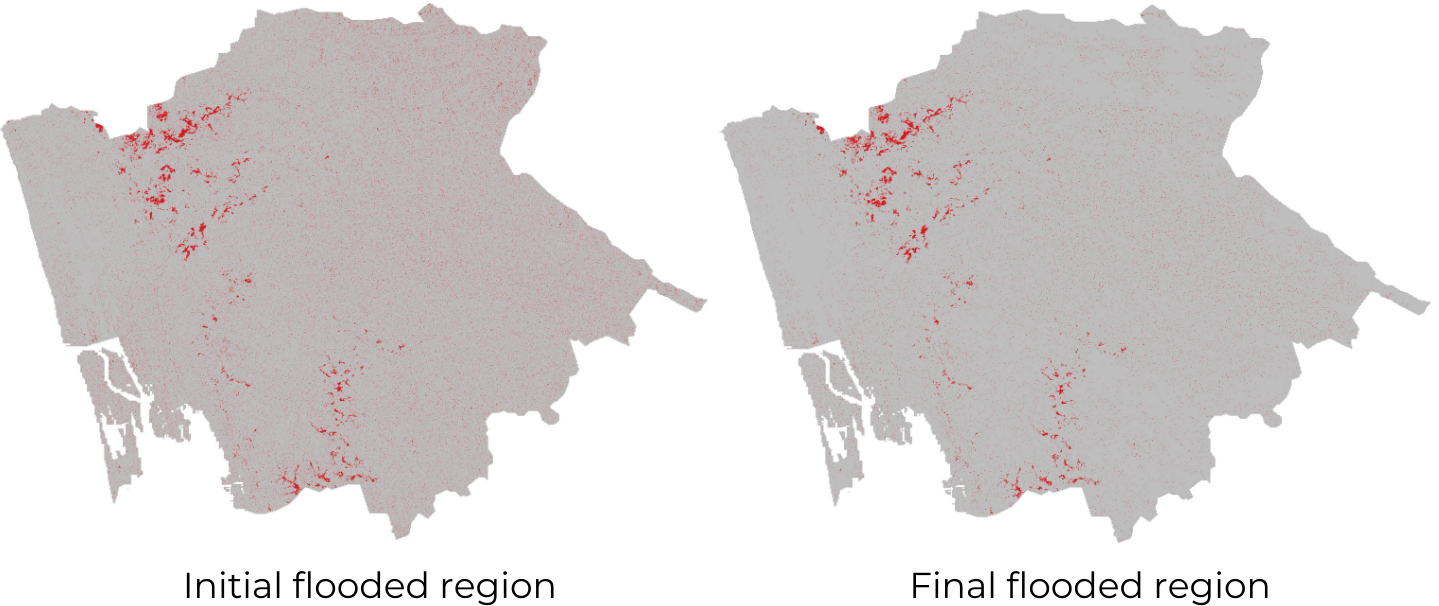

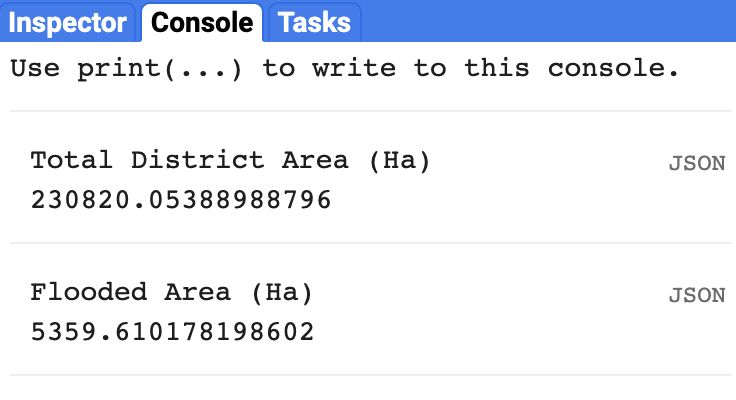

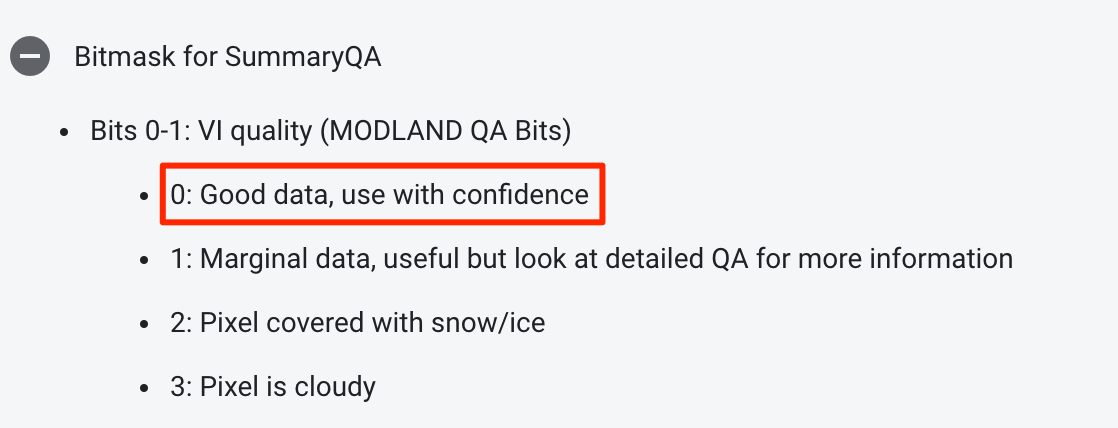

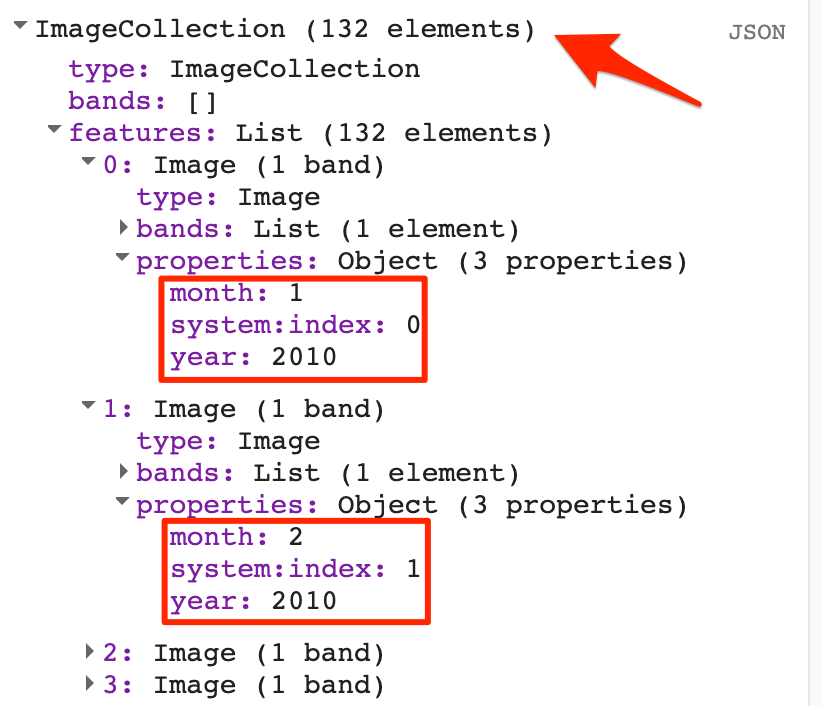

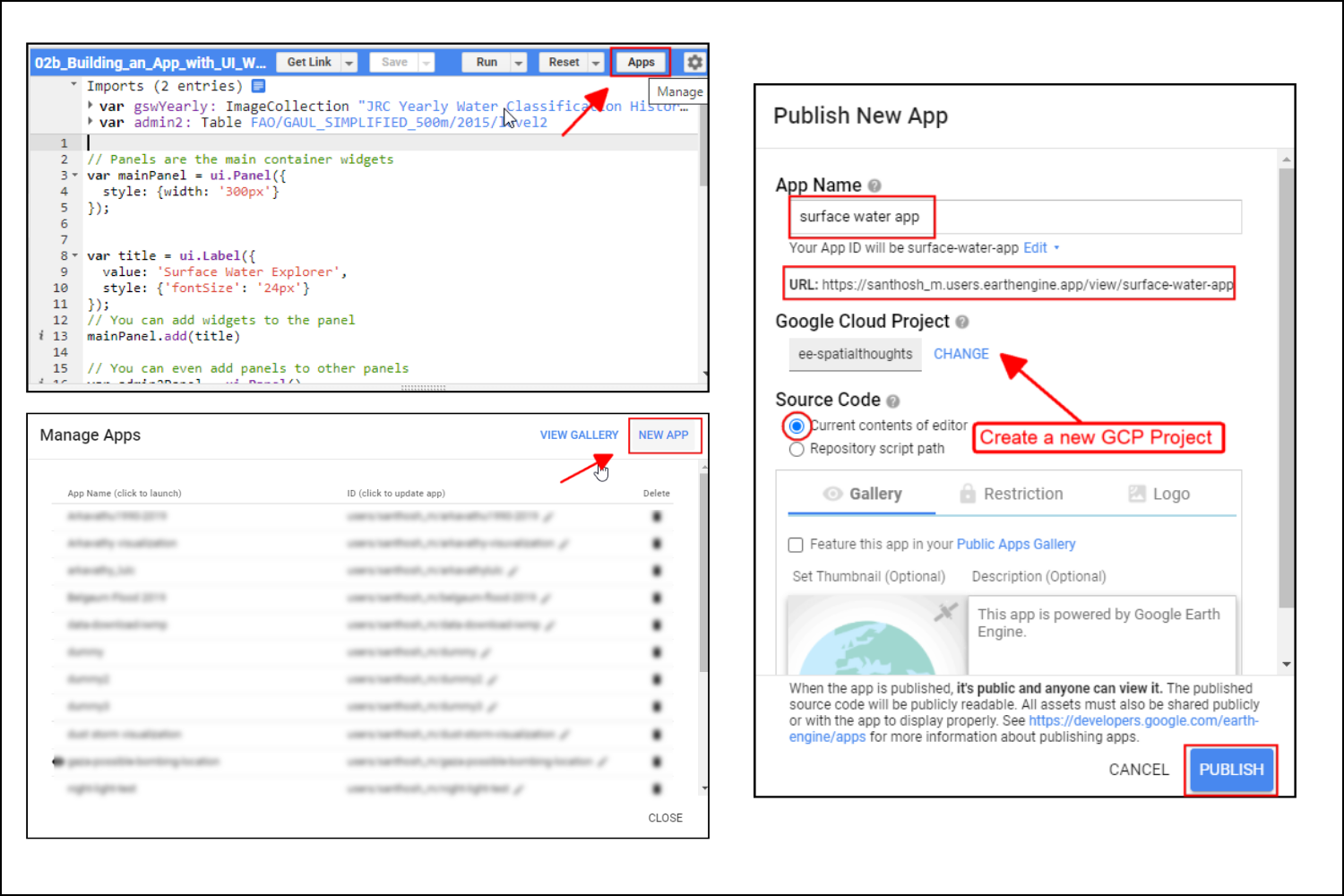

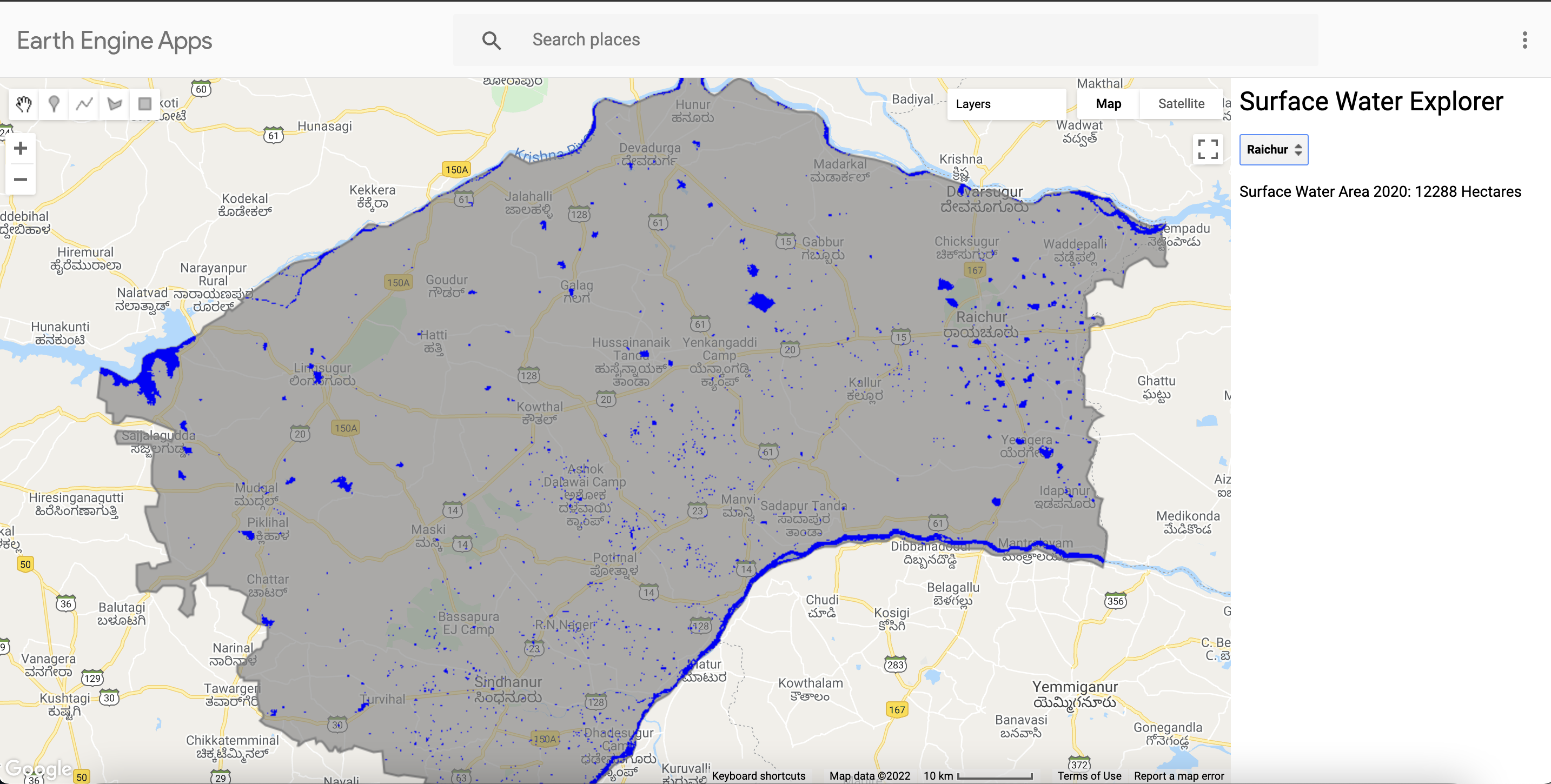

var validationGcp = gcps.filter(ee.Filter.gte('random', 0.6));